Mirror of https://github.com/THU-MIG/yolov10.git, synced 2026-01-05 06:15:28 +08:00

Add distance calculation feature in vision-eye (#8616)

Signed-off-by: Glenn Jocher <glenn.jocher@ultralytics.com>
Co-authored-by: Muhammad Rizwan Munawar <chr043416@gmail.com>
Co-authored-by: UltralyticsAssistant <web@ultralytics.com>

parent 36408c974c
commit 906b8d31dc

@ -12,10 +12,10 @@ keywords: Ultralytics, YOLOv8, Object Detection, Object Tracking, IDetection, Vi

|

||||

|

||||

## Samples

|

||||

|

||||

| VisionEye View | VisionEye View With Object Tracking |

|

||||

|:------------------------------------------------------------------------------------------------------------------------------------------------------------:|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------:|

|

||||

|  |  |

|

||||

| VisionEye View Object Mapping using Ultralytics YOLOv8 | VisionEye View Object Mapping with Object Tracking using Ultralytics YOLOv8 |

|

||||

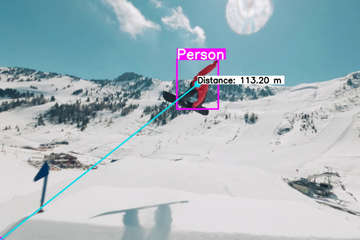

| VisionEye View | VisionEye View With Object Tracking | VisionEye View With Distance Calculation |

|

||||

|:------------------------------------------------------------------------------------------------------------------------------------------------------------:|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------:|:-------------------------------------------------------------------------------------------------------------------------------------------------------------------------:|

|

||||

|  |  |  |

|

||||

| VisionEye View Object Mapping using Ultralytics YOLOv8 | VisionEye View Object Mapping with Object Tracking using Ultralytics YOLOv8 | VisionEye View with Distance Calculation using Ultralytics YOLOv8 |

|

||||

|

||||

!!! Example "VisionEye Object Mapping using YOLOv8"

|

||||

|

||||

@@ -105,6 +105,63 @@ keywords: Ultralytics, YOLOv8, Object Detection, Object Tracking, IDetection, Vi
         cap.release()
         cv2.destroyAllWindows()
         ```
 
+    === "VisionEye with Distance Calculation"
+
+        ```python
+        import cv2
+        import math
+        from ultralytics import YOLO
+        from ultralytics.utils.plotting import Annotator, colors
+
+        model = YOLO("yolov8s.pt")
+        cap = cv2.VideoCapture("Path/to/video/file.mp4")
+
+        w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
+
+        out = cv2.VideoWriter('visioneye-distance-calculation.avi', cv2.VideoWriter_fourcc(*'MJPG'), fps, (w, h))
+
+        center_point = (0, h)
+        pixel_per_meter = 10
+
+        txt_color, txt_background, bbox_clr = ((0, 0, 0), (255, 255, 255), (255, 0, 255))
+
+        while True:
+            ret, im0 = cap.read()
+            if not ret:
+                print("Video frame is empty or video processing has been successfully completed.")
+                break
+
+            annotator = Annotator(im0, line_width=2)
+
+            results = model.track(im0, persist=True)
+            boxes = results[0].boxes.xyxy.cpu()
+
+            if results[0].boxes.id is not None:
+                track_ids = results[0].boxes.id.int().cpu().tolist()
+
+                for box, track_id in zip(boxes, track_ids):
+                    annotator.box_label(box, label=str(track_id), color=bbox_clr)
+                    annotator.visioneye(box, center_point)
+
+                    x1, y1 = int((box[0] + box[2]) // 2), int((box[1] + box[3]) // 2) # Bounding box centroid
+
+                    distance = (math.sqrt((x1 - center_point[0]) ** 2 + (y1 - center_point[1]) ** 2))/pixel_per_meter
+
+                    text_size, _ = cv2.getTextSize(f"Distance: {distance:.2f} m", cv2.FONT_HERSHEY_SIMPLEX,1.2, 3)
+                    cv2.rectangle(im0, (x1, y1 - text_size[1] - 10),(x1 + text_size[0] + 10, y1), txt_background, -1)
+                    cv2.putText(im0, f"Distance: {distance:.2f} m",(x1, y1 - 5), cv2.FONT_HERSHEY_SIMPLEX, 1.2,txt_color, 3)
+
+            out.write(im0)
+            cv2.imshow("visioneye-distance-calculation", im0)
+
+            if cv2.waitKey(1) & 0xFF == ord('q'):
+                break
+
+        out.release()
+        cap.release()
+        cv2.destroyAllWindows()
+        ```
+
 ### `visioneye` Arguments
 
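The distance step in the added example can be looked at in isolation. The sketch below is not part of the commit: the function name `pixel_distance_to_meters` and its parameter names are hypothetical, and it only restates the arithmetic from the hunk above (bounding-box centroid, Euclidean distance to a reference point, division by a pixels-per-meter constant). It assumes, as the example does, that a single fixed scale such as `pixel_per_meter = 10` is adequate for the scene.

```python
import math


def pixel_distance_to_meters(box, origin, pixels_per_meter=10):
    """Hypothetical helper mirroring the distance math in the example.

    box: (x1, y1, x2, y2) bounding-box corners in pixels.
    origin: (x, y) reference point in pixels, e.g. (0, frame_height).
    pixels_per_meter: assumed calibration constant (10 in the example).
    """
    # Bounding-box centroid, using floor division as the example does.
    cx = int((box[0] + box[2]) // 2)
    cy = int((box[1] + box[3]) // 2)
    # Euclidean distance in the image plane, scaled to meters.
    return math.hypot(cx - origin[0], cy - origin[1]) / pixels_per_meter


# A box centred at (300, 100) in a frame 500 px tall, with the reference
# point at the bottom-left corner (0, 500), is 500 px away, i.e. 50.0 m.
distance = pixel_distance_to_meters((250, 50, 350, 150), (0, 500))
print(f"Distance: {distance:.2f} m")
```

Because the scale is a single constant, the result is a distance in the image plane rather than a depth-aware measurement; it is only as accurate as the chosen `pixels_per_meter` value is for the camera setup.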

@@ -14,3 +14,7 @@ keywords: Ultralytics YOLO, YOLO, YOLO model, Model Training, Machine Learning,
 ## ::: ultralytics.models.yolo.model.YOLO
 
 <br><br>
+
+## ::: ultralytics.models.yolo.model.YOLOWorld
+
+<br><br>

@@ -86,3 +86,23 @@ keywords: YOLO, Ultralytics, neural network, nn.modules.block, Proto, HGBlock, S
 ## ::: ultralytics.nn.modules.block.ResNetLayer
 
 <br><br>
+
+## ::: ultralytics.nn.modules.block.MaxSigmoidAttnBlock
+
+<br><br>
+
+## ::: ultralytics.nn.modules.block.C2fAttn
+
+<br><br>
+
+## ::: ultralytics.nn.modules.block.ImagePoolingAttn
+
+<br><br>
+
+## ::: ultralytics.nn.modules.block.ContrastiveHead
+
+<br><br>
+
+## ::: ultralytics.nn.modules.block.BNContrastiveHead
+
+<br><br>

@@ -31,6 +31,10 @@ keywords: Ultralytics, YOLO, Detection, Pose, RTDETRDecoder, nn modules, guides
 
 <br><br>
 
+## ::: ultralytics.nn.modules.head.WorldDetect
+
+<br><br>
+
 ## ::: ultralytics.nn.modules.head.RTDETRDecoder
 
 <br><br>

@@ -39,6 +39,10 @@ keywords: Ultralytics, YOLO, nn tasks, DetectionModel, PoseModel, RTDETRDetectio
 
 <br><br>
 
+## ::: ultralytics.nn.tasks.WorldModel
+
+<br><br>
+
 ## ::: ultralytics.nn.tasks.Ensemble
 
 <br><br>

@@ -107,7 +107,7 @@ def export_formats():
         ["TensorFlow Edge TPU", "edgetpu", "_edgetpu.tflite", True, False],
         ["TensorFlow.js", "tfjs", "_web_model", True, False],
         ["PaddlePaddle", "paddle", "_paddle_model", True, True],
-        ["ncnn", "ncnn", "_ncnn_model", True, True],
+        ["NCNN", "ncnn", "_ncnn_model", True, True],
     ]
     return pandas.DataFrame(x, columns=["Format", "Argument", "Suffix", "CPU", "GPU"])
 
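To make the effect of the last hunk concrete, the sketch below rebuilds only the four rows visible in it (the full table in the source file has more entries) and looks a row up by its `Argument` value. It uses plain lists rather than `pandas`, and the helper name `suffix_for` is hypothetical. Only the display name in the `Format` column changes from `ncnn` to `NCNN`; the `Argument` and `Suffix` values are the same before and after.

```python
# Column names as passed to pandas.DataFrame in export_formats().
COLUMNS = ["Format", "Argument", "Suffix", "CPU", "GPU"]

# Only the rows visible in the hunk, in their post-commit form.
ROWS = [
    ["TensorFlow Edge TPU", "edgetpu", "_edgetpu.tflite", True, False],
    ["TensorFlow.js", "tfjs", "_web_model", True, False],
    ["PaddlePaddle", "paddle", "_paddle_model", True, True],
    ["NCNN", "ncnn", "_ncnn_model", True, True],
]


def suffix_for(argument):
    """Hypothetical helper: return the Suffix for a given Argument value."""
    for row in ROWS:
        record = dict(zip(COLUMNS, row))
        if record["Argument"] == argument:
            return record["Suffix"]
    raise KeyError(argument)


# The lookup key is the lowercase argument, which the commit leaves as-is.
print(suffix_for("ncnn"))
```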
