Mirror of https://github.com/THU-MIG/yolov10.git, synced 2026-01-01 03:25:39 +08:00

ultralytics 8.0.196 instance-mean Segment loss (#5285)

Co-authored-by: Andy <39454881+yermandy@users.noreply.github.com>

parent 7517667a33

commit e7f0658744

@ -1,7 +1,7 @@

|

|||||||

# Ultralytics YOLO 🚀, AGPL-3.0 license

|

# Ultralytics YOLO 🚀, AGPL-3.0 license

|

||||||

# Pre-commit hooks. For more information see https://github.com/pre-commit/pre-commit-hooks/blob/main/README.md

|

# Pre-commit hooks. For more information see https://github.com/pre-commit/pre-commit-hooks/blob/main/README.md

|

||||||

|

|

||||||

exclude: 'docs/'

|

# exclude: 'docs/'

|

||||||

# Define bot property if installed via https://github.com/marketplace/pre-commit-ci

|

# Define bot property if installed via https://github.com/marketplace/pre-commit-ci

|

||||||

ci:

|

ci:

|

||||||

autofix_prs: true

|

autofix_prs: true

|

||||||

@ -47,6 +47,7 @@ repos:

|

|||||||

additional_dependencies:

|

additional_dependencies:

|

||||||

- mdformat-gfm

|

- mdformat-gfm

|

||||||

- mdformat-black

|

- mdformat-black

|

||||||

|

exclude: 'docs/.*\.md'

|

||||||

# exclude: "README.md|README.zh-CN.md|CONTRIBUTING.md"

|

# exclude: "README.md|README.zh-CN.md|CONTRIBUTING.md"

|

||||||

|

|

||||||

- repo: https://github.com/PyCQA/flake8

|

- repo: https://github.com/PyCQA/flake8

|

||||||

@ -60,7 +61,7 @@ repos:

|

|||||||

hooks:

|

hooks:

|

||||||

- id: codespell

|

- id: codespell

|

||||||

args:

|

args:

|

||||||

- --ignore-words-list=crate,nd,strack,dota,ane,segway,fo

|

- --ignore-words-list=crate,nd,strack,dota,ane,segway,fo,gool,winn

|

||||||

|

|

||||||

- repo: https://github.com/PyCQA/docformatter

|

- repo: https://github.com/PyCQA/docformatter

|

||||||

rev: v1.7.5

|

rev: v1.7.5

|

||||||

|

|||||||

@ -9,8 +9,7 @@ Ultralytics Docs are deployed to [https://docs.ultralytics.com](https://docs.ult

|

|||||||

|

|

||||||

### Install Ultralytics package

|

### Install Ultralytics package

|

||||||

|

|

||||||

To install the ultralytics package in developer mode, you will need to have Git and Python 3 installed on your system.

|

To install the ultralytics package in developer mode, you will need to have Git and Python 3 installed on your system. Then, follow these steps:

|

||||||

Then, follow these steps:

|

|

||||||

|

|

||||||

1. Clone the ultralytics repository to your local machine using Git:

|

1. Clone the ultralytics repository to your local machine using Git:

|

||||||

|

|

||||||

@ -30,16 +29,13 @@ cd ultralytics

|

|||||||

pip install -e ".[dev]"

|

pip install -e ".[dev]"

|

||||||

```

|

```

|

||||||

|

|

||||||

This will install the ultralytics package and its dependencies in developer mode, allowing you to make changes to the

|

This will install the ultralytics package and its dependencies in developer mode, allowing you to make changes to the package code and have them reflected immediately in your Python environment.

|

||||||

package code and have them reflected immediately in your Python environment.

|

|

||||||

|

|

||||||

Note that you may need to use the pip3 command instead of pip if you have multiple versions of Python installed on your

|

Note that you may need to use the pip3 command instead of pip if you have multiple versions of Python installed on your system.

|

||||||

system.

|

|

||||||

|

|

||||||

### Building and Serving Locally

|

### Building and Serving Locally

|

||||||

|

|

||||||

The `mkdocs serve` command is used to build and serve a local version of the MkDocs documentation site. It is typically

|

The `mkdocs serve` command is used to build and serve a local version of the MkDocs documentation site. It is typically used during the development and testing phase of a documentation project.

|

||||||

used during the development and testing phase of a documentation project.

|

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

mkdocs serve

|

mkdocs serve

|

||||||

@ -47,33 +43,23 @@ mkdocs serve

|

|||||||

|

|

||||||

Here is a breakdown of what this command does:

|

Here is a breakdown of what this command does:

|

||||||

|

|

||||||

- `mkdocs`: This is the command-line interface (CLI) for the MkDocs static site generator. It is used to build and serve

|

- `mkdocs`: This is the command-line interface (CLI) for the MkDocs static site generator. It is used to build and serve MkDocs sites.

|

||||||

MkDocs sites.

|

|

||||||

- `serve`: This is a subcommand of the `mkdocs` CLI that tells it to build and serve the documentation site locally.

|

- `serve`: This is a subcommand of the `mkdocs` CLI that tells it to build and serve the documentation site locally.

|

||||||

- `-a`: This flag specifies the hostname and port number to bind the server to. The default value is `localhost:8000`.

|

- `-a`: This flag specifies the hostname and port number to bind the server to. The default value is `localhost:8000`.

|

||||||

- `-t`: This flag specifies the theme to use for the documentation site. The default value is `mkdocs`.

|

- `-t`: This flag specifies the theme to use for the documentation site. The default value is `mkdocs`.

|

||||||

- `-s`: This flag tells the `serve` command to serve the site in silent mode, which means it will not display any log

|

- `-s`: This flag tells the `serve` command to serve the site in silent mode, which means it will not display any log messages or progress updates. When you run the `mkdocs serve` command, it will build the documentation site using the files in the `docs/` directory and serve it at the specified hostname and port number. You can then view the site by going to the URL in your web browser.

|

||||||

messages or progress updates.

|

|

||||||

When you run the `mkdocs serve` command, it will build the documentation site using the files in the `docs/` directory

|

|

||||||

and serve it at the specified hostname and port number. You can then view the site by going to the URL in your web

|

|

||||||

browser.

|

|

||||||

|

|

||||||

While the site is being served, you can make changes to the documentation files and see them reflected in the live site

|

While the site is being served, you can make changes to the documentation files and see them reflected in the live site immediately. This is useful for testing and debugging your documentation before deploying it to a live server.

|

||||||

immediately. This is useful for testing and debugging your documentation before deploying it to a live server.

|

|

||||||

|

|

||||||

To stop the serve command and terminate the local server, you can use the `CTRL+C` keyboard shortcut.

|

To stop the serve command and terminate the local server, you can use the `CTRL+C` keyboard shortcut.

|

||||||

|

|

||||||

### Deploying Your Documentation Site

|

### Deploying Your Documentation Site

|

||||||

|

|

||||||

To deploy your MkDocs documentation site, you will need to choose a hosting provider and a deployment method. Some

|

To deploy your MkDocs documentation site, you will need to choose a hosting provider and a deployment method. Some popular options include GitHub Pages, GitLab Pages, and Amazon S3.

|

||||||

popular options include GitHub Pages, GitLab Pages, and Amazon S3.

|

|

||||||

|

|

||||||

Before you can deploy your site, you will need to configure your `mkdocs.yml` file to specify the remote host and any

|

Before you can deploy your site, you will need to configure your `mkdocs.yml` file to specify the remote host and any other necessary deployment settings.

|

||||||

other necessary deployment settings.

|

|

||||||

|

|

||||||

Once you have configured your `mkdocs.yml` file, you can use the `mkdocs deploy` command to build and deploy your site.

|

Once you have configured your `mkdocs.yml` file, you can use the `mkdocs deploy` command to build and deploy your site. This command will build the documentation site using the files in the `docs/` directory and the specified configuration file and theme, and then deploy the site to the specified remote host.

|

||||||

This command will build the documentation site using the files in the `docs/` directory and the specified configuration

|

|

||||||

file and theme, and then deploy the site to the specified remote host.

|

|

||||||

|

|

||||||

For example, to deploy your site to GitHub Pages using the gh-deploy plugin, you can use the following command:

|

For example, to deploy your site to GitHub Pages using the gh-deploy plugin, you can use the following command:

|

||||||

|

|

||||||

@ -81,10 +67,8 @@ For example, to deploy your site to GitHub Pages using the gh-deploy plugin, you

|

|||||||

mkdocs gh-deploy

|

mkdocs gh-deploy

|

||||||

```

|

```

|

||||||

|

|

||||||

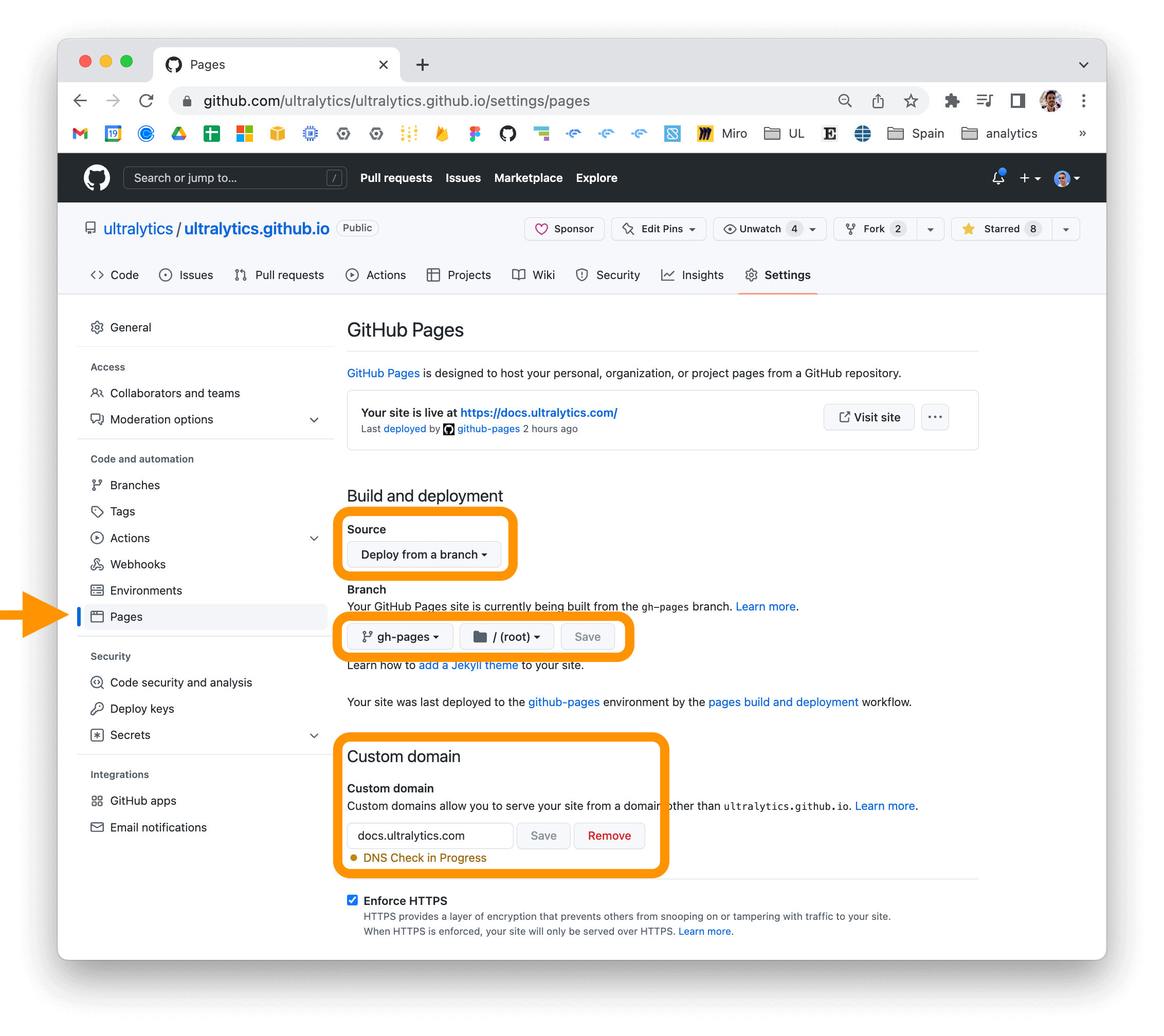

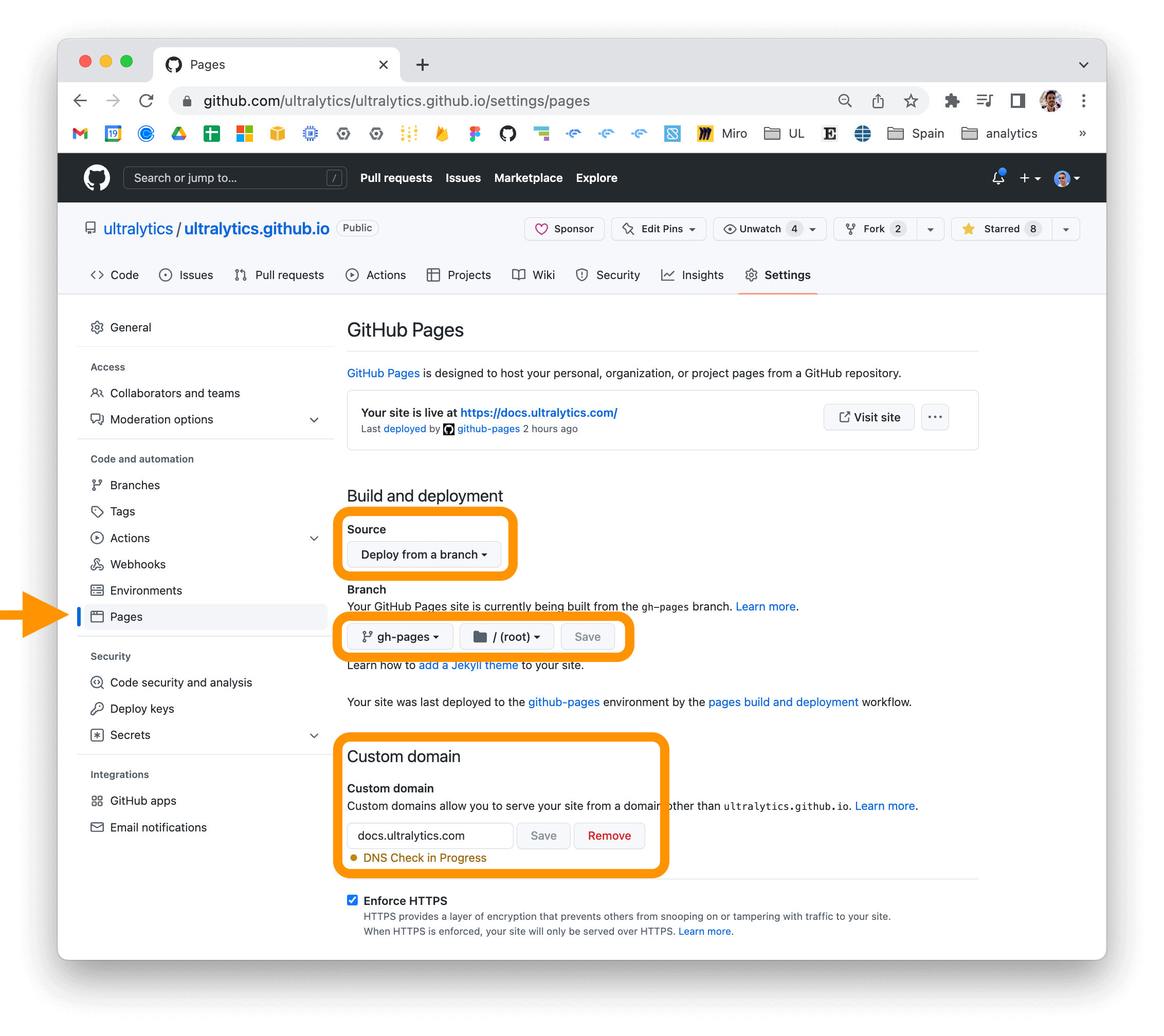

If you are using GitHub Pages, you can set a custom domain for your documentation site by going to the "Settings" page

|

If you are using GitHub Pages, you can set a custom domain for your documentation site by going to the "Settings" page for your repository and updating the "Custom domain" field in the "GitHub Pages" section.

|

||||||

for your repository and updating the "Custom domain" field in the "GitHub Pages" section.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

For more information on deploying your MkDocs documentation site, see

|

For more information on deploying your MkDocs documentation site, see the [MkDocs documentation](https://www.mkdocs.org/user-guide/deploying-your-docs/).

|

||||||

the [MkDocs documentation](https://www.mkdocs.org/user-guide/deploying-your-docs/).

|

|

||||||

|

|||||||

@ -19,7 +19,8 @@ REFERENCE_DIR = ROOT.parent / 'docs/reference'

|

|||||||

|

|

||||||

|

|

||||||

def extract_classes_and_functions(filepath: Path):

|

def extract_classes_and_functions(filepath: Path):

|

||||||

"""Extracts class and function names from a given Python file.

|

"""

|

||||||

|

Extracts class and function names from a given Python file.

|

||||||

|

|

||||||

Args:

|

Args:

|

||||||

filepath (Path): The path to the Python file.

|

filepath (Path): The path to the Python file.

|

||||||

@ -27,9 +28,7 @@ def extract_classes_and_functions(filepath: Path):

|

|||||||

Returns:

|

Returns:

|

||||||

(tuple): A tuple containing lists of class and function names.

|

(tuple): A tuple containing lists of class and function names.

|

||||||

"""

|

"""

|

||||||

with open(filepath, 'r') as file:

|

content = Path(filepath).read_text()

|

||||||

content = file.read()

|

|

||||||

|

|

||||||

class_pattern = r'(?:^|\n)class\s(\w+)(?:\(|:)'

|

class_pattern = r'(?:^|\n)class\s(\w+)(?:\(|:)'

|

||||||

func_pattern = r'(?:^|\n)def\s(\w+)\('

|

func_pattern = r'(?:^|\n)def\s(\w+)\('

|

||||||

|

|

||||||
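The two regular expressions in this hunk can be tried outside the docs build script. The sketch below is illustrative only: the patterns are copied from the diff, but `extract_names` and `SAMPLE_SOURCE` are hypothetical names that do not appear in the commit. It shows that the patterns pick up only definitions that start at the beginning of a line, so indented methods are skipped.

```python
import re

# Patterns copied from the diff; `class`/`def` must start a line to match.
CLASS_PATTERN = r'(?:^|\n)class\s(\w+)(?:\(|:)'
FUNC_PATTERN = r'(?:^|\n)def\s(\w+)\('

# Hypothetical module source, used only for this illustration.
SAMPLE_SOURCE = '''class Trainer(Base):
    def fit(self):
        pass

class Config:
    pass

def build_model(cfg):
    return cfg
'''


def extract_names(source: str):
    """Return (classes, functions) defined at column 0 of `source`."""
    classes = re.findall(CLASS_PATTERN, source)
    functions = re.findall(FUNC_PATTERN, source)
    return classes, functions


classes, functions = extract_names(SAMPLE_SOURCE)
print(classes)    # ['Trainer', 'Config']
print(functions)  # ['build_model']
```

The indented `fit` method is not reported because nothing in the pattern allows whitespace between the line start and `def`.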

@ -40,7 +39,8 @@ def extract_classes_and_functions(filepath: Path):

|

|||||||

|

|

||||||

|

|

||||||

def create_markdown(py_filepath: Path, module_path: str, classes: list, functions: list):

|

def create_markdown(py_filepath: Path, module_path: str, classes: list, functions: list):

|

||||||

"""Creates a Markdown file containing the API reference for the given Python module.

|

"""

|

||||||

|

Creates a Markdown file containing the API reference for the given Python module.

|

||||||

|

|

||||||

Args:

|

Args:

|

||||||

py_filepath (Path): The path to the Python file.

|

py_filepath (Path): The path to the Python file.

|

||||||

@ -53,7 +53,7 @@ def create_markdown(py_filepath: Path, module_path: str, classes: list, function

|

|||||||

# Read existing content and keep header content between first two ---

|

# Read existing content and keep header content between first two ---

|

||||||

header_content = ''

|

header_content = ''

|

||||||

if md_filepath.exists():

|

if md_filepath.exists():

|

||||||

with open(md_filepath, 'r') as file:

|

with open(md_filepath) as file:

|

||||||

existing_content = file.read()

|

existing_content = file.read()

|

||||||

header_parts = existing_content.split('---')

|

header_parts = existing_content.split('---')

|

||||||

for part in header_parts:

|

for part in header_parts:

|

||||||

@ -61,11 +61,13 @@ def create_markdown(py_filepath: Path, module_path: str, classes: list, function

|

|||||||

header_content += f'---{part}---\n\n'

|

header_content += f'---{part}---\n\n'

|

||||||

|

|

||||||

module_name = module_path.replace('.__init__', '')

|

module_name = module_path.replace('.__init__', '')

|

||||||

module_path = module_path.replace(".", "/")

|

module_path = module_path.replace('.', '/')

|

||||||

url = f'https://github.com/ultralytics/ultralytics/blob/main/{module_path}.py'

|

url = f'https://github.com/ultralytics/ultralytics/blob/main/{module_path}.py'

|

||||||

title_content = (f'# Reference for `{module_path}.py`\n\n'

|

title_content = (

|

||||||

f'!!! note\n\n'

|

f'# Reference for `{module_path}.py`\n\n'

|

||||||

f' Full source code for this file is available at [{url}]({url}). Help us fix any issues you see by submitting a [Pull Request](https://docs.ultralytics.com/help/contributing/) 🛠️. Thank you 🙏!\n\n')

|

f'!!! note\n\n'

|

||||||

|

f' Full source code for this file is available at [{url}]({url}). Help us fix any issues you see by submitting a [Pull Request](https://docs.ultralytics.com/help/contributing/) 🛠️. Thank you 🙏!\n\n'

|

||||||

|

)

|

||||||

md_content = [f'---\n## ::: {module_name}.{class_name}\n<br><br>\n' for class_name in classes]

|

md_content = [f'---\n## ::: {module_name}.{class_name}\n<br><br>\n' for class_name in classes]

|

||||||

md_content.extend(f'---\n## ::: {module_name}.{func_name}\n<br><br>\n' for func_name in functions)

|

md_content.extend(f'---\n## ::: {module_name}.{func_name}\n<br><br>\n' for func_name in functions)

|

||||||

md_content = header_content + title_content + '\n'.join(md_content)

|

md_content = header_content + title_content + '\n'.join(md_content)

|

||||||
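The string handling in this hunk can be sketched in isolation. This is a minimal sketch, not the script's actual function: `reference_page` is a hypothetical helper, the note text is abbreviated, the front-matter header is left out, and the example inputs are made up.

```python
def reference_page(module_path: str, classes: list, functions: list) -> str:
    """Hypothetical helper mirroring the string handling shown in the diff."""
    module_name = module_path.replace('.__init__', '')  # dotted name placed after ':::'
    file_path = module_path.replace('.', '/')  # slash-separated path used in the URL
    url = f'https://github.com/ultralytics/ultralytics/blob/main/{file_path}.py'
    # Abbreviated version of the note text from the diff.
    title = (
        f'# Reference for `{file_path}.py`\n\n'
        f'!!! note\n\n'
        f'    Full source code for this file is available at [{url}]({url}).\n\n'
    )
    body = [f'---\n## ::: {module_name}.{name}\n<br><br>\n' for name in classes]
    body.extend(f'---\n## ::: {module_name}.{name}\n<br><br>\n' for name in functions)
    return title + '\n'.join(body)


# Example inputs are illustrative only.
page = reference_page('ultralytics.utils.loss', ['SegLoss'], ['helper'])
print(page)
```

Each class and function gets its own `:::` heading under the title and note.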

@ -80,7 +82,8 @@ def create_markdown(py_filepath: Path, module_path: str, classes: list, function

|

|||||||

|

|

||||||

|

|

||||||

def nested_dict():

|

def nested_dict():

|

||||||

"""Creates and returns a nested defaultdict.

|

"""

|

||||||

|

Creates and returns a nested defaultdict.

|

||||||

|

|

||||||

Returns:

|

Returns:

|

||||||

(defaultdict): A nested defaultdict object.

|

(defaultdict): A nested defaultdict object.

|

||||||

@ -89,7 +92,8 @@ def nested_dict():

|

|||||||

|

|

||||||

|

|

||||||

def sort_nested_dict(d: dict):

|

def sort_nested_dict(d: dict):

|

||||||

"""Sorts a nested dictionary recursively.

|

"""

|

||||||

|

Sorts a nested dictionary recursively.

|

||||||

|

|

||||||

Args:

|

Args:

|

||||||

d (dict): The dictionary to sort.

|

d (dict): The dictionary to sort.

|

||||||

@ -97,14 +101,12 @@ def sort_nested_dict(d: dict):

|

|||||||

Returns:

|

Returns:

|

||||||

(dict): The sorted dictionary.

|

(dict): The sorted dictionary.

|

||||||

"""

|

"""

|

||||||

return {

|

return {key: sort_nested_dict(value) if isinstance(value, dict) else value for key, value in sorted(d.items())}

|

||||||

key: sort_nested_dict(value) if isinstance(value, dict) else value

|

|

||||||

for key, value in sorted(d.items())

|

|

||||||

}

|

|

||||||
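The one-line form of `sort_nested_dict` on the new side behaves the same as the multi-line form it replaces. The sketch below uses the expression from the diff on a made-up navigation tree (`nav` is hypothetical) to show that keys are sorted at every nesting level.

```python
def sort_nested_dict(d: dict) -> dict:
    """Recursively rebuild `d` with keys in sorted order (expression taken from the diff)."""
    return {key: sort_nested_dict(value) if isinstance(value, dict) else value for key, value in sorted(d.items())}


# Hypothetical navigation tree, for illustration only.
nav = {'utils': {'ops': 'ops.md', 'loss': 'loss.md'}, 'engine': {'trainer': 'trainer.md'}}
sorted_nav = sort_nested_dict(nav)
print(list(sorted_nav))           # ['engine', 'utils']
print(list(sorted_nav['utils']))  # ['loss', 'ops']
```

Because dictionary keys are unique, `sorted(d.items())` orders the pairs by key alone and never has to compare the values.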

|

|

||||||

|

|

||||||

def create_nav_menu_yaml(nav_items: list):

|

def create_nav_menu_yaml(nav_items: list):

|

||||||

"""Creates a YAML file for the navigation menu based on the provided list of items.

|

"""

|

||||||

|

Creates a YAML file for the navigation menu based on the provided list of items.

|

||||||

|

|

||||||

Args:

|

Args:

|

||||||

nav_items (list): A list of relative file paths to Markdown files for the navigation menu.

|

nav_items (list): A list of relative file paths to Markdown files for the navigation menu.

|

||||||

|

|||||||

@ -8,11 +8,7 @@ keywords: Ultralytics, COCO8 dataset, object detection, model testing, dataset c

|

|||||||

|

|

||||||

## Introduction

|

## Introduction

|

||||||

|

|

||||||

[Ultralytics](https://ultralytics.com) COCO8 is a small, but versatile object detection dataset composed of the first 8

|

[Ultralytics](https://ultralytics.com) COCO8 is a small, but versatile object detection dataset composed of the first 8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and debugging object detection models, or for experimenting with new detection approaches. With 8 images, it is small enough to be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before training larger datasets.

|

||||||

images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and debugging

|

|

||||||

object detection models, or for experimenting with new detection approaches. With 8 images, it is small enough to be

|

|

||||||

easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before training

|

|

||||||

larger datasets.

|

|

||||||

|

|

||||||

This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

|

This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

|

||||||

and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

||||||

|

|||||||

@ -8,11 +8,7 @@ keywords: Ultralytics, YOLOv8, pose detection, COCO8-Pose dataset, dataset, mode

|

|||||||

|

|

||||||

## Introduction

|

## Introduction

|

||||||

|

|

||||||

[Ultralytics](https://ultralytics.com) COCO8-Pose is a small, but versatile pose detection dataset composed of the first

|

[Ultralytics](https://ultralytics.com) COCO8-Pose is a small, but versatile pose detection dataset composed of the first 8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and debugging object detection models, or for experimenting with new detection approaches. With 8 images, it is small enough to be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before training larger datasets.

|

||||||

8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and

|

|

||||||

debugging object detection models, or for experimenting with new detection approaches. With 8 images, it is small enough

|

|

||||||

to be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before

|

|

||||||

training larger datasets.

|

|

||||||

|

|

||||||

This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

|

This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

|

||||||

and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

||||||

|

|||||||

@ -34,7 +34,7 @@ Format with Dim = 3

|

|||||||

<class-index> <x> <y> <width> <height> <px1> <py1> <p1-visibility> <px2> <py2> <p2-visibility> <pxn> <pyn> <p2-visibility>

|

<class-index> <x> <y> <width> <height> <px1> <py1> <p1-visibility> <px2> <py2> <p2-visibility> <pxn> <pyn> <p2-visibility>

|

||||||

```

|

```

|

||||||

|

|

||||||

In this format, `<class-index>` is the index of the class for the object,`<x> <y> <width> <height>` are coordinates of boudning box, and `<px1> <py1> <px2> <py2> ... <pxn> <pyn>` are the pixel coordinates of the keypoints. The coordinates are separated by spaces.

|

In this format, `<class-index>` is the index of the class for the object,`<x> <y> <width> <height>` are coordinates of bounding box, and `<px1> <py1> <px2> <py2> ... <pxn> <pyn>` are the pixel coordinates of the keypoints. The coordinates are separated by spaces.

|

||||||
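The label layout described in this paragraph can be sketched as a small parser. This is an assumption-laden sketch, not Ultralytics code: `PoseLabel` and `parse_pose_label` are hypothetical names, and the sample line is made up to follow the Dim = 3 layout (class index, four box values, then one `x y visibility` triple per keypoint).

```python
from typing import NamedTuple


class PoseLabel(NamedTuple):
    class_index: int
    box: tuple       # (x, y, width, height)
    keypoints: list  # one tuple of `dim` values per keypoint


def parse_pose_label(line: str, dim: int = 3) -> PoseLabel:
    """Split one space-separated label line into class, box and keypoints."""
    values = line.split()
    class_index = int(values[0])
    box = tuple(float(v) for v in values[1:5])
    flat = [float(v) for v in values[5:]]
    if len(flat) % dim:
        raise ValueError(f'expected keypoint values in groups of {dim}, got {len(flat)}')
    keypoints = [tuple(flat[i:i + dim]) for i in range(0, len(flat), dim)]
    return PoseLabel(class_index, box, keypoints)


# Made-up label line with two keypoints, for illustration only.
label = parse_pose_label('0 0.5 0.5 0.2 0.4 0.45 0.35 2 0.55 0.35 1')
print(label.class_index)  # 0
print(label.box)          # (0.5, 0.5, 0.2, 0.4)
print(label.keypoints)    # [(0.45, 0.35, 2.0), (0.55, 0.35, 1.0)]
```

Passing `dim=2` would read the keypoints as plain `x y` pairs without a visibility value.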

|

|

||||||

### Dataset YAML format

|

### Dataset YAML format

|

||||||

|

|

||||||

|

|||||||

@ -8,11 +8,7 @@ keywords: COCO8-Seg dataset, Ultralytics, YOLOv8, instance segmentation, dataset

|

|||||||

|

|

||||||

## Introduction

|

## Introduction

|

||||||

|

|

||||||

[Ultralytics](https://ultralytics.com) COCO8-Seg is a small, but versatile instance segmentation dataset composed of the

|

[Ultralytics](https://ultralytics.com) COCO8-Seg is a small, but versatile instance segmentation dataset composed of the first 8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and debugging segmentation models, or for experimenting with new detection approaches. With 8 images, it is small enough to be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before training larger datasets.

|

||||||

first 8 images of the COCO train 2017 set, 4 for training and 4 for validation. This dataset is ideal for testing and

|

|

||||||

debugging segmentation models, or for experimenting with new detection approaches. With 8 images, it is small enough to

|

|

||||||

be easily manageable, yet diverse enough to test training pipelines for errors and act as a sanity check before training

|

|

||||||

larger datasets.

|

|

||||||

|

|

||||||

This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

|

This dataset is intended for use with Ultralytics [HUB](https://hub.ultralytics.com)

|

||||||

and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

and [YOLOv8](https://github.com/ultralytics/ultralytics).

|

||||||

|

|||||||

@ -8,8 +8,7 @@ keywords: Ultralytics, YOLO, multi-object tracking, datasets, detection, segment

|

|||||||

|

|

||||||

## Dataset Format (Coming Soon)

|

## Dataset Format (Coming Soon)

|

||||||

|

|

||||||

Multi-Object Detector doesn't need standalone training and directly supports pre-trained detection, segmentation or Pose models.

|

Multi-Object Detector doesn't need standalone training and directly supports pre-trained detection, segmentation or Pose models. Support for training trackers alone is coming soon

|

||||||

Support for training trackers alone is coming soon

|

|

||||||

|

|

||||||

## Usage

|

## Usage

|

||||||

|

|

||||||

|

|||||||

@ -50,8 +50,8 @@ By integrating with Codecov, we aim to maintain and improve the quality of our c

|

|||||||

|

|

||||||

To quickly get a glimpse of the code coverage status of the `ultralytics` python package, we have included a badge and and sunburst visual of the `ultralytics` coverage results. These images show the percentage of code covered by our tests, offering an at-a-glance metric of our testing efforts. For full details please see https://codecov.io/github/ultralytics/ultralytics.

|

To quickly get a glimpse of the code coverage status of the `ultralytics` python package, we have included a badge and and sunburst visual of the `ultralytics` coverage results. These images show the percentage of code covered by our tests, offering an at-a-glance metric of our testing efforts. For full details please see https://codecov.io/github/ultralytics/ultralytics.

|

||||||

|

|

||||||

| Repository | Code Coverage |

|

| Repository | Code Coverage |

|

||||||

|-----------------------------------------------------------|----------------------------------------------------------------------|

|

|-----------------------------------------------------------|---------------------------------------------------------------------------------------------------------------------------------------------------------|

|

||||||

| [ultralytics](https://github.com/ultralytics/ultralytics) | [](https://codecov.io/gh/ultralytics/ultralytics) |

|

| [ultralytics](https://github.com/ultralytics/ultralytics) | [](https://codecov.io/gh/ultralytics/ultralytics) |

|

||||||

|

|

||||||

In the sunburst graphic below, the inner-most circle is the entire project, moving away from the center are folders then, finally, a single file. The size and color of each slice is representing the number of statements and the coverage, respectively.

|

In the sunburst graphic below, the inner-most circle is the entire project, moving away from the center are folders then, finally, a single file. The size and color of each slice is representing the number of statements and the coverage, respectively.

|

||||||

@ -59,4 +59,3 @@ In the sunburst graphic below, the inner-most circle is the entire project, movi

|

|||||||

<a href="https://codecov.io/github/ultralytics/ultralytics">

|

<a href="https://codecov.io/github/ultralytics/ultralytics">

|

||||||

<img src="https://codecov.io/gh/ultralytics/ultralytics/branch/main/graphs/sunburst.svg?token=HHW7IIVFVY" alt="Ultralytics Codecov Image">

|

<img src="https://codecov.io/gh/ultralytics/ultralytics/branch/main/graphs/sunburst.svg?token=HHW7IIVFVY" alt="Ultralytics Codecov Image">

|

||||||

</a>

|

</a>

|

||||||

|

|

||||||

|

|||||||

@ -5,66 +5,27 @@ keywords: Ultralytics, Contributor License Agreement, Open Source Software, Cont

|

|||||||

|

|

||||||

# Ultralytics Individual Contributor License Agreement

|

# Ultralytics Individual Contributor License Agreement

|

||||||

|

|

||||||

Thank you for your interest in contributing to open source software projects (“Projects”) made available by Ultralytics

|

Thank you for your interest in contributing to open source software projects (“Projects”) made available by Ultralytics SE or its affiliates (“Ultralytics”). This Individual Contributor License Agreement (“Agreement”) sets out the terms governing any source code, object code, bug fixes, configuration changes, tools, specifications, documentation, data, materials, feedback, information or other works of authorship that you submit or have submitted, in any form and in any manner, to Ultralytics in respect of any of the Projects (collectively “Contributions”). If you have any questions respecting this Agreement, please contact hello@ultralytics.com.

|

||||||

SE or its affiliates (“Ultralytics”). This Individual Contributor License Agreement (“Agreement”) sets out the terms

|

|

||||||

governing any source code, object code, bug fixes, configuration changes, tools, specifications, documentation, data,

|

|

||||||

materials, feedback, information or other works of authorship that you submit or have submitted, in any form and in any

|

|

||||||

manner, to Ultralytics in respect of any of the Projects (collectively “Contributions”). If you have any questions

|

|

||||||

respecting this Agreement, please contact hello@ultralytics.com.

|

|

||||||

|

|

||||||

You agree that the following terms apply to all of your past, present and future Contributions. Except for the licenses

|

You agree that the following terms apply to all of your past, present and future Contributions. Except for the licenses granted in this Agreement, you retain all of your right, title and interest in and to your Contributions.

|

||||||

granted in this Agreement, you retain all of your right, title and interest in and to your Contributions.

|

|

||||||

|

|

||||||

**Copyright License.** You hereby grant, and agree to grant, to Ultralytics a non-exclusive, perpetual, irrevocable,

|

**Copyright License.** You hereby grant, and agree to grant, to Ultralytics a non-exclusive, perpetual, irrevocable, worldwide, fully-paid, royalty-free, transferable copyright license to reproduce, prepare derivative works of, publicly display, publicly perform, and distribute your Contributions and such derivative works, with the right to sublicense the foregoing rights through multiple tiers of sublicensees.

|

||||||

worldwide, fully-paid, royalty-free, transferable copyright license to reproduce, prepare derivative works of, publicly

|

|

||||||

display, publicly perform, and distribute your Contributions and such derivative works, with the right to sublicense the

|

|

||||||

foregoing rights through multiple tiers of sublicensees.

|

|

||||||

|

|

||||||

**Patent License.** You hereby grant, and agree to grant, to Ultralytics a non-exclusive, perpetual, irrevocable,

|

**Patent License.** You hereby grant, and agree to grant, to Ultralytics a non-exclusive, perpetual, irrevocable, worldwide, fully-paid, royalty-free, transferable patent license to make, have made, use, offer to sell, sell, import, and otherwise transfer your Contributions, where such license applies only to those patent claims licensable by you that are necessarily infringed by your Contributions alone or by combination of your Contributions with the Project to which such Contributions were submitted, with the right to sublicense the foregoing rights through multiple tiers of sublicensees.

|

||||||

worldwide, fully-paid, royalty-free, transferable patent license to make, have made, use, offer to sell, sell,

|

|

||||||

import, and otherwise transfer your Contributions, where such license applies only to those patent claims

|

|

||||||

licensable by you that are necessarily infringed by your Contributions alone or by combination of your

|

|

||||||

Contributions with the Project to which such Contributions were submitted, with the right to sublicense the

|

|

||||||

foregoing rights through multiple tiers of sublicensees.

|

|

||||||

|

|

||||||

**Moral Rights.** To the fullest extent permitted under applicable law, you hereby waive, and agree not to

|

**Moral Rights.** To the fullest extent permitted under applicable law, you hereby waive, and agree not to assert, all of your “moral rights” in or relating to your Contributions for the benefit of Ultralytics, its assigns, and their respective direct and indirect sublicensees.

|

||||||

assert, all of your “moral rights” in or relating to your Contributions for the benefit of Ultralytics, its assigns, and

|

|

||||||

their respective direct and indirect sublicensees.

|

|

||||||

|

|

||||||

**Third Party Content/Rights.** If your Contribution includes or is based on any source code, object code, bug

|

**Third Party Content/Rights.

|

||||||

fixes, configuration changes, tools, specifications, documentation, data, materials, feedback, information or

|

** If your Contribution includes or is based on any source code, object code, bug fixes, configuration changes, tools, specifications, documentation, data, materials, feedback, information or other works of authorship that were not authored by you (“Third Party Content”) or if you are aware of any third party intellectual property or proprietary rights associated with your Contribution (“Third Party Rights”), then you agree to include with the submission of your Contribution full details respecting such Third Party Content and Third Party Rights, including, without limitation, identification of which aspects of your Contribution contain Third Party Content or are associated with Third Party Rights, the owner/author of the Third Party Content and Third Party Rights, where you obtained the Third Party Content, and any applicable third party license terms or restrictions respecting the Third Party Content and Third Party Rights. For greater certainty, the foregoing obligations respecting the identification of Third Party Content and Third Party Rights do not apply to any portion of a Project that is incorporated into your Contribution to that same Project.

|

||||||

other works of authorship that were not authored by you (“Third Party Content”) or if you are aware of any

|

|

||||||

third party intellectual property or proprietary rights associated with your Contribution (“Third Party Rights”),

|

|

||||||

then you agree to include with the submission of your Contribution full details respecting such Third Party

|

|

||||||

Content and Third Party Rights, including, without limitation, identification of which aspects of your

|

|

||||||

Contribution contain Third Party Content or are associated with Third Party Rights, the owner/author of the

|

|

||||||

Third Party Content and Third Party Rights, where you obtained the Third Party Content, and any applicable

|

|

||||||

third party license terms or restrictions respecting the Third Party Content and Third Party Rights. For greater

|

|

||||||

certainty, the foregoing obligations respecting the identification of Third Party Content and Third Party Rights

|

|

||||||

do not apply to any portion of a Project that is incorporated into your Contribution to that same Project.

|

|

||||||

|

|

||||||

**Representations.** You represent that, other than the Third Party Content and Third Party Rights identified by

|

**Representations.** You represent that, other than the Third Party Content and Third Party Rights identified by you in accordance with this Agreement, you are the sole author of your Contributions and are legally entitled to grant the foregoing licenses and waivers in respect of your Contributions. If your Contributions were created in the course of your employment with your past or present employer(s), you represent that such employer(s) has authorized you to make your Contributions on behalf of such employer(s) or such employer

|

||||||

you in accordance with this Agreement, you are the sole author of your Contributions and are legally entitled

|

|

||||||

to grant the foregoing licenses and waivers in respect of your Contributions. If your Contributions were

|

|

||||||

created in the course of your employment with your past or present employer(s), you represent that such

|

|

||||||

employer(s) has authorized you to make your Contributions on behalf of such employer(s) or such employer

|

|

||||||

(s) has waived all of their right, title or interest in or to your Contributions.

|

(s) has waived all of their right, title or interest in or to your Contributions.

|

||||||

|

|

||||||

**Disclaimer.** To the fullest extent permitted under applicable law, your Contributions are provided on an "as is"

|

**Disclaimer.** To the fullest extent permitted under applicable law, your Contributions are provided on an "as is"

|

||||||

basis, without any warranties or conditions, express or implied, including, without limitation, any implied

|

basis, without any warranties or conditions, express or implied, including, without limitation, any implied warranties or conditions of non-infringement, merchantability or fitness for a particular purpose. You are not required to provide support for your Contributions, except to the extent you desire to provide support.

|

||||||

warranties or conditions of non-infringement, merchantability or fitness for a particular purpose. You are not

|

|

||||||

required to provide support for your Contributions, except to the extent you desire to provide support.

|

|

||||||

|

|

||||||

**No Obligation.** You acknowledge that Ultralytics is under no obligation to use or incorporate your Contributions

|

**No Obligation.** You acknowledge that Ultralytics is under no obligation to use or incorporate your Contributions into any of the Projects. The decision to use or incorporate your Contributions into any of the Projects will be made at the sole discretion of Ultralytics or its authorized delegates.

|

||||||

into any of the Projects. The decision to use or incorporate your Contributions into any of the Projects will be

|

|

||||||

made at the sole discretion of Ultralytics or its authorized delegates.

|

|

||||||

|

|

||||||

**Disputes.** This Agreement shall be governed by and construed in accordance with the laws of the State of

|

**Disputes.** This Agreement shall be governed by and construed in accordance with the laws of the State of New York, United States of America, without giving effect to its principles or rules regarding conflicts of laws, other than such principles directing application of New York law. The parties hereby submit to venue in, and jurisdiction of the courts located in New York, New York for purposes relating to this Agreement. In the event that any of the provisions of this Agreement shall be held by a court or other tribunal of competent jurisdiction to be unenforceable, the remaining portions hereof shall remain in full force and effect.

|

||||||

New York, United States of America, without giving effect to its principles or rules regarding conflicts of laws,

|

|

||||||

other than such principles directing application of New York law. The parties hereby submit to venue in, and

|

|

||||||

jurisdiction of the courts located in New York, New York for purposes relating to this Agreement. In the event

|

|

||||||

that any of the provisions of this Agreement shall be held by a court or other tribunal of competent jurisdiction

|

|

||||||

to be unenforceable, the remaining portions hereof shall remain in full force and effect.

|

|

||||||

|

|

||||||

**Assignment.** You agree that Ultralytics may assign this Agreement, and all of its rights, obligations and licenses

|

**Assignment.** You agree that Ultralytics may assign this Agreement, and all of its rights, obligations and licenses hereunder.

|

||||||

hereunder.

|

|

||||||

|

|||||||

@ -8,124 +8,78 @@ keywords: Ultralytics, code of conduct, community, contribution, behavior guidel

|

|||||||

|

|

||||||

## Our Pledge

|

## Our Pledge

|

||||||

|

|

||||||

We as members, contributors, and leaders pledge to make participation in our

|

We as members, contributors, and leaders pledge to make participation in our community a harassment-free experience for everyone, regardless of age, body size, visible or invisible disability, ethnicity, sex characteristics, gender identity and expression, level of experience, education, socio-economic status, nationality, personal appearance, race, religion, or sexual identity and orientation.

|

||||||

community a harassment-free experience for everyone, regardless of age, body

|

|

||||||

size, visible or invisible disability, ethnicity, sex characteristics, gender

|

|

||||||

identity and expression, level of experience, education, socio-economic status,

|

|

||||||

nationality, personal appearance, race, religion, or sexual identity

|

|

||||||

and orientation.

|

|

||||||

|

|

||||||

We pledge to act and interact in ways that contribute to an open, welcoming,

|

We pledge to act and interact in ways that contribute to an open, welcoming, diverse, inclusive, and healthy community.

|

||||||

diverse, inclusive, and healthy community.

|

|

||||||

|

|

||||||

## Our Standards

|

## Our Standards

|

||||||

|

|

||||||

Examples of behavior that contributes to a positive environment for our

|

Examples of behavior that contributes to a positive environment for our community include:

|

||||||

community include:

|

|

||||||

|

|

||||||

- Demonstrating empathy and kindness toward other people

|

- Demonstrating empathy and kindness toward other people

|

||||||

- Being respectful of differing opinions, viewpoints, and experiences

|

- Being respectful of differing opinions, viewpoints, and experiences

|

||||||

- Giving and gracefully accepting constructive feedback

|

- Giving and gracefully accepting constructive feedback

|

||||||

- Accepting responsibility and apologizing to those affected by our mistakes,

|

- Accepting responsibility and apologizing to those affected by our mistakes, and learning from the experience

|

||||||

and learning from the experience

|

- Focusing on what is best not just for us as individuals, but for the overall community

|

||||||

- Focusing on what is best not just for us as individuals, but for the

|

|

||||||

overall community

|

|

||||||

|

|

||||||

Examples of unacceptable behavior include:

|

Examples of unacceptable behavior include:

|

||||||

|

|

||||||

- The use of sexualized language or imagery, and sexual attention or

|

- The use of sexualized language or imagery, and sexual attention or advances of any kind

|

||||||

advances of any kind

|

|

||||||

- Trolling, insulting or derogatory comments, and personal or political attacks

|

- Trolling, insulting or derogatory comments, and personal or political attacks

|

||||||

- Public or private harassment

|

- Public or private harassment

|

||||||

- Publishing others' private information, such as a physical or email

|

- Publishing others' private information, such as a physical or email address, without their explicit permission

|

||||||

address, without their explicit permission

|

- Other conduct which could reasonably be considered inappropriate in a professional setting

|

||||||

- Other conduct which could reasonably be considered inappropriate in a

|

|

||||||

professional setting

|

|

||||||

|

|

||||||

## Enforcement Responsibilities

|

## Enforcement Responsibilities

|

||||||

|

|

||||||

Community leaders are responsible for clarifying and enforcing our standards of

|

Community leaders are responsible for clarifying and enforcing our standards of acceptable behavior and will take appropriate and fair corrective action in response to any behavior that they deem inappropriate, threatening, offensive, or harmful.

|

||||||

acceptable behavior and will take appropriate and fair corrective action in

|

|

||||||

response to any behavior that they deem inappropriate, threatening, offensive,

|

|

||||||

or harmful.

|

|

||||||

|

|

||||||

Community leaders have the right and responsibility to remove, edit, or reject

|

Community leaders have the right and responsibility to remove, edit, or reject comments, commits, code, wiki edits, issues, and other contributions that are not aligned to this Code of Conduct, and will communicate reasons for moderation decisions when appropriate.

|

||||||

comments, commits, code, wiki edits, issues, and other contributions that are

|

|

||||||

not aligned to this Code of Conduct, and will communicate reasons for moderation

|

|

||||||

decisions when appropriate.

|

|

||||||

|

|

||||||

## Scope

|

## Scope

|

||||||

|

|

||||||

This Code of Conduct applies within all community spaces, and also applies when

|

This Code of Conduct applies within all community spaces, and also applies when an individual is officially representing the community in public spaces. Examples of representing our community include using an official e-mail address, posting via an official social media account, or acting as an appointed representative at an online or offline event.

|

||||||

an individual is officially representing the community in public spaces.

|

|

||||||

Examples of representing our community include using an official e-mail address,

|

|

||||||

posting via an official social media account, or acting as an appointed

|

|

||||||

representative at an online or offline event.

|

|

||||||

|

|

||||||

## Enforcement

|

## Enforcement

|

||||||

|

|

||||||

Instances of abusive, harassing, or otherwise unacceptable behavior may be

|

Instances of abusive, harassing, or otherwise unacceptable behavior may be reported to the community leaders responsible for enforcement at hello@ultralytics.com. All complaints will be reviewed and investigated promptly and fairly.

|

||||||

reported to the community leaders responsible for enforcement at

|

|

||||||

hello@ultralytics.com.

|

|

||||||

All complaints will be reviewed and investigated promptly and fairly.

|

|

||||||

|

|

||||||

All community leaders are obligated to respect the privacy and security of the

|

All community leaders are obligated to respect the privacy and security of the reporter of any incident.

|

||||||

reporter of any incident.

|

|

||||||

|

|

||||||

## Enforcement Guidelines

|

## Enforcement Guidelines

|

||||||

|

|

||||||

Community leaders will follow these Community Impact Guidelines in determining

|

Community leaders will follow these Community Impact Guidelines in determining the consequences for any action they deem in violation of this Code of Conduct:

|

||||||

the consequences for any action they deem in violation of this Code of Conduct:

|

|

||||||

|

|

||||||

### 1. Correction

|

### 1. Correction

|

||||||

|

|

||||||

**Community Impact**: Use of inappropriate language or other behavior deemed

|

**Community Impact**: Use of inappropriate language or other behavior deemed unprofessional or unwelcome in the community.

|

||||||

unprofessional or unwelcome in the community.

|

|

||||||

|

|

||||||

**Consequence**: A private, written warning from community leaders, providing

|

**Consequence**: A private, written warning from community leaders, providing clarity around the nature of the violation and an explanation of why the behavior was inappropriate. A public apology may be requested.

|

||||||

clarity around the nature of the violation and an explanation of why the

|

|

||||||

behavior was inappropriate. A public apology may be requested.

|

|

||||||

|

|

||||||

### 2. Warning

|

### 2. Warning

|

||||||

|

|

||||||

**Community Impact**: A violation through a single incident or series

|

**Community Impact**: A violation through a single incident or series of actions.

|

||||||

of actions.

|

|

||||||

|

|

||||||

**Consequence**: A warning with consequences for continued behavior. No

|

**Consequence**: A warning with consequences for continued behavior. No interaction with the people involved, including unsolicited interaction with those enforcing the Code of Conduct, for a specified period of time. This includes avoiding interactions in community spaces as well as external channels like social media. Violating these terms may lead to a temporary or permanent ban.

|

||||||

interaction with the people involved, including unsolicited interaction with

|

|

||||||

those enforcing the Code of Conduct, for a specified period of time. This

|

|

||||||

includes avoiding interactions in community spaces as well as external channels

|

|

||||||

like social media. Violating these terms may lead to a temporary or

|

|

||||||

permanent ban.

|

|

||||||

|

|

||||||

### 3. Temporary Ban

|

### 3. Temporary Ban

|

||||||

|

|

||||||

**Community Impact**: A serious violation of community standards, including

|

**Community Impact**: A serious violation of community standards, including sustained inappropriate behavior.

|

||||||

sustained inappropriate behavior.

|

|

||||||

|

|

||||||

**Consequence**: A temporary ban from any sort of interaction or public

|

**Consequence**: A temporary ban from any sort of interaction or public communication with the community for a specified period of time. No public or private interaction with the people involved, including unsolicited interaction with those enforcing the Code of Conduct, is allowed during this period. Violating these terms may lead to a permanent ban.

|

||||||

communication with the community for a specified period of time. No public or

|

|

||||||

private interaction with the people involved, including unsolicited interaction

|

|

||||||

with those enforcing the Code of Conduct, is allowed during this period.

|

|

||||||

Violating these terms may lead to a permanent ban.

|

|

||||||

|

|

||||||

### 4. Permanent Ban

|

### 4. Permanent Ban

|

||||||

|

|

||||||

**Community Impact**: Demonstrating a pattern of violation of community

|

**Community Impact**: Demonstrating a pattern of violation of community standards, including sustained inappropriate behavior, harassment of an individual, or aggression toward or disparagement of classes of individuals.

|

||||||

standards, including sustained inappropriate behavior, harassment of an

|

|

||||||

individual, or aggression toward or disparagement of classes of individuals.

|

|

||||||

|

|

||||||

**Consequence**: A permanent ban from any sort of public interaction within

|

**Consequence**: A permanent ban from any sort of public interaction within the community.

|

||||||

the community.

|

|

||||||

|

|

||||||

## Attribution

|

## Attribution

|

||||||

|

|

||||||

This Code of Conduct is adapted from the [Contributor Covenant][homepage],

|

This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 2.0, available at

|

||||||

version 2.0, available at

|

|

||||||

https://www.contributor-covenant.org/version/2/0/code_of_conduct.html.

|

https://www.contributor-covenant.org/version/2/0/code_of_conduct.html.

|

||||||

|

|

||||||

Community Impact Guidelines were inspired by [Mozilla's code of conduct

|

Community Impact Guidelines were inspired by [Mozilla's code of conduct enforcement ladder](https://github.com/mozilla/diversity).

|

||||||

enforcement ladder](https://github.com/mozilla/diversity).

|

|

||||||

|

|

||||||

For answers to common questions about this code of conduct, see the FAQ at

|

For answers to common questions about this code of conduct, see the FAQ at

|

||||||

https://www.contributor-covenant.org/faq. Translations are available at

|

https://www.contributor-covenant.org/faq. Translations are available at

|

||||||

|

|||||||

@ -152,7 +152,6 @@ This comparison shows the order-of-magnitude differences in the model sizes and

|

|||||||

|

|

||||||

Tests run on a 2023 Apple M2 MacBook with 16GB of RAM. To reproduce this test:

|

Tests run on a 2023 Apple M2 MacBook with 16GB of RAM. To reproduce this test:

|

||||||

|

|

||||||

|

|

||||||

!!! example ""

|

!!! example ""

|

||||||

|

|

||||||

=== "Python"

|

=== "Python"

|

||||||

|

|||||||

@ -12,8 +12,7 @@ keywords: Meituan YOLOv6, object detection, Ultralytics, YOLOv6 docs, Bi-directi

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

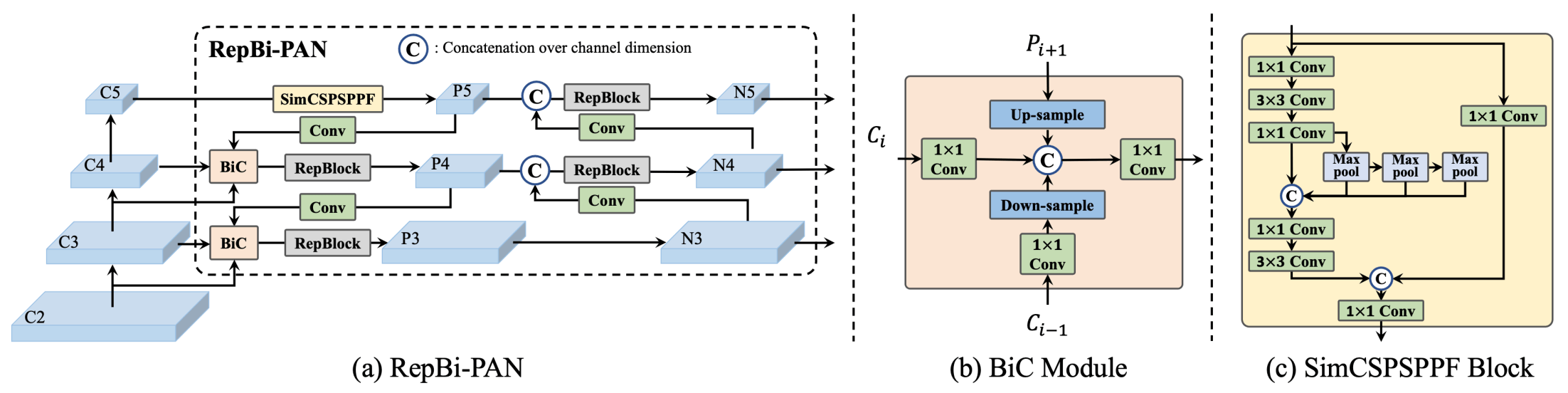

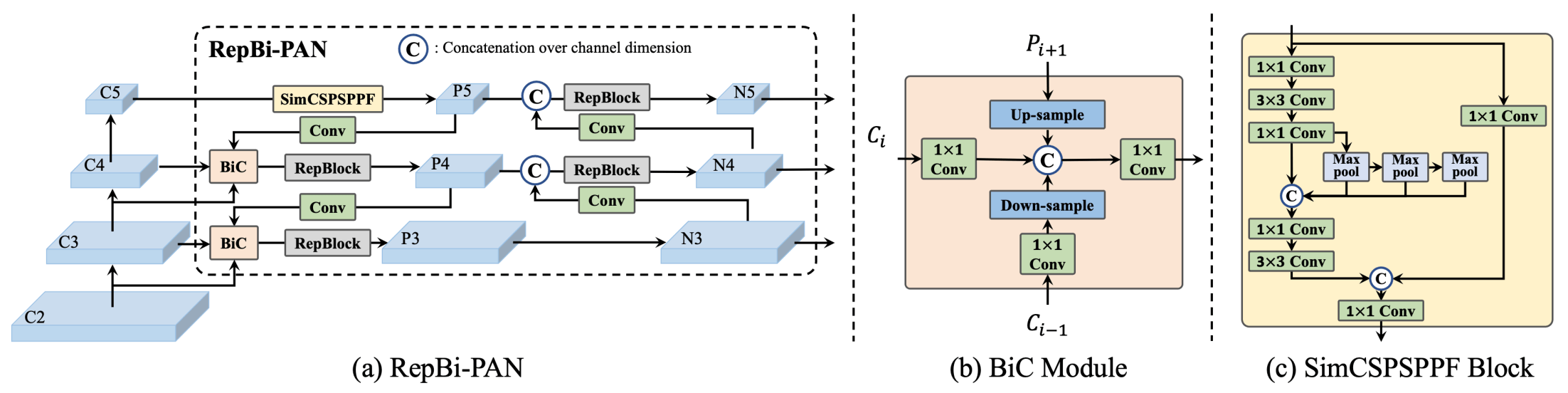

**Overview of YOLOv6.** Model architecture diagram showing the redesigned network components and training strategies that have led to significant performance improvements. (a) The neck of YOLOv6 (N and S are shown). Note for M/L, RepBlocks is replaced with CSPStackRep. (b) The

|

**Overview of YOLOv6.** Model architecture diagram showing the redesigned network components and training strategies that have led to significant performance improvements. (a) The neck of YOLOv6 (N and S are shown). Note for M/L, RepBlocks is replaced with CSPStackRep. (b) The structure of a BiC module. (c) A SimCSPSPPF block. ([source](https://arxiv.org/pdf/2301.05586.pdf)).

|

||||||

structure of a BiC module. (c) A SimCSPSPPF block. ([source](https://arxiv.org/pdf/2301.05586.pdf)).

|

|

||||||

|

|

||||||

### Key Features

|

### Key Features

|

||||||

|

|

||||||

|

|||||||

@ -20,7 +20,7 @@ Ultralytics provides various installation methods including pip, conda, and Dock

|

|||||||

pip install ultralytics

|

pip install ultralytics

|

||||||

```

|

```

|

||||||

|

|

||||||

You can also install the `ultralytics` package directly from the GitHub [repository](https://github.com/ultralytics/ultralytics). This might be useful if you want the latest development version. Make sure to have the Git command-line tool installed on your system. The `@main` command installs the `main` branch and may be modified to another branch, i.e. `@my-branch`, or removed alltogether to default to `main` branch.

|

You can also install the `ultralytics` package directly from the GitHub [repository](https://github.com/ultralytics/ultralytics). This might be useful if you want the latest development version. Make sure to have the Git command-line tool installed on your system. The `@main` command installs the `main` branch and may be modified to another branch, i.e. `@my-branch`, or removed entirely to default to `main` branch.

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

# Install the ultralytics package from GitHub

|

# Install the ultralytics package from GitHub

|

||||||

|

|||||||

@ -37,4 +37,3 @@ div.highlight {

|

|||||||

max-height: 20rem;

|

max-height: 20rem;

|

||||||

overflow-y: auto; /* for adding a scrollbar when needed */

|

overflow-y: auto; /* for adding a scrollbar when needed */

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|||||||

@ -6,9 +6,7 @@ keywords: Ultralytics, YOLO, callbacks guide, training callback, validation call

|

|||||||

|

|

||||||

## Callbacks

|

## Callbacks

|

||||||

|

|

||||||

Ultralytics framework supports callbacks as entry points in strategic stages of train, val, export, and predict modes.

|

Ultralytics framework supports callbacks as entry points in strategic stages of train, val, export, and predict modes. Each callback accepts a `Trainer`, `Validator`, or `Predictor` object depending on the operation type. All properties of these objects can be found in Reference section of the docs.

|

||||||

Each callback accepts a `Trainer`, `Validator`, or `Predictor` object depending on the operation type. All properties of

|

|

||||||

these objects can be found in Reference section of the docs.
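The callback mechanism described above can be sketched in plain Python. This is only an illustration of the pattern: `SketchTrainer` is a hypothetical stand-in rather than the Ultralytics `Trainer`, and the event names are used here as example strings.

```python
from collections import defaultdict


class SketchTrainer:
    """Hypothetical stand-in for a trainer object; not the Ultralytics class."""

    def __init__(self, epochs):
        self.epochs = epochs
        self.epoch = 0
        self.callbacks = defaultdict(list)  # event name -> list of functions

    def add_callback(self, event, func):
        """Register a function to run at the named stage."""
        self.callbacks[event].append(func)

    def run_callbacks(self, event):
        # Every callback receives the trainer itself, so it can read any attribute.
        for func in self.callbacks[event]:
            func(self)

    def train(self):
        self.run_callbacks("on_train_start")
        for epoch in range(self.epochs):
            self.epoch = epoch
            self.run_callbacks("on_train_epoch_end")
        self.run_callbacks("on_train_end")


seen = []  # epochs observed by the callback


def log_epoch(trainer):
    """Example callback: record the current epoch of the object it is given."""
    seen.append(trainer.epoch)


trainer = SketchTrainer(epochs=3)
trainer.add_callback("on_train_epoch_end", log_epoch)
trainer.train()
print(seen)
```

Because the callback is handed the whole object, it needs no extra arguments to inspect training state.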

|

|

||||||

|

|

||||||

## Examples

|

## Examples

|

||||||

|

|

||||||

|

|||||||

@ -6,8 +6,7 @@ keywords: Ultralytics, YOLO, CLI, train, validation, prediction, command line in

|

|||||||

|

|

||||||

# Command Line Interface Usage

|

# Command Line Interface Usage

|

||||||

|

|

||||||

The YOLO command line interface (CLI) allows for simple single-line commands without the need for a Python environment.

|

The YOLO command line interface (CLI) allows for simple single-line commands without the need for a Python environment. CLI requires no customization or Python code. You can simply run all tasks from the terminal with the `yolo` command.

|

||||||

CLI requires no customization or Python code. You can simply run all tasks from the terminal with the `yolo` command.

|

|

||||||

|

|

||||||

!!! example

|

!!! example

|

||||||

|

|

||||||

@ -65,11 +64,9 @@ CLI requires no customization or Python code. You can simply run all tasks from

|

|||||||

|

|

||||||

Where:

|

Where:

|

||||||

|

|

||||||

- `TASK` (optional) is one of `[detect, segment, classify]`. If it is not passed explicitly YOLOv8 will try to guess

|

- `TASK` (optional) is one of `[detect, segment, classify]`. If it is not passed explicitly YOLOv8 will try to guess the `TASK` from the model type.

|

||||||

the `TASK` from the model type.

|

|

||||||

- `MODE` (required) is one of `[train, val, predict, export, track]`

|

- `MODE` (required) is one of `[train, val, predict, export, track]`

|

||||||

- `ARGS` (optional) are any number of custom `arg=value` pairs like `imgsz=320` that override defaults.

|

- `ARGS` (optional) are any number of custom `arg=value` pairs like `imgsz=320` that override defaults. For a full list of available `ARGS` see the [Configuration](cfg.md) page and `default.yaml`

|

||||||

For a full list of available `ARGS` see the [Configuration](cfg.md) page and `default.yaml`

|

|

||||||

GitHub [source](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/default.yaml).

|

GitHub [source](https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/default.yaml).
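As a rough sketch of how `arg=value` pairs can override defaults, the snippet below parses such pairs and layers them over a defaults dictionary. It is not the parser used by the `yolo` command; `DEFAULTS`, `parse_overrides`, and `merge_config` are hypothetical names, and the default values are placeholders.

```python
import ast

# Hypothetical defaults for illustration only; the real values live in default.yaml.
DEFAULTS = {"imgsz": 640, "epochs": 100, "model": None}


def parse_overrides(args):
    """Turn ['imgsz=320', 'model=yolov8n.pt'] into a dict of typed values."""
    overrides = {}
    for arg in args:
        key, sep, raw = arg.partition("=")
        if not sep or not key:
            raise ValueError(f"expected arg=value, got {arg!r}")
        try:
            value = ast.literal_eval(raw)  # numbers, booleans, lists, ...
        except (ValueError, SyntaxError):
            value = raw  # anything else stays a plain string
        overrides[key] = value
    return overrides


def merge_config(defaults, args):
    """Return a new dict in which CLI-style pairs override the defaults."""
    return {**defaults, **parse_overrides(args)}


config = merge_config(DEFAULTS, ["imgsz=320", "model=yolov8n.pt"])
print(config)
```

Keys that are not overridden keep their default value, and the defaults dictionary itself is left unchanged.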

|

||||||

|

|

||||||

!!! warning "Warning"

|

!!! warning "Warning"

|

||||||

@ -82,8 +79,7 @@ Where:

|

|||||||

|

|

||||||

## Train

|

## Train

|

||||||

|

|

||||||

Train YOLOv8n on the COCO128 dataset for 100 epochs at image size 640. For a full list of available arguments see

|

Train YOLOv8n on the COCO128 dataset for 100 epochs at image size 640. For a full list of available arguments see the [Configuration](cfg.md) page.

|

||||||

the [Configuration](cfg.md) page.

|

|

||||||

|

|

||||||

!!! example "Example"

|

!!! example "Example"

|

||||||

|

|

||||||

@ -103,8 +99,7 @@ the [Configuration](cfg.md) page.

|

|||||||

|

|

||||||

## Val

|

## Val

|

||||||

|

|

||||||

Validate trained YOLOv8n model accuracy on the COCO128 dataset. No arguments need to be passed, as the `model` retains its

|

Validate trained YOLOv8n model accuracy on the COCO128 dataset. No arguments need to be passed, as the `model` retains its training `data` and arguments as model attributes.

|

||||||

training `data` and arguments as model attributes.

|

|

||||||

|

|

||||||

!!! example "Example"

|

!!! example "Example"

|

||||||

|

|

||||||

@ -162,8 +157,7 @@ Export a YOLOv8n model to a different format like ONNX, CoreML, etc.

|

|||||||

yolo export model=path/to/best.pt format=onnx

|

yolo export model=path/to/best.pt format=onnx

|

||||||

```

|

```

|

||||||

|

|

||||||

Available YOLOv8 export formats are in the table below. You can export to any format using the `format` argument,

|

Available YOLOv8 export formats are in the table below. You can export to any format using the `format` argument, i.e. `format='onnx'` or `format='engine'`.

|

||||||

i.e. `format='onnx'` or `format='engine'`.

|

|

||||||

|

|

||||||

| Format | `format` Argument | Model | Metadata | Arguments |

|

| Format | `format` Argument | Model | Metadata | Arguments |

|

||||||

|--------------------------------------------------------------------|-------------------|---------------------------|----------|-----------------------------------------------------|

|

|--------------------------------------------------------------------|-------------------|---------------------------|----------|-----------------------------------------------------|

|

||||||

@ -207,13 +201,11 @@ Default arguments can be overridden by simply passing them as arguments in the C

|

|||||||

|

|

||||||

## Overriding default config file

|

## Overriding default config file

|

||||||

|

|

||||||

You can override the `default.yaml` config file entirely by passing a new file with the `cfg` arguments,

|

You can override the `default.yaml` config file entirely by passing a new file with the `cfg` arguments, i.e. `cfg=custom.yaml`.

|

||||||

i.e. `cfg=custom.yaml`.

|

|

||||||

|

|

||||||

To do this first create a copy of `default.yaml` in your current working dir with the `yolo copy-cfg` command.

|

To do this first create a copy of `default.yaml` in your current working dir with the `yolo copy-cfg` command.

|

||||||

|

|

||||||

This will create `default_copy.yaml`, which you can then pass as `cfg=default_copy.yaml` along with any additional args,

|

This will create `default_copy.yaml`, which you can then pass as `cfg=default_copy.yaml` along with any additional args, like `imgsz=320` in this example:

|

||||||

like `imgsz=320` in this example:

|

|

||||||

|

|

||||||

!!! example ""

|

!!! example ""

|

||||||

|

|

||||||

|

|||||||

@ -4,18 +4,14 @@ description: Discover how to customize and extend base Ultralytics YOLO Trainer

|

|||||||

keywords: Ultralytics, YOLO, trainer engines, BaseTrainer, DetectionTrainer, customizing trainers, extending trainers, custom model, custom dataloader

|

keywords: Ultralytics, YOLO, trainer engines, BaseTrainer, DetectionTrainer, customizing trainers, extending trainers, custom model, custom dataloader

|

||||||

---

|

---

|

||||||

|

|

||||||

Both the Ultralytics YOLO command-line and python interfaces are simply a high-level abstraction on the base engine

|

Both the Ultralytics YOLO command-line and python interfaces are simply a high-level abstraction on the base engine executors. Let's take a look at the Trainer engine.

|

||||||

executors. Let's take a look at the Trainer engine.

|

|

||||||

|

|

||||||

## BaseTrainer

|

## BaseTrainer

|

||||||

|

|

||||||

BaseTrainer contains the generic boilerplate training routine. It can be customized for any task by overriding

|

BaseTrainer contains the generic boilerplate training routine. It can be customized for any task by overriding the required functions or operations, as long as the correct formats are followed. For example, you can support your own custom model and dataloader by just overriding these functions:

|

||||||

the required functions or operations, as long as the correct formats are followed. For example, you can support your own

|

|

||||||

custom model and dataloader by just overriding these functions:

|

|

||||||

|

|

||||||

* `get_model(cfg, weights)` - The function that builds the model to be trained

|

* `get_model(cfg, weights)` - The function that builds the model to be trained

|

||||||

* `get_dataloader()` - The function that builds the dataloader

|

* `get_dataloader()` - The function that builds the dataloader More details and source code can be found in [`BaseTrainer` Reference](../reference/engine/trainer.md)

|

||||||

More details and source code can be found in [`BaseTrainer` Reference](../reference/engine/trainer.md)
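The override pattern described above can be illustrated with a toy class hierarchy. Everything here is a hypothetical sketch: `SketchBaseTrainer` and `SumTrainer` are not Ultralytics classes, and the "model" is just the built-in `sum` so that the example runs without any dependencies.

```python
class SketchBaseTrainer:
    """Hypothetical base class; it only mirrors the idea of overridable hooks."""

    def get_model(self, cfg=None, weights=None):
        raise NotImplementedError("subclasses build their model here")

    def get_dataloader(self):
        raise NotImplementedError("subclasses build their dataloader here")

    def train(self, epochs=1):
        """Generic loop: it relies only on the two hooks above."""
        model = self.get_model()
        batches_seen = 0
        for _ in range(epochs):
            for batch in self.get_dataloader():
                model(batch)  # a real trainer would compute a loss and update weights
                batches_seen += 1
        return batches_seen


class SumTrainer(SketchBaseTrainer):
    """Toy subclass that supplies its own model and data."""

    def get_model(self, cfg=None, weights=None):
        return sum  # any callable works as a placeholder model

    def get_dataloader(self):
        return [[1, 2], [3, 4], [5, 6]]  # three toy batches


print(SumTrainer().train(epochs=2))
```

The training loop in the base class never changes; only the two overridden methods decide which model and data it operates on.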

|

|

||||||

|

|

||||||

## DetectionTrainer

|

## DetectionTrainer

|

||||||

|

|

||||||

@ -31,8 +27,7 @@ trained_model = trainer.best # get best model

|

|||||||

|

|

||||||

### Customizing the DetectionTrainer

|

### Customizing the DetectionTrainer

|

||||||

|

|

||||||

Let's customize the trainer **to train a custom detection model** that is not supported directly. You can do this by

|

Let's customize the trainer **to train a custom detection model** that is not supported directly. You can do this by simply overriding the existing `get_model` functionality:

|

||||||

simply overriding the existing `get_model` functionality:

|

|

||||||

|

|

||||||

```python

|

```python

|

||||||

from ultralytics.models.yolo.detect import DetectionTrainer

|

from ultralytics.models.yolo.detect import DetectionTrainer

|

||||||

|

|||||||

@ -6,14 +6,9 @@ keywords: YOLOv8, Ultralytics, Python, object detection, segmentation, classific

|

|||||||

|

|

||||||

# Python Usage

|

# Python Usage

|

||||||

|

|

||||||

Welcome to the YOLOv8 Python Usage documentation! This guide is designed to help you seamlessly integrate YOLOv8 into

|

Welcome to the YOLOv8 Python Usage documentation! This guide is designed to help you seamlessly integrate YOLOv8 into your Python projects for object detection, segmentation, and classification. Here, you'll learn how to load and use pretrained models, train new models, and perform predictions on images. The easy-to-use Python interface is a valuable resource for anyone looking to incorporate YOLOv8 into their Python projects, allowing you to quickly implement advanced object detection capabilities. Let's get started!

|

||||||

your Python projects for object detection, segmentation, and classification. Here, you'll learn how to load and use

|

|

||||||

pretrained models, train new models, and perform predictions on images. The easy-to-use Python interface is a valuable

|

|

||||||

resource for anyone looking to incorporate YOLOv8 into their Python projects, allowing you to quickly implement advanced

|

|

||||||

object detection capabilities. Let's get started!

|

|

||||||

|

|

||||||

For example, users can load a model, train it, evaluate its performance on a validation set, and even export it to ONNX

|

For example, users can load a model, train it, evaluate its performance on a validation set, and even export it to ONNX format with just a few lines of code.

|

||||||

format with just a few lines of code.

|

|

||||||

|

|

||||||

!!! example "Python"

|

!!! example "Python"

|

||||||

|

|

||||||

@ -41,9 +36,7 @@ format with just a few lines of code.

|

|||||||

|

|

||||||

## [Train](../modes/train.md)

|

## [Train](../modes/train.md)

|

||||||

|

|

||||||

Train mode is used for training a YOLOv8 model on a custom dataset. In this mode, the model is trained using the

|

Train mode is used for training a YOLOv8 model on a custom dataset. In this mode, the model is trained using the specified dataset and hyperparameters. The training process involves optimizing the model's parameters so that it can accurately predict the classes and locations of objects in an image.

|

||||||

specified dataset and hyperparameters. The training process involves optimizing the model's parameters so that it can

|

|

||||||

accurately predict the classes and locations of objects in an image.

|

|

||||||

|

|

||||||

!!! example "Train"

|

!!! example "Train"

|

||||||

|

|

||||||

@ -73,9 +66,7 @@ accurately predict the classes and locations of objects in an image.

|

|||||||

|

|

||||||

## [Val](../modes/val.md)

|

## [Val](../modes/val.md)

|

||||||

|

|

||||||

Val mode is used for validating a YOLOv8 model after it has been trained. In this mode, the model is evaluated on a

|

Val mode is used for validating a YOLOv8 model after it has been trained. In this mode, the model is evaluated on a validation set to measure its accuracy and generalization performance. This mode can be used to tune the hyperparameters of the model to improve its performance.

|

||||||

validation set to measure its accuracy and generalization performance. This mode can be used to tune the hyperparameters

|

|

||||||

of the model to improve its performance.

|

|

||||||

|

|

||||||

!!! example "Val"

|

!!! example "Val"

|

||||||

|

|

||||||

@ -103,9 +94,7 @@ of the model to improve its performance.

|

|||||||

|

|

||||||

## [Predict](../modes/predict.md)

|

## [Predict](../modes/predict.md)

|

||||||

|

|

||||||

Predict mode is used for making predictions using a trained YOLOv8 model on new images or videos. In this mode, the

|