diff --git a/docs/build_docs.py b/docs/build_docs.py

index 8db3f4a7..1f0911b9 100644

--- a/docs/build_docs.py

+++ b/docs/build_docs.py

@@ -49,8 +49,9 @@ def build_docs(use_languages=False, clone_repos=True):

os.system(f"git clone {repo} {local_dir}")

os.system(f"git -C {local_dir} pull") # update repo

shutil.rmtree(DOCS / "en/hub/sdk", ignore_errors=True) # delete if exists

- shutil.copytree(local_dir / "docs", DOCS / "en/hub/sdk")

- shutil.rmtree(DOCS / "en/hub/sdk/reference") # temporarily delete reference until we find a solution for this

+ shutil.copytree(local_dir / "docs", DOCS / "en/hub/sdk") # for docs

+ shutil.rmtree(DOCS.parent / "hub_sdk", ignore_errors=True) # delete if exists

+ shutil.copytree(local_dir / "hub_sdk", DOCS.parent / "hub_sdk") # for mkdocstrings

print(f"Cloned/Updated {repo} in {local_dir}")

# Build the main documentation

@@ -68,7 +69,7 @@ def build_docs(use_languages=False, clone_repos=True):

def update_html_links():

"""Update href links in HTML files to remove '.md' and '/index.md', excluding links starting with 'https://'."""

- html_files = Path(SITE).rglob("*.html")

+ html_files = SITE.rglob("*.html")

total_updated_links = 0

for html_file in html_files:

@@ -134,6 +135,27 @@ def update_html_head(script=""):

file.write(new_html_content)

+def update_subdir_edit_links(subdir="", docs_url=""):

+ """Point the 'Edit this page' links of HTML files in a site subdirectory at another repository's docs."""

+ from bs4 import BeautifulSoup

+

+ if subdir.startswith("/"):

+ subdir = subdir[1:]

+ html_files = (SITE / subdir).rglob("*.html")

+ for html_file in tqdm(html_files, desc="Processing subdir files"):

+ with html_file.open("r", encoding="utf-8") as file:

+ soup = BeautifulSoup(file, "html.parser")

+

+ # Find the anchor tag and update its href attribute

+ a_tag = soup.find("a", {"class": "md-content__button md-icon"})

+ if a_tag and a_tag["title"] == "Edit this page":

+ a_tag["href"] = f"{docs_url}{a_tag['href'].split(subdir)[-1]}"

+

+ # Write the updated HTML back to the file

+ with open(html_file, "w", encoding="utf-8") as file:

+ file.write(str(soup))

+

+

def main():

# Build the docs

build_docs()

@@ -141,6 +163,12 @@ def main():

# Update titles

update_page_title(SITE / "404.html", new_title="Ultralytics Docs - Not Found")

+ # Update edit links

+ update_subdir_edit_links(

+ subdir="hub/sdk/", # do not use leading slash

+ docs_url="https://github.com/ultralytics/hub-sdk/tree/develop/docs/",

+ )

+

# Update HTML file head section

# update_html_head("")
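The core of `update_subdir_edit_links` is a string rewrite of each "Edit this page" href: keep the part of the path after `subdir` and prefix it with `docs_url`. The following is a minimal stdlib-only sketch of that step. The helper name `rewrite_edit_href` and the example href are hypothetical. Unlike the diff, the sketch leaves links that do not contain `subdir` unchanged, where the diff would produce `docs_url` plus the full href. It also treats an empty `subdir` as a no-op, where the diff's `split("")` would raise `ValueError`.

```python
def rewrite_edit_href(href: str, subdir: str, docs_url: str) -> str:
    """Rewrite an 'Edit this page' href to point at another repository (hypothetical helper)."""
    subdir = subdir.lstrip("/")  # normalize: tolerate a leading slash
    if not subdir or subdir not in href:
        return href  # leave links outside the subdirectory untouched
    # Keep only the path after the last occurrence of subdir, as split(subdir)[-1] does
    return docs_url + href.rpartition(subdir)[2]


if __name__ == "__main__":
    print(
        rewrite_edit_href(
            "https://github.com/ultralytics/ultralytics/tree/main/docs/en/hub/sdk/model.md",
            "hub/sdk/",
            "https://github.com/ultralytics/hub-sdk/tree/develop/docs/",
        )
    )
```

Nested pages keep their relative path. For example, `.../hub/sdk/reference/base/auth.md` maps to `docs_url + "reference/base/auth.md"`.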

diff --git a/docs/en/datasets/explorer/dashboard.md b/docs/en/datasets/explorer/dashboard.md

index 7686646b..85670d94 100644

--- a/docs/en/datasets/explorer/dashboard.md

+++ b/docs/en/datasets/explorer/dashboard.md

@@ -9,7 +9,7 @@ keywords: Ultralytics, Explorer GUI, semantic search, vector similarity search,

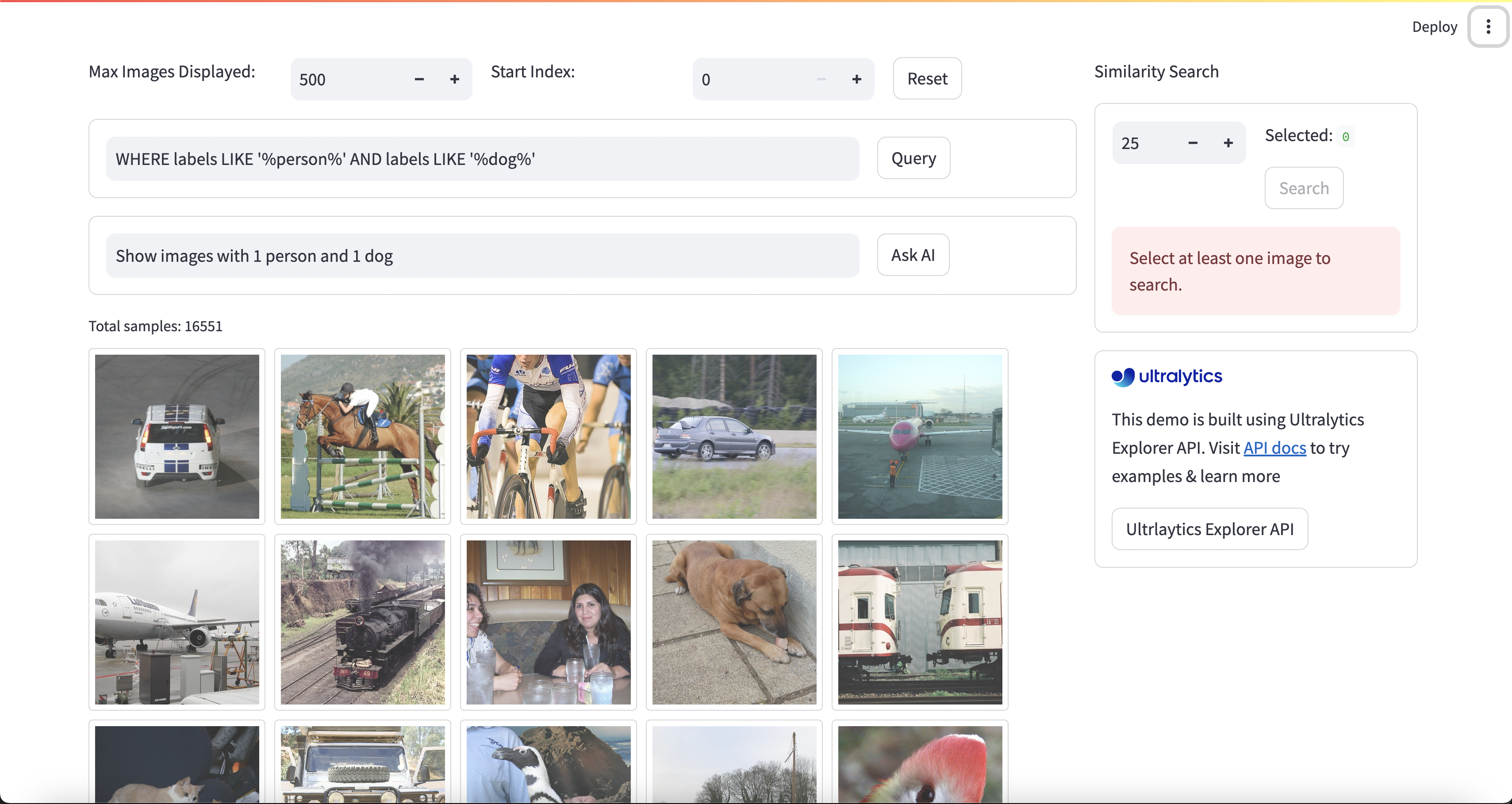

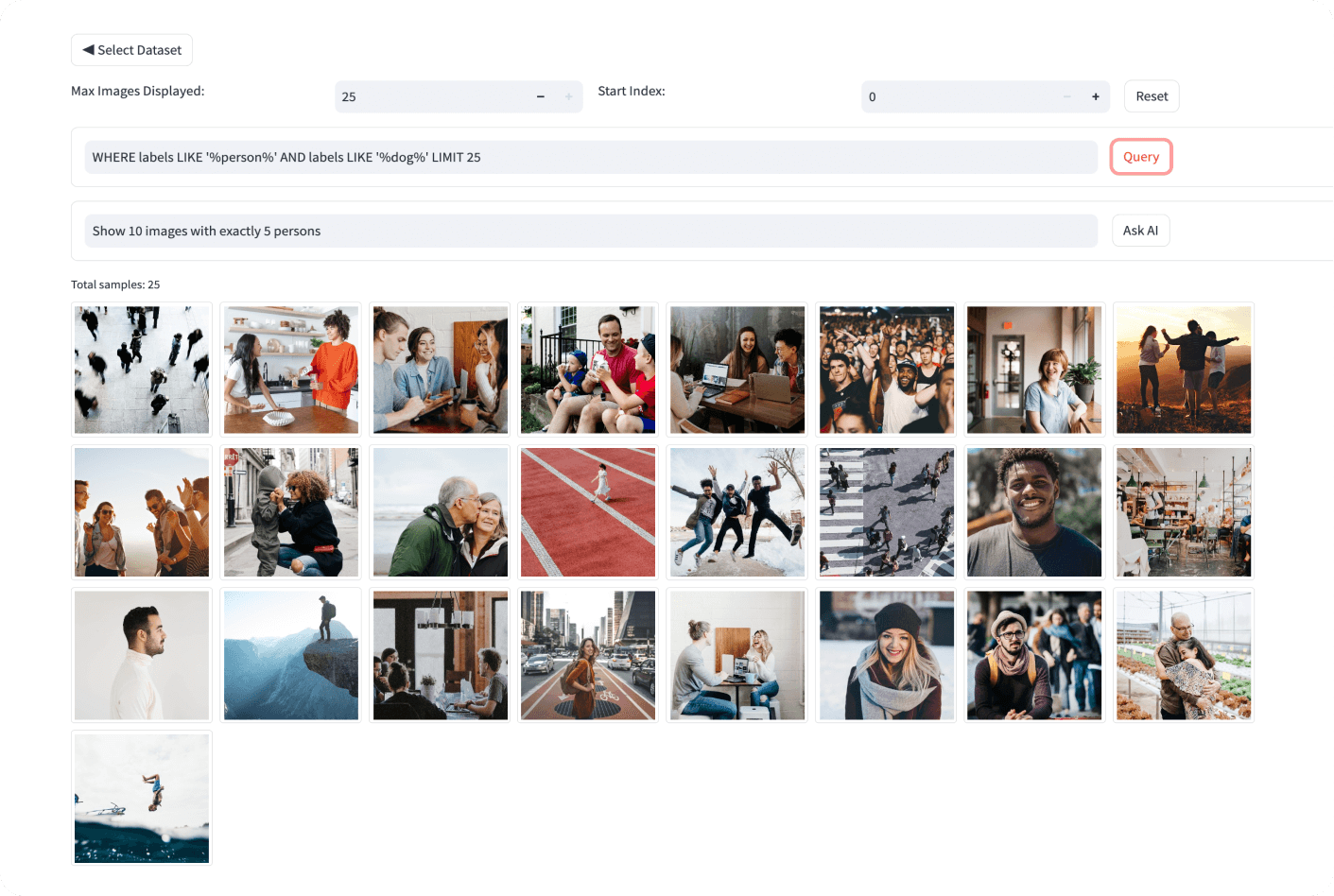

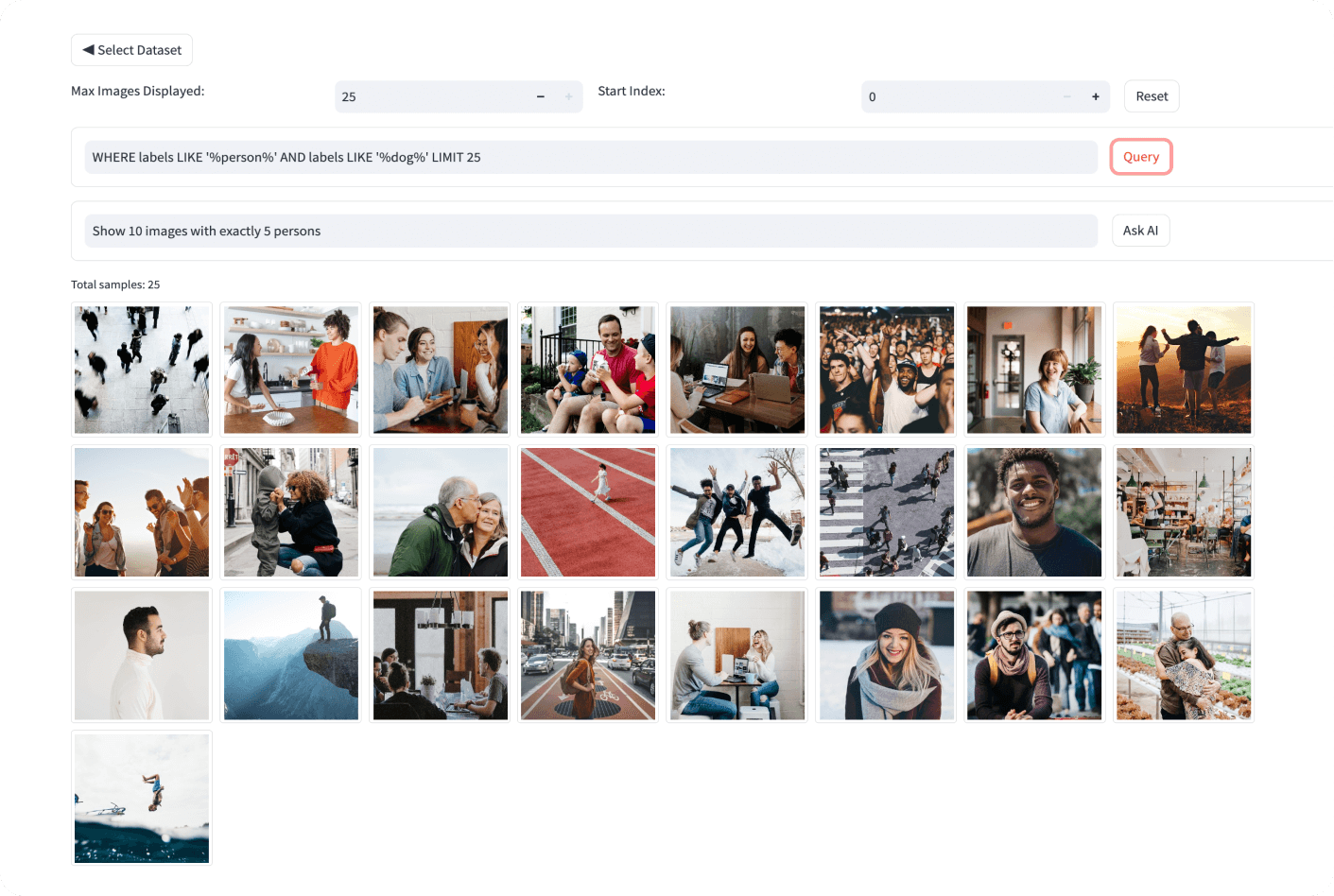

Explorer GUI is like a playground build using [Ultralytics Explorer API](api.md). It allows you to run semantic/vector similarity search, SQL queries and even search using natural language using our ask AI feature powered by LLMs.

- +

+

diff --git a/docs/en/datasets/explorer/index.md b/docs/en/datasets/explorer/index.md

index a55a0f6b..00d5daeb 100644

--- a/docs/en/datasets/explorer/index.md

+++ b/docs/en/datasets/explorer/index.md

@@ -7,7 +7,7 @@ keywords: Ultralytics Explorer, CV Dataset Tools, Semantic Search, SQL Dataset Q

# Ultralytics Explorer

-  +

+

diff --git a/docs/mkdocs.yml b/docs/mkdocs.yml

index 5b112595..ac08e208 100644

--- a/docs/mkdocs.yml

+++ b/docs/mkdocs.yml

@@ -367,6 +367,25 @@ nav:

- Model: hub/sdk/model.md

- Dataset: hub/sdk/dataset.md

- Project: hub/sdk/project.md

+ - Reference:

+ - base:

+ - api_client: hub/sdk/reference/base/api_client.md

+ - auth: hub/sdk/reference/base/auth.md

+ - crud_client: hub/sdk/reference/base/crud_client.md

+ - paginated_list: hub/sdk/reference/base/paginated_list.md

+ - server_clients: hub/sdk/reference/base/server_clients.md

+ - helpers:

+ - error_handler: hub/sdk/reference/helpers/error_handler.md

+ - exceptions: hub/sdk/reference/helpers/exceptions.md

+ - logger: hub/sdk/reference/helpers/logger.md

+ - utils: hub/sdk/reference/helpers/utils.md

+ - hub_client: hub/sdk/reference/hub_client.md

+ - modules:

+ - datasets: hub/sdk/reference/modules/datasets.md

+ - models: hub/sdk/reference/modules/models.md

+ - projects: hub/sdk/reference/modules/projects.md

+ - teams: hub/sdk/reference/modules/teams.md

+ - users: hub/sdk/reference/modules/users.md

- REST API:

- hub/api/index.md

diff --git a/docs/mkdocs_github_authors.yaml b/docs/mkdocs_github_authors.yaml

new file mode 100644

index 00000000..fb94ba75

--- /dev/null

+++ b/docs/mkdocs_github_authors.yaml

@@ -0,0 +1,22 @@

+# Ultralytics YOLO 🚀, AGPL-3.0 license

+# Author list used in docs publication actions

+

+1579093407@qq.com: null

+17216799+ouphi@users.noreply.github.com: ouphi

+17316848+maianumerosky@users.noreply.github.com: maianumerosky

+34196005+fcakyon@users.noreply.github.com: fcakyon

+37276661+capjamesg@users.noreply.github.com: capjamesg

+39910262+ChaoningZhang@users.noreply.github.com: ChaoningZhang

+40165666+berry-ding@users.noreply.github.com: berry-ding

+47978446+sergiuwaxmann@users.noreply.github.com: sergiuwaxmann

+61612323+Laughing-q@users.noreply.github.com: Laughing-q

+62214284+Burhan-Q@users.noreply.github.com: Burhan-Q

+75611662+tensorturtle@users.noreply.github.com: tensorturtle

+abirami.vina@gmail.com: abirami-vina

+ayush.chaurarsia@gmail.com: AyushExel

+chr043416@gmail.com: null

+glenn.jocher@ultralytics.com: glenn-jocher

+muhammadrizwanmunawar123@gmail.com: RizwanMunawar

+not.committed.yet: null

+shuizhuyuanluo@126.com: null

+xinwang614@gmail.com: GreatV

diff --git a/ultralytics/cfg/__init__.py b/ultralytics/cfg/__init__.py

index 6038baf0..8a2de594 100644

--- a/ultralytics/cfg/__init__.py

+++ b/ultralytics/cfg/__init__.py

@@ -317,7 +317,7 @@ def merge_equals_args(args: List[str]) -> List[str]:

args (List[str]): A list of strings where each element is an argument.

Returns:

- List[str]: A list of strings where the arguments around isolated '=' are merged.

+ (List[str]): A list of strings where the arguments around isolated '=' are merged.

"""

new_args = []

for i, arg in enumerate(args):
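The docstring describes merging CLI arguments that were split around an isolated `=`. The sketch below shows that behaviour, written independently as an index-based loop. The name `merge_equals_args_sketch` is hypothetical. The three merge patterns (`['arg', '=', 'val']`, `['arg=', 'val']`, `['arg', '=val']`) are an assumption about what the function handles, not a copy of the library code.

```python
from typing import List


def merge_equals_args_sketch(args: List[str]) -> List[str]:
    """Merge arguments split around '=' into single 'key=value' tokens (illustrative sketch)."""
    merged: List[str] = []
    i = 0
    while i < len(args):
        arg = args[i]
        nxt = args[i + 1] if i + 1 < len(args) else None
        if arg == "=" and merged and nxt is not None:  # ['arg', '=', 'val'] -> 'arg=val'
            merged[-1] += f"={nxt}"
            i += 2
        elif arg.endswith("=") and arg != "=" and nxt is not None and "=" not in nxt:  # ['arg=', 'val']
            merged.append(arg + nxt)
            i += 2
        elif arg.startswith("=") and arg != "=" and merged:  # ['arg', '=val']
            merged[-1] += arg
            i += 1
        else:  # already a complete token
            merged.append(arg)
            i += 1
    return merged
```

For example, `["model=yolov8n.pt", "imgsz", "=", "320"]` becomes `["model=yolov8n.pt", "imgsz=320"]`. The input list is not mutated.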

diff --git a/ultralytics/data/dataset.py b/ultralytics/data/dataset.py

index aecfc2a4..4c4bcebf 100644

--- a/ultralytics/data/dataset.py

+++ b/ultralytics/data/dataset.py

@@ -46,7 +46,8 @@ class YOLODataset(BaseDataset):

Cache dataset labels, check images and read shapes.

Args:

- path (Path): path where to save the cache file (default: Path('./labels.cache')).

+ path (Path): Path where to save the cache file. Default is Path('./labels.cache').

+

Returns:

(dict): labels.

"""

@@ -178,9 +179,13 @@ class YOLODataset(BaseDataset):

self.transforms = self.build_transforms(hyp)

def update_labels_info(self, label):

- """Custom your label format here."""

- # NOTE: cls is not with bboxes now, classification and semantic segmentation need an independent cls label

- # We can make it also support classification and semantic segmentation by add or remove some dict keys there.

+ """

+ Customize your label format here.

+

+ Note:

+ cls is not stored together with bboxes; classification and semantic segmentation need an independent cls label.

+ Support for classification and semantic segmentation can be added by adding or removing dict keys here.

+ """

bboxes = label.pop("bboxes")

segments = label.pop("segments", [])

keypoints = label.pop("keypoints", None)

diff --git a/ultralytics/engine/results.py b/ultralytics/engine/results.py

index 4faaeb99..5338fe68 100644

--- a/ultralytics/engine/results.py

+++ b/ultralytics/engine/results.py

@@ -667,8 +667,11 @@ class OBB(BaseTensor):

@property

@lru_cache(maxsize=2)

def xyxy(self):

- """Return the horizontal boxes in xyxy format, (N, 4)."""

- # This way to fit both torch and numpy version

+ """

+ Return the horizontal boxes in xyxy format, (N, 4).

+

+ Accepts both torch and numpy boxes.

+ """

x1 = self.xyxyxyxy[..., 0].min(1).values

x2 = self.xyxyxyxy[..., 0].max(1).values

y1 = self.xyxyxyxy[..., 1].min(1).values
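The horizontal box is the axis-aligned envelope of the four rotated corners: the minimum and maximum over the x coordinates, and the same over the y coordinates. Below is a stdlib-only sketch for a single box. The name `corners_to_xyxy` is hypothetical, and the sketch is independent of torch or numpy.

```python
from typing import Sequence, Tuple


def corners_to_xyxy(corners: Sequence[Tuple[float, float]]) -> Tuple[float, float, float, float]:
    """Return (x1, y1, x2, y2) enclosing the four (x, y) corners of a rotated box."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return min(xs), min(ys), max(xs), max(ys)
```

A square rotated by 45 degrees with corners `(0, 1), (1, 0), (2, 1), (1, 2)` yields the enclosing box `(0, 0, 2, 2)`.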

diff --git a/ultralytics/engine/trainer.py b/ultralytics/engine/trainer.py

index 75b3ea3f..71ea5e84 100644

--- a/ultralytics/engine/trainer.py

+++ b/ultralytics/engine/trainer.py

@@ -563,8 +563,12 @@ class BaseTrainer:

raise NotImplementedError("build_dataset function not implemented in trainer")

def label_loss_items(self, loss_items=None, prefix="train"):

- """Returns a loss dict with labelled training loss items tensor."""

- # Not needed for classification but necessary for segmentation & detection

+ """

+ Returns a loss dict with labelled training loss items tensor.

+

+ Note:

+ This is not needed for classification but is necessary for segmentation and detection.

+ """

return {"loss": loss_items} if loss_items is not None else ["loss"]

def set_model_attributes(self):

diff --git a/ultralytics/hub/__init__.py b/ultralytics/hub/__init__.py

index 745d4a94..6c8afc6f 100644

--- a/ultralytics/hub/__init__.py

+++ b/ultralytics/hub/__init__.py

@@ -12,13 +12,16 @@ def login(api_key: str = None, save=True) -> bool:

"""

Log in to the Ultralytics HUB API using the provided API key.

- The session is not stored; a new session is created when needed using the saved SETTINGS or the HUB_API_KEY environment variable if successfully authenticated.

+ The session is not stored; a new session is created when needed using the saved SETTINGS or the HUB_API_KEY

+ environment variable if successfully authenticated.

Args:

- api_key (str, optional): The API key to use for authentication. If not provided, it will be retrieved from SETTINGS or HUB_API_KEY environment variable.

+ api_key (str, optional): API key to use for authentication.

+ If not provided, it will be retrieved from SETTINGS or HUB_API_KEY environment variable.

save (bool, optional): Whether to save the API key to SETTINGS if authentication is successful.

+

Returns:

- bool: True if authentication is successful, False otherwise.

+ (bool): True if authentication is successful, False otherwise.

"""

checks.check_requirements("hub-sdk>=0.0.2")

from hub_sdk import HUBClient

diff --git a/ultralytics/hub/auth.py b/ultralytics/hub/auth.py

index 17bb4986..340f5841 100644

--- a/ultralytics/hub/auth.py

+++ b/ultralytics/hub/auth.py

@@ -87,7 +87,7 @@ class Auth:

Attempt to authenticate with the server using either id_token or API key.

Returns:

- bool: True if authentication is successful, False otherwise.

+ (bool): True if authentication is successful, False otherwise.

"""

try:

if header := self.get_auth_header():

@@ -107,7 +107,7 @@ class Auth:

supported browser.

Returns:

- bool: True if authentication is successful, False otherwise.

+ (bool): True if authentication is successful, False otherwise.

"""

if not is_colab():

return False # Currently only works with Colab

diff --git a/ultralytics/hub/session.py b/ultralytics/hub/session.py

index 8a4d8c59..2d26b5a9 100644

--- a/ultralytics/hub/session.py

+++ b/ultralytics/hub/session.py

@@ -277,7 +277,7 @@ class HUBTrainingSession:

timeout: The maximum timeout duration.

Returns:

- str: The retry message.

+ (str): The retry message.

"""

if self._should_retry(response.status_code):

return f"Retrying {retry}x for {timeout}s." if retry else ""

@@ -341,7 +341,7 @@ class HUBTrainingSession:

response (requests.Response): The response object from the file download request.

Returns:

- (None)

+ None

"""

with TQDM(total=content_length, unit="B", unit_scale=True, unit_divisor=1024) as pbar:

for data in response.iter_content(chunk_size=1024):
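The first session.py hunk only builds the retry message when `_should_retry` accepts the status code. Below is a sketch of that logic with a hypothetical `retry_message` helper. The set of retryable codes (408, 502, 504) is an assumption. Returning an empty string for every other code simplifies the method's remaining branches, which the hunk does not show.

```python
from http import HTTPStatus

# Assumed retryable statuses; HTTPStatus members compare equal to plain ints
RETRY_CODES = {HTTPStatus.REQUEST_TIMEOUT, HTTPStatus.BAD_GATEWAY, HTTPStatus.GATEWAY_TIMEOUT}


def retry_message(status_code: int, retry: int, timeout: int) -> str:
    """Return the retry message for a retryable status, else an empty string (sketch)."""
    if status_code in RETRY_CODES:
        return f"Retrying {retry}x for {timeout}s." if retry else ""
    return ""
```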

diff --git a/ultralytics/models/sam/amg.py b/ultralytics/models/sam/amg.py

index c4bb6d1b..128108fe 100644

--- a/ultralytics/models/sam/amg.py

+++ b/ultralytics/models/sam/amg.py

@@ -35,9 +35,11 @@ def calculate_stability_score(masks: torch.Tensor, mask_threshold: float, thresh

The stability score is the IoU between the binary masks obtained by thresholding the predicted mask logits at high

and low values.

+

+ Notes:

+ - One mask is always contained inside the other.

+ - Memory is saved by summing with int16/int32 dtypes instead of casting to torch.int64.

"""

- # One mask is always contained inside the other.

- # Save memory by preventing unnecessary cast to torch.int64

intersections = (masks > (mask_threshold + threshold_offset)).sum(-1, dtype=torch.int16).sum(-1, dtype=torch.int32)

unions = (masks > (mask_threshold - threshold_offset)).sum(-1, dtype=torch.int16).sum(-1, dtype=torch.int32)

return intersections / unions
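Because the offset is positive, every pixel above the high threshold is also above the low one. The intersection is therefore the high-threshold count and the union is the low-threshold count, which is why the code can skip an explicit logical AND/OR. Below is a flat, stdlib-only sketch for one mask. The name `stability_score` is hypothetical, and the zero-union guard is an addition not present in the diff.

```python
from typing import Sequence


def stability_score(logits: Sequence[float], mask_threshold: float, offset: float) -> float:
    """IoU between the masks thresholded at mask_threshold + offset and mask_threshold - offset."""
    high = sum(1 for v in logits if v > mask_threshold + offset)  # stricter mask (intersection)
    low = sum(1 for v in logits if v > mask_threshold - offset)  # looser mask (union)
    return high / low if low else 0.0
```

With logits `[0.5, 1.5, -0.5, 2.0, 0.9]`, threshold `0.0` and offset `1.0`, two pixels pass the high threshold and all five pass the low one, so the score is 0.4.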

diff --git a/ultralytics/models/yolo/segment/val.py b/ultralytics/models/yolo/segment/val.py

index 72c04b2c..94757c4e 100644

--- a/ultralytics/models/yolo/segment/val.py

+++ b/ultralytics/models/yolo/segment/val.py

@@ -215,8 +215,12 @@ class SegmentationValidator(DetectionValidator):

self.plot_masks.clear()

def pred_to_json(self, predn, filename, pred_masks):

- """Save one JSON result."""

- # Example result = {"image_id": 42, "category_id": 18, "bbox": [258.15, 41.29, 348.26, 243.78], "score": 0.236}

+ """

+ Save one JSON result.

+

+ Examples:

+ >>> result = {"image_id": 42, "category_id": 18, "bbox": [258.15, 41.29, 348.26, 243.78], "score": 0.236}

+ """

from pycocotools.mask import encode # noqa

def single_encode(x):
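The example dict follows the COCO results format, where `bbox` is `[x_min, y_min, width, height]` in pixels. Below is a sketch of building one such record from an xyxy box. The name `coco_result` is hypothetical, and the rounding precision is an illustrative choice. The RLE `segmentation` field that would come from pycocotools' `encode` is omitted.

```python
from typing import Dict, Sequence, Union


def coco_result(image_id: int, category_id: int, xyxy: Sequence[float], score: float) -> Dict[str, Union[int, float, list]]:
    """Build one COCO-style detection record from an (x1, y1, x2, y2) box."""
    x1, y1, x2, y2 = xyxy
    return {
        "image_id": image_id,
        "category_id": category_id,
        # COCO uses top-left corner plus width and height
        "bbox": [round(x1, 3), round(y1, 3), round(x2 - x1, 3), round(y2 - y1, 3)],
        "score": round(score, 5),
    }
```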

diff --git a/ultralytics/nn/autobackend.py b/ultralytics/nn/autobackend.py

index 8f55c3ba..3fafbbd9 100644

--- a/ultralytics/nn/autobackend.py

+++ b/ultralytics/nn/autobackend.py

@@ -508,9 +508,6 @@ class AutoBackend(nn.Module):

Args:

imgsz (tuple): The shape of the dummy input tensor in the format (batch_size, channels, height, width)

-

- Returns:

- (None): This method runs the forward pass and don't return any value

"""

warmup_types = self.pt, self.jit, self.onnx, self.engine, self.saved_model, self.pb, self.triton, self.nn_module

if any(warmup_types) and (self.device.type != "cpu" or self.triton):

@@ -521,13 +518,16 @@ class AutoBackend(nn.Module):

@staticmethod

def _model_type(p="path/to/model.pt"):

"""

- This function takes a path to a model file and returns the model type.

+ This function takes a path to a model file and returns the model type. Possible types are pt, jit, onnx, xml,

+ engine, coreml, saved_model, pb, tflite, edgetpu, tfjs, ncnn, or paddle.

Args:

p: path to the model file. Defaults to path/to/model.pt

+

+ Examples:

+ >>> model_type = AutoBackend._model_type("path/to/model.onnx")  # static method: pass the path directly

"""

- # Return model type from model path, i.e. path='path/to/model.onnx' -> type=onnx

- # types = [pt, jit, onnx, xml, engine, coreml, saved_model, pb, tflite, edgetpu, tfjs, paddle]

from ultralytics.engine.exporter import export_formats

sf = list(export_formats().Suffix) # export suffixes
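`_model_type` matches the export suffixes from `export_formats()` against the file name. Below is a simplified stdlib-only sketch of that matching. The function `model_type_from_path` and its suffix table are hypothetical, and the table covers only a subset of formats. The sketch returns a single format string for readability; the real method's return value is not shown in this hunk. It checks `_edgetpu.tflite` before `.tflite` because an Edge TPU file name also contains the plain TFLite suffix.

```python
from pathlib import Path

# Hypothetical subset of export suffixes, checked in insertion order (most specific first)
SUFFIXES = {
    "edgetpu": "_edgetpu.tflite",
    "tflite": ".tflite",
    "onnx": ".onnx",
    "engine": ".engine",
    "pt": ".pt",
}


def model_type_from_path(p: str) -> str:
    """Return the first format whose suffix appears in the file name, else 'unknown'."""
    name = Path(p).name
    for fmt, suffix in SUFFIXES.items():
        if suffix in name:
            return fmt
    return "unknown"
```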

diff --git a/ultralytics/solutions/object_counter.py b/ultralytics/solutions/object_counter.py

index 98231c3c..e06c5a25 100644

--- a/ultralytics/solutions/object_counter.py

+++ b/ultralytics/solutions/object_counter.py

@@ -136,7 +136,6 @@ class ObjectCounter:

cv2.EVENT_FLAG_SHIFTKEY, etc.).

params (dict): Additional parameters you may want to pass to the function.

"""

- # global is_drawing, selected_point

if event == cv2.EVENT_LBUTTONDOWN:

for i, point in enumerate(self.reg_pts):

if (

diff --git a/ultralytics/utils/__init__.py b/ultralytics/utils/__init__.py

index 07641c65..d8cfccaa 100644

--- a/ultralytics/utils/__init__.py

+++ b/ultralytics/utils/__init__.py

@@ -116,8 +116,11 @@ class TQDM(tqdm_original):

"""

def __init__(self, *args, **kwargs):

- """Initialize custom Ultralytics tqdm class with different default arguments."""

- # Set new default values (these can still be overridden when calling TQDM)

+ """

+ Initialize custom Ultralytics tqdm class with different default arguments.

+

+ Note that these defaults can still be overridden when calling TQDM.

+ """

kwargs["disable"] = not VERBOSE or kwargs.get("disable", False) # logical 'and' with default value if passed

kwargs.setdefault("bar_format", TQDM_BAR_FORMAT) # override default value if passed

super().__init__(*args, **kwargs)
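The two kwargs lines above behave differently. `bar_format` is only a default that callers may override. `disable` is computed as `not VERBOSE or disable`, a logical OR, so the bar is always disabled when VERBOSE is False. Below is a stdlib sketch of those semantics. `apply_tqdm_defaults` is a hypothetical helper that only mirrors the two lines and does not touch tqdm.

```python
from typing import Any, Dict


def apply_tqdm_defaults(kwargs: Dict[str, Any], verbose: bool, bar_format: str) -> Dict[str, Any]:
    """Return a copy of kwargs with the custom progress-bar defaults applied."""
    out = dict(kwargs)
    # Disabled if verbosity is off OR the caller asked for it (logical OR)
    out["disable"] = (not verbose) or out.get("disable", False)
    # Only fill in bar_format when the caller did not pass one
    out.setdefault("bar_format", bar_format)
    return out
```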

@@ -377,7 +380,7 @@ def yaml_print(yaml_file: Union[str, Path, dict]) -> None:

yaml_file: The file path of the YAML file or a YAML-formatted dictionary.

Returns:

- None

+ (None)

"""

yaml_dict = yaml_load(yaml_file) if isinstance(yaml_file, (str, Path)) else yaml_file

dump = yaml.dump(yaml_dict, sort_keys=False, allow_unicode=True)

@@ -610,7 +613,7 @@ def get_ubuntu_version():

def get_user_config_dir(sub_dir="Ultralytics"):

"""

- Get the user config directory.

+ Return the appropriate config directory for the current operating system.

Args:

sub_dir (str): The name of the subdirectory to create.

@@ -618,7 +621,6 @@ def get_user_config_dir(sub_dir="Ultralytics"):

Returns:

(Path): The path to the user config directory.

"""

- # Return the appropriate config directory for each operating system

if WINDOWS:

path = Path.home() / "AppData" / "Roaming" / sub_dir

elif MACOS: # macOS
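Only the Windows branch is visible in the hunk. The macOS (`Library/Application Support`) and Linux (`.config`) paths below are assumptions. This sketch also omits any writability fallback the real function may perform. To keep it testable on any platform, the hypothetical `config_dir_for` takes the OS name, as returned by `platform.system()`, and the home directory as arguments.

```python
from pathlib import PurePath, PurePosixPath


def config_dir_for(system: str, home: PurePath, sub_dir: str = "Ultralytics") -> PurePath:
    """Return the per-user config directory for the given OS name (sketch)."""
    if system == "Windows":
        return home / "AppData" / "Roaming" / sub_dir
    if system == "Darwin":  # macOS
        return home / "Library" / "Application Support" / sub_dir
    return home / ".config" / sub_dir  # Linux and other POSIX systems


if __name__ == "__main__":
    print(config_dir_for("Linux", PurePosixPath("/home/user")))
```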

diff --git a/ultralytics/utils/benchmarks.py b/ultralytics/utils/benchmarks.py

index dbb95183..6a3b1762 100644

--- a/ultralytics/utils/benchmarks.py

+++ b/ultralytics/utils/benchmarks.py

@@ -258,8 +258,7 @@ class ProfileModels:

"""Retrieves the information including number of layers, parameters, gradients and FLOPs for an ONNX model

file.

"""

- # return (num_layers, num_params, num_gradients, num_flops)

- return 0.0, 0.0, 0.0, 0.0

+ return 0.0, 0.0, 0.0, 0.0 # return (num_layers, num_params, num_gradients, num_flops)

def iterative_sigma_clipping(self, data, sigma=2, max_iters=3):

"""Applies an iterative sigma clipping algorithm to the given data times number of iterations."""

diff --git a/ultralytics/utils/checks.py b/ultralytics/utils/checks.py

index ea4f757d..0931f4cf 100644

--- a/ultralytics/utils/checks.py

+++ b/ultralytics/utils/checks.py

@@ -109,7 +109,7 @@ def is_ascii(s) -> bool:

s (str): String to be checked.

Returns:

- bool: True if the string is composed only of ASCII characters, False otherwise.

+ (bool): True if the string is composed only of ASCII characters, False otherwise.

"""

# Convert list, tuple, None, etc. to string

s = str(s)

@@ -327,7 +327,7 @@ def check_python(minimum: str = "3.8.0") -> bool:

minimum (str): Required minimum version of python.

Returns:

- None

+ (bool): Whether the installed Python version meets the minimum required version.

"""

return check_version(platform.python_version(), minimum, name="Python ", hard=True)

diff --git a/ultralytics/utils/loss.py b/ultralytics/utils/loss.py

index af3d240f..28dee2ef 100644

--- a/ultralytics/utils/loss.py

+++ b/ultralytics/utils/loss.py

@@ -87,8 +87,12 @@ class BboxLoss(nn.Module):

@staticmethod

def _df_loss(pred_dist, target):

- """Return sum of left and right DFL losses."""

- # Distribution Focal Loss (DFL) proposed in Generalized Focal Loss https://ieeexplore.ieee.org/document/9792391

+ """

+ Return sum of left and right DFL losses.

+

+ Distribution Focal Loss (DFL) proposed in Generalized Focal Loss

+ https://ieeexplore.ieee.org/document/9792391

+ """

tl = target.long() # target left

tr = tl + 1 # target right

wl = tr - target # weight left
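As the lines above show, DFL splits a continuous target `t` between its two neighbouring integer bins. The weights are `wl = tr - t` and `wr = 1 - wl`, and the loss is the weighted sum of the two cross-entropies. Below is a stdlib-only sketch for one predicted distribution. The name `dfl_loss` is hypothetical. It assumes `0 <= t < len(logits) - 1`, and it does not batch like the tensor version in the diff.

```python
import math
from typing import Sequence


def dfl_loss(logits: Sequence[float], target: float) -> float:
    """Distribution Focal Loss for one predicted distribution over integer bins (sketch)."""
    # Numerically stable log-softmax via log-sum-exp
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    log_p = [v - log_z for v in logits]
    tl = int(math.floor(target))  # left bin
    tr = tl + 1  # right bin
    wl = tr - target  # weight of the left bin
    wr = 1 - wl  # weight of the right bin
    # Weighted sum of the two cross-entropy terms
    return -(wl * log_p[tl] + wr * log_p[tr])
```

With uniform logits over four bins, both cross-entropy terms equal log 4 and the weights sum to 1, so the loss is log 4 for any valid target.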

@@ -696,6 +700,7 @@ class v8OBBLoss(v8DetectionLoss):

anchor_points (torch.Tensor): Anchor points, (h*w, 2).

pred_dist (torch.Tensor): Predicted rotated distance, (bs, h*w, 4).

pred_angle (torch.Tensor): Predicted angle, (bs, h*w, 1).

+

Returns:

(torch.Tensor): Predicted rotated bounding boxes with angles, (bs, h*w, 5).

"""

diff --git a/ultralytics/utils/metrics.py b/ultralytics/utils/metrics.py

index f10ae329..7a3e9d32 100644

--- a/ultralytics/utils/metrics.py

+++ b/ultralytics/utils/metrics.py

@@ -180,7 +180,7 @@ def _get_covariance_matrix(boxes):

Returns:

(torch.Tensor): Covariance metrixs corresponding to original rotated bounding boxes.

"""

- # Gaussian bounding boxes, ignored the center points(the first two columns) cause it's not needed here.

+ # Gaussian bounding boxes, ignore the center points (the first two columns) because they are not needed here.

gbbs = torch.cat((torch.pow(boxes[:, 2:4], 2) / 12, boxes[:, 4:]), dim=-1)

a, b, c = gbbs.split(1, dim=-1)

return (
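The covariance comes from modelling a rotated box of width w, height h and angle θ as a uniform distribution. Each side contributes a variance of side²/12, which is the `torch.pow(boxes[:, 2:4], 2) / 12` term, and that diagonal covariance is then rotated by θ. Below is a stdlib sketch for one box. The name `rotated_box_covariance` is hypothetical, and the rotation formulas are the standard R·diag(a, b)·Rᵀ expansion, assumed to match the part of the function not shown in the hunk.

```python
import math
from typing import Tuple


def rotated_box_covariance(w: float, h: float, theta: float) -> Tuple[float, float, float]:
    """Return (var_x, var_y, cov_xy) of a uniform distribution over a rotated w x h box."""
    a, b = w**2 / 12, h**2 / 12  # variances along the box's own axes
    cos, sin = math.cos(theta), math.sin(theta)
    return a * cos**2 + b * sin**2, a * sin**2 + b * cos**2, (a - b) * cos * sin
```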

diff --git a/ultralytics/utils/ops.py b/ultralytics/utils/ops.py

index 5f02dabc..c569a3cb 100644

--- a/ultralytics/utils/ops.py

+++ b/ultralytics/utils/ops.py

@@ -75,7 +75,6 @@ def segment2box(segment, width=640, height=640):

Returns:

(np.ndarray): the minimum and maximum x and y values of the segment.

"""

- # Convert 1 segment label to 1 box label, applying inside-image constraint, i.e. (xy1, xy2, ...) to (xyxy)

x, y = segment.T # segment xy

inside = (x >= 0) & (y >= 0) & (x <= width) & (y <= height)

x = x[inside]
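The removed comment described this function as converting one segment label into one box label under an inside-image constraint. Below is a stdlib sketch of that conversion. The name `segment_to_box` is hypothetical. Returning zeros when no point lies inside the image is an assumption about the part of the function not shown.

```python
from typing import List, Sequence, Tuple


def segment_to_box(segment: Sequence[Tuple[float, float]], width: float = 640, height: float = 640) -> List[float]:
    """Return [x_min, y_min, x_max, y_max] of the segment points that lie inside the image."""
    inside = [(x, y) for x, y in segment if 0 <= x <= width and 0 <= y <= height]
    if not inside:
        return [0.0, 0.0, 0.0, 0.0]  # no usable points
    xs = [x for x, _ in inside]
    ys = [y for _, y in inside]
    return [min(xs), min(ys), max(xs), max(ys)]
```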

diff --git a/ultralytics/utils/plotting.py b/ultralytics/utils/plotting.py

index 3028ed14..629de701 100644

--- a/ultralytics/utils/plotting.py

+++ b/ultralytics/utils/plotting.py

@@ -388,6 +388,7 @@ class Annotator:

a (float) : The value of pose point a

b (float): The value of pose point b

c (float): The value o pose point c

+

Returns:

angle (degree): Degree value of angle between three points

"""
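The docstring types `a`, `b` and `c` as floats, but an angle between three pose points is formed by three (x, y) keypoints with `b` as the vertex. Below is a stdlib sketch of one common way to compute it with `atan2`, folding the result into the range [0, 180] degrees. The name `angle_at_b` is hypothetical, and the method body is not shown in the hunk.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def angle_at_b(a: Point, b: Point, c: Point) -> float:
    """Return the angle ABC in degrees, in the range [0, 180]."""
    radians = math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    angle = abs(math.degrees(radians))
    return 360.0 - angle if angle > 180.0 else angle
```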

diff --git a/ultralytics/utils/triton.py b/ultralytics/utils/triton.py

index 98c4be20..3f873a6f 100644

--- a/ultralytics/utils/triton.py

+++ b/ultralytics/utils/triton.py

@@ -75,7 +75,7 @@ class TritonRemoteModel:

*inputs (List[np.ndarray]): Input data to the model.

Returns:

- List[np.ndarray]: Model outputs.

+ (List[np.ndarray]): Model outputs.

"""

infer_inputs = []

input_format = inputs[0].dtype

diff --git a/ultralytics/utils/tuner.py b/ultralytics/utils/tuner.py

index db7d4907..20e3b756 100644

--- a/ultralytics/utils/tuner.py

+++ b/ultralytics/utils/tuner.py

@@ -94,7 +94,7 @@ def run_ray_tune(

config (dict): A dictionary of hyperparameters to use for training.

Returns:

- None.

+ None

"""

model_to_train = ray.get(model_in_store) # get the model from ray store for tuning

model_to_train.reset_callbacks()

diff --git a/docs/mkdocs.yml b/docs/mkdocs.yml

index 5b112595..ac08e208 100644

--- a/docs/mkdocs.yml

+++ b/docs/mkdocs.yml

@@ -367,6 +367,25 @@ nav:

- Model: hub/sdk/model.md

- Dataset: hub/sdk/dataset.md

- Project: hub/sdk/project.md

+ - Reference:

+ - base:

+ - api_client: hub/sdk/reference/base/api_client.md

+ - auth: hub/sdk/reference/base/auth.md

+ - crud_client: hub/sdk/reference/base/crud_client.md

+ - paginated_list: hub/sdk/reference/base/paginated_list.md

+ - server_clients: hub/sdk/reference/base/server_clients.md

+ - helpers:

+ - error_handler: hub/sdk/reference/helpers/error_handler.md

+ - exceptions: hub/sdk/reference/helpers/exceptions.md

+ - logger: hub/sdk/reference/helpers/logger.md

+ - utils: hub/sdk/reference/helpers/utils.md

+ - hub_client: hub/sdk/reference/hub_client.md

+ - modules:

+ - datasets: hub/sdk/reference/modules/datasets.md

+ - models: hub/sdk/reference/modules/models.md

+ - projects: hub/sdk/reference/modules/projects.md

+ - teams: hub/sdk/reference/modules/teams.md

+ - users: hub/sdk/reference/modules/users.md

- REST API:

- hub/api/index.md

diff --git a/docs/mkdocs_github_authors.yaml b/docs/mkdocs_github_authors.yaml

new file mode 100644

index 00000000..fb94ba75

--- /dev/null

+++ b/docs/mkdocs_github_authors.yaml

@@ -0,0 +1,22 @@

+# Ultralytics YOLO 🚀, AGPL-3.0 license

+# Author list used in docs publication actions

+

+1579093407@qq.com: null

+17216799+ouphi@users.noreply.github.com: ouphi

+17316848+maianumerosky@users.noreply.github.com: maianumerosky

+34196005+fcakyon@users.noreply.github.com: fcakyon

+37276661+capjamesg@users.noreply.github.com: capjamesg

+39910262+ChaoningZhang@users.noreply.github.com: ChaoningZhang

+40165666+berry-ding@users.noreply.github.com: berry-ding

+47978446+sergiuwaxmann@users.noreply.github.com: sergiuwaxmann

+61612323+Laughing-q@users.noreply.github.com: Laughing-q

+62214284+Burhan-Q@users.noreply.github.com: Burhan-Q

+75611662+tensorturtle@users.noreply.github.com: tensorturtle

+abirami.vina@gmail.com: abirami-vina

+ayush.chaurarsia@gmail.com: AyushExel

+chr043416@gmail.com: null

+glenn.jocher@ultralytics.com: glenn-jocher

+muhammadrizwanmunawar123@gmail.com: RizwanMunawar

+not.committed.yet: null

+shuizhuyuanluo@126.com: null

+xinwang614@gmail.com: GreatV

diff --git a/ultralytics/cfg/__init__.py b/ultralytics/cfg/__init__.py

index 6038baf0..8a2de594 100644

--- a/ultralytics/cfg/__init__.py

+++ b/ultralytics/cfg/__init__.py

@@ -317,7 +317,7 @@ def merge_equals_args(args: List[str]) -> List[str]:

args (List[str]): A list of strings where each element is an argument.

Returns:

- List[str]: A list of strings where the arguments around isolated '=' are merged.

+ (List[str]): A list of strings where the arguments around isolated '=' are merged.

"""

new_args = []

for i, arg in enumerate(args):

diff --git a/ultralytics/data/dataset.py b/ultralytics/data/dataset.py

index aecfc2a4..4c4bcebf 100644

--- a/ultralytics/data/dataset.py

+++ b/ultralytics/data/dataset.py

@@ -46,7 +46,8 @@ class YOLODataset(BaseDataset):

Cache dataset labels, check images and read shapes.

Args:

- path (Path): path where to save the cache file (default: Path('./labels.cache')).

+ path (Path): Path where to save the cache file. Default is Path('./labels.cache').

+

Returns:

(dict): labels.

"""

@@ -178,9 +179,13 @@ class YOLODataset(BaseDataset):

self.transforms = self.build_transforms(hyp)

def update_labels_info(self, label):

- """Custom your label format here."""

- # NOTE: cls is not with bboxes now, classification and semantic segmentation need an independent cls label

- # We can make it also support classification and semantic segmentation by add or remove some dict keys there.

+ """

+ Custom your label format here.

+

+ Note:

+ cls is not with bboxes now, classification and semantic segmentation need an independent cls label

+ Can also support classification and semantic segmentation by adding or removing dict keys there.

+ """

bboxes = label.pop("bboxes")

segments = label.pop("segments", [])

keypoints = label.pop("keypoints", None)

diff --git a/ultralytics/engine/results.py b/ultralytics/engine/results.py

index 4faaeb99..5338fe68 100644

--- a/ultralytics/engine/results.py

+++ b/ultralytics/engine/results.py

@@ -667,8 +667,11 @@ class OBB(BaseTensor):

@property

@lru_cache(maxsize=2)

def xyxy(self):

- """Return the horizontal boxes in xyxy format, (N, 4)."""

- # This way to fit both torch and numpy version

+ """

+ Return the horizontal boxes in xyxy format, (N, 4).

+

+ Accepts both torch and numpy boxes.

+ """

x1 = self.xyxyxyxy[..., 0].min(1).values

x2 = self.xyxyxyxy[..., 0].max(1).values

y1 = self.xyxyxyxy[..., 1].min(1).values

diff --git a/ultralytics/engine/trainer.py b/ultralytics/engine/trainer.py

index 75b3ea3f..71ea5e84 100644

--- a/ultralytics/engine/trainer.py

+++ b/ultralytics/engine/trainer.py

@@ -563,8 +563,12 @@ class BaseTrainer:

raise NotImplementedError("build_dataset function not implemented in trainer")

def label_loss_items(self, loss_items=None, prefix="train"):

- """Returns a loss dict with labelled training loss items tensor."""

- # Not needed for classification but necessary for segmentation & detection

+ """

+ Returns a loss dict with labelled training loss items tensor.

+

+ Note:

+ This is not needed for classification but necessary for segmentation & detection

+ """

return {"loss": loss_items} if loss_items is not None else ["loss"]

def set_model_attributes(self):

diff --git a/ultralytics/hub/__init__.py b/ultralytics/hub/__init__.py

index 745d4a94..6c8afc6f 100644

--- a/ultralytics/hub/__init__.py

+++ b/ultralytics/hub/__init__.py

@@ -12,13 +12,16 @@ def login(api_key: str = None, save=True) -> bool:

"""

Log in to the Ultralytics HUB API using the provided API key.

- The session is not stored; a new session is created when needed using the saved SETTINGS or the HUB_API_KEY environment variable if successfully authenticated.

+ The session is not stored; a new session is created when needed using the saved SETTINGS or the HUB_API_KEY

+ environment variable if successfully authenticated.

Args:

- api_key (str, optional): The API key to use for authentication. If not provided, it will be retrieved from SETTINGS or HUB_API_KEY environment variable.

+ api_key (str, optional): API key to use for authentication.

+ If not provided, it will be retrieved from SETTINGS or HUB_API_KEY environment variable.

save (bool, optional): Whether to save the API key to SETTINGS if authentication is successful.

+

Returns:

- bool: True if authentication is successful, False otherwise.

+ (bool): True if authentication is successful, False otherwise.

"""

checks.check_requirements("hub-sdk>=0.0.2")

from hub_sdk import HUBClient

diff --git a/ultralytics/hub/auth.py b/ultralytics/hub/auth.py

index 17bb4986..340f5841 100644

--- a/ultralytics/hub/auth.py

+++ b/ultralytics/hub/auth.py

@@ -87,7 +87,7 @@ class Auth:

Attempt to authenticate with the server using either id_token or API key.

Returns:

- bool: True if authentication is successful, False otherwise.

+ (bool): True if authentication is successful, False otherwise.

"""

try:

if header := self.get_auth_header():

@@ -107,7 +107,7 @@ class Auth:

supported browser.

Returns:

- bool: True if authentication is successful, False otherwise.

+ (bool): True if authentication is successful, False otherwise.

"""

if not is_colab():

return False # Currently only works with Colab

diff --git a/ultralytics/hub/session.py b/ultralytics/hub/session.py

index 8a4d8c59..2d26b5a9 100644

--- a/ultralytics/hub/session.py

+++ b/ultralytics/hub/session.py

@@ -277,7 +277,7 @@ class HUBTrainingSession:

timeout: The maximum timeout duration.

Returns:

- str: The retry message.

+ (str): The retry message.

"""

if self._should_retry(response.status_code):

return f"Retrying {retry}x for {timeout}s." if retry else ""

@@ -341,7 +341,7 @@ class HUBTrainingSession:

response (requests.Response): The response object from the file download request.

Returns:

- (None)

+ None

"""

with TQDM(total=content_length, unit="B", unit_scale=True, unit_divisor=1024) as pbar:

for data in response.iter_content(chunk_size=1024):

diff --git a/ultralytics/models/sam/amg.py b/ultralytics/models/sam/amg.py

index c4bb6d1b..128108fe 100644

--- a/ultralytics/models/sam/amg.py

+++ b/ultralytics/models/sam/amg.py

@@ -35,9 +35,11 @@ def calculate_stability_score(masks: torch.Tensor, mask_threshold: float, thresh

The stability score is the IoU between the binary masks obtained by thresholding the predicted mask logits at high

and low values.

+

+ Notes:

+ - One mask is always contained inside the other.

+ - Save memory by preventing unnecessary cast to torch.int64

"""

- # One mask is always contained inside the other.

- # Save memory by preventing unnecessary cast to torch.int64

intersections = (masks > (mask_threshold + threshold_offset)).sum(-1, dtype=torch.int16).sum(-1, dtype=torch.int32)

unions = (masks > (mask_threshold - threshold_offset)).sum(-1, dtype=torch.int16).sum(-1, dtype=torch.int32)

return intersections / unions

diff --git a/ultralytics/models/yolo/segment/val.py b/ultralytics/models/yolo/segment/val.py

index 72c04b2c..94757c4e 100644

--- a/ultralytics/models/yolo/segment/val.py

+++ b/ultralytics/models/yolo/segment/val.py

@@ -215,8 +215,12 @@ class SegmentationValidator(DetectionValidator):

self.plot_masks.clear()

def pred_to_json(self, predn, filename, pred_masks):

- """Save one JSON result."""

- # Example result = {"image_id": 42, "category_id": 18, "bbox": [258.15, 41.29, 348.26, 243.78], "score": 0.236}

+ """

+ Save one JSON result.

+

+ Examples:

+ >>> result = {"image_id": 42, "category_id": 18, "bbox": [258.15, 41.29, 348.26, 243.78], "score": 0.236}

+ """

from pycocotools.mask import encode # noqa

def single_encode(x):

diff --git a/ultralytics/nn/autobackend.py b/ultralytics/nn/autobackend.py

index 8f55c3ba..3fafbbd9 100644

--- a/ultralytics/nn/autobackend.py

+++ b/ultralytics/nn/autobackend.py

@@ -508,9 +508,6 @@ class AutoBackend(nn.Module):

Args:

imgsz (tuple): The shape of the dummy input tensor in the format (batch_size, channels, height, width)

-

- Returns:

- (None): This method runs the forward pass and don't return any value

"""

warmup_types = self.pt, self.jit, self.onnx, self.engine, self.saved_model, self.pb, self.triton, self.nn_module

if any(warmup_types) and (self.device.type != "cpu" or self.triton):

@@ -521,13 +518,16 @@ class AutoBackend(nn.Module):

@staticmethod

def _model_type(p="path/to/model.pt"):

"""

- This function takes a path to a model file and returns the model type.

+ This function takes a path to a model file and returns flags indicating the model type. Possible types are pt, jit, onnx, xml,

+ engine, coreml, saved_model, pb, tflite, edgetpu, tfjs, ncnn or paddle.

Args:

p: path to the model file. Defaults to path/to/model.pt

+

+ Examples:

+ >>> types = AutoBackend._model_type("path/to/model.onnx")

+ >>> assert types[2]  # the ONNX flag (index 2 in the export suffix list) is set

"""

- # Return model type from model path, i.e. path='path/to/model.onnx' -> type=onnx

- # types = [pt, jit, onnx, xml, engine, coreml, saved_model, pb, tflite, edgetpu, tfjs, paddle]

from ultralytics.engine.exporter import export_formats

sf = list(export_formats().Suffix) # export suffixes
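`_model_type` matches the file name against the export suffixes. In the Ultralytics implementation this matching yields one boolean flag per export format rather than a single type string. Here is a sketch of that suffix matching using a small hypothetical suffix table. The real table comes from `export_formats().Suffix` and has more entries, and `model_type_flags` is an illustrative name.

```python
from pathlib import Path

# Hypothetical subset of export suffixes; the real list is export_formats().Suffix
SUFFIXES = {
    "pt": ".pt",
    "onnx": ".onnx",
    "engine": ".engine",
    "tflite": ".tflite",
    "edgetpu": "_edgetpu.tflite",
}


def model_type_flags(p="path/to/model.pt"):
    """Illustrative sketch: return a {format: bool} dict by substring-matching suffixes."""
    name = Path(p).name
    flags = {fmt: suffix in name for fmt, suffix in SUFFIXES.items()}
    # An Edge TPU export also contains ".tflite", so clear the plain TFLite flag for it
    flags["tflite"] = flags["tflite"] and not flags["edgetpu"]
    return flags


print(model_type_flags("path/to/model.onnx"))
```

Substring matching is simple, but overlapping suffixes need explicit disambiguation, as the Edge TPU case shows.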

diff --git a/ultralytics/solutions/object_counter.py b/ultralytics/solutions/object_counter.py

index 98231c3c..e06c5a25 100644

--- a/ultralytics/solutions/object_counter.py

+++ b/ultralytics/solutions/object_counter.py

@@ -136,7 +136,6 @@ class ObjectCounter:

cv2.EVENT_FLAG_SHIFTKEY, etc.).

params (dict): Additional parameters you may want to pass to the function.

"""

- # global is_drawing, selected_point

if event == cv2.EVENT_LBUTTONDOWN:

for i, point in enumerate(self.reg_pts):

if (

diff --git a/ultralytics/utils/__init__.py b/ultralytics/utils/__init__.py

index 07641c65..d8cfccaa 100644

--- a/ultralytics/utils/__init__.py

+++ b/ultralytics/utils/__init__.py

@@ -116,8 +116,11 @@ class TQDM(tqdm_original):

"""

def __init__(self, *args, **kwargs):

- """Initialize custom Ultralytics tqdm class with different default arguments."""

- # Set new default values (these can still be overridden when calling TQDM)

+ """

+ Initialize custom Ultralytics tqdm class with different default arguments.

+

+ Note that these default values can still be overridden when calling TQDM.

+ """

kwargs["disable"] = not VERBOSE or kwargs.get("disable", False) # logical 'and' with default value if passed

kwargs.setdefault("bar_format", TQDM_BAR_FORMAT) # override default value if passed

super().__init__(*args, **kwargs)
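The TQDM initializer above applies its defaults under two different rules. `disable` is combined with the global `VERBOSE` flag, so a caller can only turn the bar off. `bar_format` uses `setdefault`, so any caller-supplied value wins. The sketch below reproduces only that keyword-merging logic. The `verbose` argument and the format string are hypothetical stand-ins for `VERBOSE` and `TQDM_BAR_FORMAT`.

```python
# Hypothetical stand-in for TQDM_BAR_FORMAT
DEFAULT_BAR_FORMAT = "{l_bar}{bar:10}{r_bar}"


def merge_tqdm_kwargs(verbose=True, **kwargs):
    """Illustrative sketch of how the TQDM defaults are merged with caller kwargs."""
    # Disabled if verbosity is off OR the caller asked for it
    kwargs["disable"] = not verbose or kwargs.get("disable", False)
    # Only fills in bar_format when the caller did not pass one
    kwargs.setdefault("bar_format", DEFAULT_BAR_FORMAT)
    return kwargs


print(merge_tqdm_kwargs(bar_format="{n}/{total}"))
```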

@@ -377,7 +380,7 @@ def yaml_print(yaml_file: Union[str, Path, dict]) -> None:

yaml_file: The file path of the YAML file or a YAML-formatted dictionary.

Returns:

- None

+ (None)

"""

yaml_dict = yaml_load(yaml_file) if isinstance(yaml_file, (str, Path)) else yaml_file

dump = yaml.dump(yaml_dict, sort_keys=False, allow_unicode=True)

@@ -610,7 +613,7 @@ def get_ubuntu_version():

def get_user_config_dir(sub_dir="Ultralytics"):

"""

- Get the user config directory.

+ Return the appropriate config directory for the current operating system.

Args:

sub_dir (str): The name of the subdirectory to create.

@@ -618,7 +621,6 @@ def get_user_config_dir(sub_dir="Ultralytics"):

Returns:

(Path): The path to the user config directory.

"""

- # Return the appropriate config directory for each operating system

if WINDOWS:

path = Path.home() / "AppData" / "Roaming" / sub_dir

elif MACOS: # macOS
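The hunk above cuts off after the Windows branch and the start of the macOS branch. The sketch below shows a per-OS lookup under some stated assumptions. The macOS and Linux locations (`~/Library/Application Support` and `~/.config`) are assumed from common platform conventions. The `system` and `home` parameters are hypothetical additions that make the function testable. Unlike a real config helper, this sketch does not create the directory.

```python
import platform
from pathlib import Path


def user_config_dir(sub_dir="Ultralytics", system=None, home=None):
    """Illustrative sketch: pick a per-OS config directory without creating it."""
    system = system or platform.system()  # "Windows", "Darwin", "Linux", ...
    home = Path(home) if home else Path.home()
    if system == "Windows":
        return home / "AppData" / "Roaming" / sub_dir
    if system == "Darwin":  # macOS (assumed location)
        return home / "Library" / "Application Support" / sub_dir
    return home / ".config" / sub_dir  # Linux and others (assumed location)


print(user_config_dir())
```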

diff --git a/ultralytics/utils/benchmarks.py b/ultralytics/utils/benchmarks.py

index dbb95183..6a3b1762 100644

--- a/ultralytics/utils/benchmarks.py

+++ b/ultralytics/utils/benchmarks.py

@@ -258,8 +258,7 @@ class ProfileModels:

"""Retrieves the information including number of layers, parameters, gradients and FLOPs for an ONNX model

file.

"""

- # return (num_layers, num_params, num_gradients, num_flops)

- return 0.0, 0.0, 0.0, 0.0

+ return 0.0, 0.0, 0.0, 0.0 # return (num_layers, num_params, num_gradients, num_flops)

def iterative_sigma_clipping(self, data, sigma=2, max_iters=3):

"""Applies an iterative sigma clipping algorithm to the given data times number of iterations."""
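The docstring only names the technique. Iterative sigma clipping repeatedly drops values outside `mean ± sigma * std` and stops once nothing changes or `max_iters` passes are done. The pure-Python sketch below follows that reading. The early exits for empty input, zero spread, or an emptied sample are assumptions added for robustness.

```python
import statistics


def iterative_sigma_clipping(data, sigma=2, max_iters=3):
    """Illustrative sketch: keep values within mean ± sigma * std, for up to max_iters passes."""
    data = list(data)
    if not data:
        return data
    for _ in range(max_iters):
        mean, std = statistics.mean(data), statistics.pstdev(data)
        if std == 0:  # all values equal, nothing left to clip
            break
        kept = [x for x in data if mean - sigma * std < x < mean + sigma * std]
        if not kept or len(kept) == len(data):  # emptied or converged
            break
        data = kept
    return data


print(iterative_sigma_clipping([10] * 9 + [100]))
```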

diff --git a/ultralytics/utils/checks.py b/ultralytics/utils/checks.py

index ea4f757d..0931f4cf 100644

--- a/ultralytics/utils/checks.py

+++ b/ultralytics/utils/checks.py

@@ -109,7 +109,7 @@ def is_ascii(s) -> bool:

s (str): String to be checked.

Returns:

- bool: True if the string is composed only of ASCII characters, False otherwise.

+ (bool): True if the string is composed only of ASCII characters, False otherwise.

"""

# Convert list, tuple, None, etc. to string

s = str(s)
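The context above shows the first step of `is_ascii`, which coerces the input with `str(s)` so that lists, tuples, and `None` can be checked too. The minimal sketch below completes the check, assuming the remaining step tests every code point against 128. The name `is_ascii_sketch` is illustrative.

```python
def is_ascii_sketch(s) -> bool:
    """Illustrative sketch: True if every character of str(s) is ASCII."""
    s = str(s)  # convert list, tuple, None, etc. to string
    return all(ord(c) < 128 for c in s)


print(is_ascii_sketch("yolo"), is_ascii_sketch("résumé"))
```

On Python 3.7+, `str(s).isascii()` performs the same test.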

@@ -327,7 +327,7 @@ def check_python(minimum: str = "3.8.0") -> bool:

minimum (str): Required minimum version of python.

Returns:

- None

+ (bool): Whether the installed Python version meets the minimum required version.

"""

return check_version(platform.python_version(), minimum, name="Python ", hard=True)

diff --git a/ultralytics/utils/loss.py b/ultralytics/utils/loss.py

index af3d240f..28dee2ef 100644

--- a/ultralytics/utils/loss.py

+++ b/ultralytics/utils/loss.py

@@ -87,8 +87,12 @@ class BboxLoss(nn.Module):

@staticmethod

def _df_loss(pred_dist, target):

- """Return sum of left and right DFL losses."""

- # Distribution Focal Loss (DFL) proposed in Generalized Focal Loss https://ieeexplore.ieee.org/document/9792391

+ """

+ Return sum of left and right DFL losses.

+

+ Distribution Focal Loss (DFL) proposed in Generalized Focal Loss

+ https://ieeexplore.ieee.org/document/9792391

+ """

tl = target.long() # target left

tr = tl + 1 # target right

wl = tr - target # weight left
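DFL treats a continuous regression target `t` between two integer bins `tl` and `tl + 1` as a soft label. The loss is the cross-entropy against the left bin, weighted by `wl = (tl + 1) - t`, plus the cross-entropy against the right bin, weighted by the remaining `1 - wl`. Below is a pure-Python sketch for a single distribution. It assumes `0 <= t < len(logits) - 1` so that both bins exist, and the function name is hypothetical.

```python
import math


def dfl_single(logits, target):
    """Illustrative sketch: Distribution Focal Loss for one bin distribution."""
    tl = int(target)  # left bin (equals floor for non-negative targets)
    tr = tl + 1  # right bin
    wl = tr - target  # weight of the left bin
    wr = 1 - wl  # weight of the right bin
    # Numerically stable log-sum-exp for the softmax normalizer
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))

    def cross_entropy(i):
        return log_z - logits[i]  # -log(softmax(logits)[i])

    return wl * cross_entropy(tl) + wr * cross_entropy(tr)


print(dfl_single([0.0, 2.0, 2.0, 0.0], 1.5))
```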

@@ -696,6 +700,7 @@ class v8OBBLoss(v8DetectionLoss):

anchor_points (torch.Tensor): Anchor points, (h*w, 2).

pred_dist (torch.Tensor): Predicted rotated distance, (bs, h*w, 4).

pred_angle (torch.Tensor): Predicted angle, (bs, h*w, 1).

+

Returns:

(torch.Tensor): Predicted rotated bounding boxes with angles, (bs, h*w, 5).

"""

diff --git a/ultralytics/utils/metrics.py b/ultralytics/utils/metrics.py

index f10ae329..7a3e9d32 100644

--- a/ultralytics/utils/metrics.py

+++ b/ultralytics/utils/metrics.py

@@ -180,7 +180,7 @@ def _get_covariance_matrix(boxes):

Returns:

(torch.Tensor): Covariance metrixs corresponding to original rotated bounding boxes.

"""

- # Gaussian bounding boxes, ignored the center points(the first two columns) cause it's not needed here.

+ # Gaussian bounding boxes, ignore the center points (the first two columns) because they are not needed here.

gbbs = torch.cat((torch.pow(boxes[:, 2:4], 2) / 12, boxes[:, 4:]), dim=-1)

a, b, c = gbbs.split(1, dim=-1)

return (
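The comment above notes that only the width, height, and angle columns matter. Treat a rotated box as a uniform distribution over a `w x h` rectangle. This gives variances `w^2 / 12` and `h^2 / 12` along the box axes, matching `torch.pow(boxes[:, 2:4], 2) / 12`. Rotating that diagonal covariance by the box angle yields the three distinct entries of the 2x2 covariance matrix. Below is a per-box pure-Python sketch; the function name and single-box signature are illustrative.

```python
import math


def box_covariance(w, h, angle):
    """Illustrative sketch: covariance entries (var_x, var_y, cov_xy) of a rotated box as a Gaussian."""
    a, b = w**2 / 12, h**2 / 12  # variances along the box's own axes
    cos, sin = math.cos(angle), math.sin(angle)
    # Entries of R @ diag(a, b) @ R.T with R = [[cos, -sin], [sin, cos]]
    var_x = a * cos**2 + b * sin**2
    var_y = a * sin**2 + b * cos**2
    cov_xy = (a - b) * cos * sin
    return var_x, var_y, cov_xy


print(box_covariance(6.0, 12.0, 0.0))
```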

diff --git a/ultralytics/utils/ops.py b/ultralytics/utils/ops.py

index 5f02dabc..c569a3cb 100644

--- a/ultralytics/utils/ops.py

+++ b/ultralytics/utils/ops.py

@@ -75,7 +75,6 @@ def segment2box(segment, width=640, height=640):

Returns:

(np.ndarray): the minimum and maximum x and y values of the segment.

"""

- # Convert 1 segment label to 1 box label, applying inside-image constraint, i.e. (xy1, xy2, ...) to (xyxy)

x, y = segment.T # segment xy

inside = (x >= 0) & (y >= 0) & (x <= width) & (y <= height)

x = x[inside]
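The comment removed in this hunk summarized `segment2box`: it converts one segment label `(xy1, xy2, ...)` into one `xyxy` box, keeping only points inside the image. The pure-Python sketch below follows that behavior, assuming points arrive as `(x, y)` pairs. It also maps a segment with no inside points to an all-zero box. That fallback is an assumption, because the hunk is cut off before the return statement.

```python
def segment2box_sketch(segment, width=640, height=640):
    """Illustrative sketch: xyxy box of the segment points that lie inside the image."""
    inside = [(x, y) for x, y in segment if 0 <= x <= width and 0 <= y <= height]
    if not inside:
        return [0.0, 0.0, 0.0, 0.0]  # assumed fallback for fully outside segments
    xs, ys = zip(*inside)
    return [min(xs), min(ys), max(xs), max(ys)]


print(segment2box_sketch([(10, 20), (30, 5), (700, 50)]))
```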

diff --git a/ultralytics/utils/plotting.py b/ultralytics/utils/plotting.py

index 3028ed14..629de701 100644

--- a/ultralytics/utils/plotting.py

+++ b/ultralytics/utils/plotting.py

@@ -388,6 +388,7 @@ class Annotator:

a (float) : The value of pose point a

b (float): The value of pose point b

c (float): The value o pose point c

+

Returns:

angle (degree): Degree value of angle between three points

"""
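The docstring describes the angle at pose point `b` formed with points `a` and `c`. One common way to compute it is to take the difference of the two `atan2` directions measured from `b` and fold the result into `[0, 180]` degrees. The sketch below assumes that approach and 2-D `(x, y)` points; the function name is hypothetical.

```python
import math


def pose_angle(a, b, c):
    """Illustrative sketch: angle in degrees at point b between rays b->a and b->c."""
    radians = math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    angle = abs(math.degrees(radians))
    return 360.0 - angle if angle > 180.0 else angle


print(pose_angle((1, 0), (0, 0), (0, 1)))
```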

diff --git a/ultralytics/utils/triton.py b/ultralytics/utils/triton.py

index 98c4be20..3f873a6f 100644

--- a/ultralytics/utils/triton.py

+++ b/ultralytics/utils/triton.py

@@ -75,7 +75,7 @@ class TritonRemoteModel:

*inputs (List[np.ndarray]): Input data to the model.

Returns:

- List[np.ndarray]: Model outputs.

+ (List[np.ndarray]): Model outputs.

"""

infer_inputs = []

input_format = inputs[0].dtype

diff --git a/ultralytics/utils/tuner.py b/ultralytics/utils/tuner.py

index db7d4907..20e3b756 100644

--- a/ultralytics/utils/tuner.py

+++ b/ultralytics/utils/tuner.py

@@ -94,7 +94,7 @@ def run_ray_tune(

config (dict): A dictionary of hyperparameters to use for training.

Returns:

- None.

+ None

"""

model_to_train = ray.get(model_in_store) # get the model from ray store for tuning

model_to_train.reset_callbacks()

+

+