mirror of

https://github.com/THU-MIG/yolov10.git

synced 2025-12-06 16:06:12 +08:00

Add instance segmentation and vision-eye mapping in Docs + Fix minor code bug in other real-world-projects (#6972)

Co-authored-by: pre-commit-ci[bot] <66853113+pre-commit-ci[bot]@users.noreply.github.com> Co-authored-by: Glenn Jocher <glenn.jocher@ultralytics.com>


parent

e9def85f1f

commit

34b10b2db3

@ -42,17 +42,43 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

|

||||

# Heatmap Init

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_CIVIDIS,

|

||||

imw=cap.get(4), # should same as im0 width

|

||||

imh=cap.get(3), # should same as im0 height

|

||||

imw=cap.get(4), # should same as cap width

|

||||

imh=cap.get(3), # should same as cap height

|

||||

view_img=True)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

exit(0)

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

|

||||

results = model.track(im0, persist=True)

|

||||

im0 = heatmap_obj.generate_heatmap(im0, tracks=results)

|

||||

|

||||

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

=== "Heatmap with im0"

|

||||

```python

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.solutions import heatmap

|

||||

import cv2

|

||||

|

||||

model = YOLO("yolov8s.pt") # YOLOv8 custom/pretrained model

|

||||

|

||||

im0 = cv2.imread("path/to/image.png") # path to image file

|

||||

|

||||

# Heatmap Init

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_JET,

|

||||

imw=im0.shape[0], # should same as im0 width

|

||||

imh=im0.shape[1], # should same as im0 height

|

||||

view_img=True)

|

||||

|

||||

|

||||

results = model.track(im0, persist=True)

|

||||

im0 = heatmap_obj.generate_heatmap(im0, tracks=results)

|

||||

cv2.imwrite("ultralytics_output.png", im0)

|

||||

```
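The `cap.get(3)` / `cap.get(4)` and `im0.shape[0]` / `im0.shape[1]` arguments above are easy to mix up. In OpenCV, property index 3 is `CAP_PROP_FRAME_WIDTH` and index 4 is `CAP_PROP_FRAME_HEIGHT`, while a NumPy image's `shape` is `(height, width, channels)`. The sketch below uses a hypothetical `FakeCapture` stand-in (not part of OpenCV or Ultralytics) so that it runs without a video file. It only illustrates the index conventions; whether `set_args` in this revision expects the two values in swapped order is not stated in the diff.

```python
# Property indices as defined by OpenCV (cv2.CAP_PROP_FRAME_WIDTH == 3,
# cv2.CAP_PROP_FRAME_HEIGHT == 4); redefined here so the sketch needs no cv2.
CAP_PROP_FRAME_WIDTH = 3
CAP_PROP_FRAME_HEIGHT = 4


class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture, for illustration only."""

    def __init__(self, width, height):
        self._props = {
            CAP_PROP_FRAME_WIDTH: float(width),
            CAP_PROP_FRAME_HEIGHT: float(height),
        }

    def get(self, prop_id):
        # Like cv2.VideoCapture.get, this returns the property as a float.
        return self._props.get(prop_id, 0.0)


def frame_size(cap):
    """Return (width, height) as ints using named property indices."""
    return int(cap.get(CAP_PROP_FRAME_WIDTH)), int(cap.get(CAP_PROP_FRAME_HEIGHT))


def image_size(shape):
    """Return (width, height) from a NumPy-style (height, width, channels) shape."""
    height, width = shape[:2]
    return width, height


cap = FakeCapture(width=1280, height=720)
print(frame_size(cap))             # (1280, 720)
print(image_size((720, 1280, 3)))  # (1280, 720)
```

Using the named constants rather than bare `3` and `4` makes it harder to pass a height where a width is expected.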

|

||||

|

||||

=== "Heatmap with Specific Classes"

|

||||

@ -70,17 +96,19 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

|

||||

# Heatmap init

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_CIVIDIS,

|

||||

imw=cap.get(4), # should same as im0 width

|

||||

imh=cap.get(3), # should same as im0 height

|

||||

imw=cap.get(4), # should same as cap width

|

||||

imh=cap.get(3), # should same as cap height

|

||||

view_img=True)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

exit(0)

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

results = model.track(im0, persist=True, classes=classes_for_heatmap)

|

||||

im0 = heatmap_obj.generate_heatmap(im0, tracks=results)

|

||||

|

||||

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

=== "Heatmap with Save Output"

|

||||

@ -102,18 +130,21 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

|

||||

# Heatmap init

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_CIVIDIS,

|

||||

imw=cap.get(4), # should same as im0 width

|

||||

imh=cap.get(3), # should same as im0 height

|

||||

imw=cap.get(4), # should same as cap width

|

||||

imh=cap.get(3), # should same as cap height

|

||||

view_img=True)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

exit(0)

|

||||

results = model.track(im0, persist=True, classes=classes_for_heatmap)

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

results = model.track(im0, persist=True)

|

||||

im0 = heatmap_obj.generate_heatmap(im0, tracks=results)

|

||||

video_writer.write(im0)

|

||||

|

||||

video_writer.release()

|

||||

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

=== "Heatmap with Object Counting"

|

||||

@ -133,17 +164,21 @@ A heatmap generated with [Ultralytics YOLOv8](https://github.com/ultralytics/ult

|

||||

# Heatmap Init

|

||||

heatmap_obj = heatmap.Heatmap()

|

||||

heatmap_obj.set_args(colormap=cv2.COLORMAP_JET,

|

||||

imw=cap.get(4), # should same as im0 width

|

||||

imh=cap.get(3), # should same as im0 height

|

||||

imw=cap.get(4), # should same as cap width

|

||||

imh=cap.get(3), # should same as cap height

|

||||

view_img=True,

|

||||

count_reg_pts=count_reg_pts)

|

||||

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

exit(0)

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

results = model.track(im0, persist=True)

|

||||

im0 = heatmap_obj.generate_heatmap(im0, tracks=results)

|

||||

|

||||

cv2.destroyAllWindows()

|

||||

|

||||

```

|

||||

|

||||

### Arguments `set_args`

|

||||

|

||||

@ -35,6 +35,8 @@ Here's a compilation of in-depth guides to help you master different aspects of

|

||||

* [Objects Counting in Regions](region-counting.md) 🚀 NEW: Explore counting objects in specific regions with Ultralytics YOLOv8 for precise and efficient object detection in varied areas.

|

||||

* [Security Alarm System](security-alarm-system.md) 🚀 NEW: Discover the process of creating a security alarm system with Ultralytics YOLOv8. This system triggers alerts upon detecting new objects in the frame. Subsequently, you can customize the code to align with your specific use case.

|

||||

* [Heatmaps](heatmaps.md) 🚀 NEW: Elevate your understanding of data with our Detection Heatmaps! These intuitive visual tools use vibrant color gradients to vividly illustrate the intensity of data values across a matrix. Essential in computer vision, heatmaps are skillfully designed to highlight areas of interest, providing an immediate, impactful way to interpret spatial information.

|

||||

* [Instance Segmentation with Object Tracking](instance-segmentation-and-tracking.md) 🚀 NEW: Explore object segmentation drawn in bounding-box shape, which provides a visual representation of precise object boundaries for enhanced understanding and analysis.

|

||||

* [VisionEye View Objects Mapping](vision-eye.md) 🚀 NEW: This feature enables computers to discern and focus on specific objects, much like the way the human eye observes details from a particular viewpoint.

|

||||

|

||||

## Contribute to Our Guides

|

||||

|

||||

|

||||

127

docs/en/guides/instance-segmentation-and-tracking.md

Normal file


@ -0,0 +1,127 @@

|

||||

---

|

||||

comments: true

|

||||

description: Instance Segmentation with Object Tracking using Ultralytics YOLOv8

|

||||

keywords: Ultralytics, YOLOv8, Instance Segmentation, Object Detection, Object Tracking, Segbbox, Computer Vision, Notebook, IPython Kernel, CLI, Python SDK

|

||||

---

|

||||

|

||||

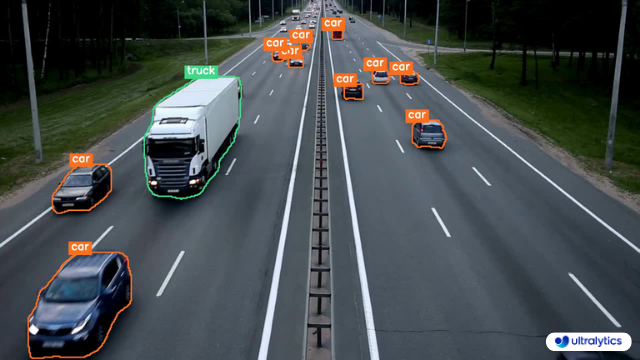

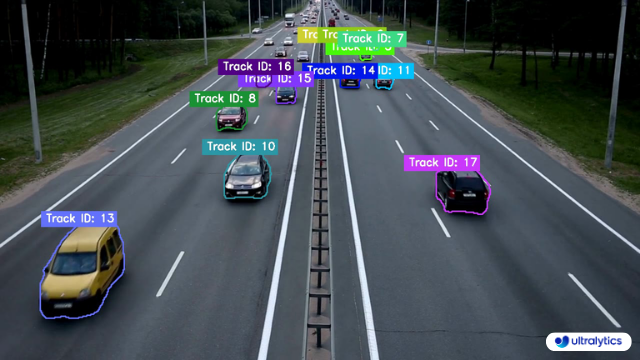

# Instance Segmentation and Tracking using Ultralytics YOLOv8 🚀

|

||||

|

||||

## What is Instance Segmentation?

|

||||

|

||||

[Ultralytics YOLOv8](https://github.com/ultralytics/ultralytics/) instance segmentation involves identifying and outlining individual objects in an image, providing a detailed understanding of spatial distribution. Unlike semantic segmentation, it labels each object instance separately and delineates its boundary, which is crucial for tasks like object detection and medical imaging.

|

||||

Two types of instance segmentation are available with Ultralytics YOLOv8:

|

||||

|

||||

- **Instance Segmentation with Class Objects:** Each class object is assigned a unique color for clear visual separation.

|

||||

|

||||

- **Instance Segmentation with Object Tracks:** Every track is represented by a distinct color, facilitating easy identification and tracking.

|

||||

|

||||

## Samples

|

||||

|

||||

| Instance Segmentation | Instance Segmentation + Object Tracking |

|

||||

|:---------------------------------------------------------------------------------------------------------------------------------------:|:------------------------------------------------------------------------------------------------------------------------------------------------------------:|

|

||||

|  |  |

|

||||

| Ultralytics Instance Segmentation 😍 | Ultralytics Instance Segmentation with Object Tracking 🔥 |

|

||||

|

||||

|

||||

!!! Example "Instance Segmentation and Tracking"

|

||||

|

||||

=== "Instance Segmentation"

|

||||

```python

|

||||

import cv2

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.utils.plotting import Annotator, colors

|

||||

|

||||

model = YOLO("yolov8n-seg.pt")

|

||||

names = model.model.names

|

||||

cap = cv2.VideoCapture("path/to/video/file.mp4")

|

||||

|

||||

out = cv2.VideoWriter('instance-segmentation.avi',

|

||||

cv2.VideoWriter_fourcc(*'MJPG'),

|

||||

30, (int(cap.get(3)), int(cap.get(4))))

|

||||

|

||||

while True:

|

||||

ret, im0 = cap.read()

|

||||

if not ret:

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

|

||||

results = model.predict(im0)

|

||||

clss = results[0].boxes.cls.cpu().tolist()

|

||||

masks = results[0].masks.xy

|

||||

|

||||

annotator = Annotator(im0, line_width=2)

|

||||

|

||||

for mask, cls in zip(masks, clss):

|

||||

annotator.seg_bbox(mask=mask,

|

||||

mask_color=colors(int(cls), True),

|

||||

det_label=names[int(cls)])

|

||||

|

||||

out.write(im0)

|

||||

cv2.imshow("instance-segmentation", im0)

|

||||

|

||||

if cv2.waitKey(1) & 0xFF == ord('q'):

|

||||

break

|

||||

|

||||

out.release()

|

||||

cap.release()

|

||||

cv2.destroyAllWindows()

|

||||

|

||||

```

|

||||

|

||||

=== "Instance Segmentation with Object Tracking"

|

||||

```python

|

||||

import cv2

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.utils.plotting import Annotator, colors

|

||||

|

||||

from collections import defaultdict

|

||||

|

||||

track_history = defaultdict(lambda: [])

|

||||

|

||||

model = YOLO("yolov8n-seg.pt")

|

||||

cap = cv2.VideoCapture("path/to/video/file.mp4")

|

||||

|

||||

out = cv2.VideoWriter('instance-segmentation-object-tracking.avi',

|

||||

cv2.VideoWriter_fourcc(*'MJPG'),

|

||||

30, (int(cap.get(3)), int(cap.get(4))))

|

||||

|

||||

while True:

|

||||

ret, im0 = cap.read()

|

||||

if not ret:

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

|

||||

results = model.track(im0, persist=True)

|

||||

masks = results[0].masks.xy

|

||||

track_ids = results[0].boxes.id.int().cpu().tolist()

|

||||

|

||||

annotator = Annotator(im0, line_width=2)

|

||||

|

||||

for mask, track_id in zip(masks, track_ids):

|

||||

annotator.seg_bbox(mask=mask,

|

||||

mask_color=colors(track_id, True),

|

||||

track_label=str(track_id))

|

||||

|

||||

out.write(im0)

|

||||

cv2.imshow("instance-segmentation-object-tracking", im0)

|

||||

|

||||

if cv2.waitKey(1) & 0xFF == ord('q'):

|

||||

break

|

||||

|

||||

out.release()

|

||||

cap.release()

|

||||

cv2.destroyAllWindows()

|

||||

```
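When a frame contains no detections or no confirmed tracks, `results[0].masks` and `results[0].boxes.id` can be `None`, so chaining `.xy` or `.int()` on them as in the examples above would raise an `AttributeError`. A minimal sketch of a guard follows, using `SimpleNamespace` objects as hypothetical stand-ins for the Ultralytics result objects so that it runs without a model. The helper name `masks_and_ids` is illustrative and not part of the library.

```python
from types import SimpleNamespace


def masks_and_ids(result):
    """Return (masks, track_ids), or two empty lists if either is missing."""
    if result.masks is None or result.boxes.id is None:
        return [], []
    # With real results, the ids would be converted as in the example above:
    # result.boxes.id.int().cpu().tolist()
    return result.masks.xy, result.boxes.id


# Stand-in for a frame with no tracked objects.
empty = SimpleNamespace(masks=None, boxes=SimpleNamespace(id=None))
# Stand-in for a frame with one tracked object (values are made up).
one = SimpleNamespace(
    masks=SimpleNamespace(xy=[[(0, 0), (4, 0), (4, 3)]]),
    boxes=SimpleNamespace(id=[7]),
)

for frame in (empty, one):
    masks, track_ids = masks_and_ids(frame)
    for mask, track_id in zip(masks, track_ids):
        print(track_id, len(mask))  # prints "7 3" for the second frame only
```

Returning empty lists lets the annotation loop run zero times on such frames, so the frame is still written and displayed.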

|

||||

|

||||

### `seg_bbox` Arguments

|

||||

|

||||

| Name | Type | Default | Description |

|

||||

|---------------|---------|-----------------|----------------------------------------|

|

||||

| `mask` | `array` | `None` | Segmentation mask coordinates |

|

||||

| `mask_color` | `tuple` | `(255, 0, 255)` | Mask color for every segmented box |

|

||||

| `det_label` | `str` | `None` | Label for segmented object |

|

||||

| `track_label` | `str` | `None` | Label for segmented and tracked object |
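As a rough sketch of how `det_label` and `track_label` interact, based on the `seg_bbox` implementation added in this commit: a truthy `track_label` takes precedence and is shown as `Track ID: <label>`, otherwise `det_label` is used. The helper names `pick_label` and `label_box` below are illustrative and not part of the library; `label_box` mirrors the rectangle arithmetic and takes the text size as an input rather than calling `cv2.getTextSize`.

```python
def pick_label(det_label=None, track_label=None):
    """Mirror the label choice in seg_bbox: a truthy track label takes precedence."""
    return f"Track ID: {track_label}" if track_label else det_label


def label_box(first_point, text_size):
    """Corners of the filled label background, anchored at the mask's first point."""
    x, y = int(first_point[0]), int(first_point[1])
    text_w, text_h = text_size
    top_left = (x - text_w // 2 - 10, y - text_h - 10)
    bottom_right = (x + text_w // 2 + 5, y + 5)
    return top_left, bottom_right


print(pick_label(det_label="person"))                   # person
print(pick_label(det_label="person", track_label="3"))  # Track ID: 3
print(label_box((100.0, 50.0), (60, 12)))               # ((60, 28), (135, 55))
```

If neither label is given, the chosen label is `None`, which the text-drawing calls are unlikely to accept, so callers should pass at least one of the two.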

|

||||

|

||||

## Note

|

||||

|

||||

For any inquiries, feel free to post your questions in the [Ultralytics Issue Section](https://github.com/ultralytics/ultralytics/issues/new/choose) or the discussion section mentioned below.

|

||||

@ -10,6 +10,17 @@ keywords: Ultralytics, YOLOv8, Object Detection, Object Counting, Object Trackin

|

||||

|

||||

Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultralytics/) involves accurate identification and counting of specific objects in videos and camera streams. YOLOv8 excels in real-time applications, providing efficient and precise object counting for various scenarios like crowd analysis and surveillance, thanks to its state-of-the-art algorithms and deep learning capabilities.

|

||||

|

||||

<p align="center">

|

||||

<br>

|

||||

<iframe width="720" height="405" src="https://www.youtube.com/embed/Ag2e-5_NpS0"

|

||||

title="YouTube video player" frameborder="0"

|

||||

allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"

|

||||

allowfullscreen>

|

||||

</iframe>

|

||||

<br>

|

||||

<strong>Watch:</strong> Object Counting using Ultralytics YOLOv8

|

||||

</p>

|

||||

|

||||

## Advantages of Object Counting?

|

||||

|

||||

- **Resource Optimization:** Object counting facilitates efficient resource management by providing accurate counts, and optimizing resource allocation in applications like inventory management.

|

||||

@ -45,9 +56,12 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

exit(0)

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

tracks = model.track(im0, persist=True, show=False)

|

||||

im0 = counter.start_counting(im0, tracks)

|

||||

|

||||

cv2.destroyAllWindows()

|

||||

```
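Replacing `exit(0)` with a message and `break` means control reaches the cleanup calls after the loop (for example `cv2.destroyAllWindows()`, or `video_writer.release()` in the save-output variants) instead of terminating the interpreter inside the loop. A minimal sketch of that control flow follows, using a hypothetical `FakeCapture` class in place of `cv2.VideoCapture` so that it runs without OpenCV.

```python
class FakeCapture:
    """Hypothetical stand-in for cv2.VideoCapture that yields a fixed number of frames."""

    def __init__(self, num_frames):
        self.remaining = num_frames
        self.released = False

    def isOpened(self):
        return not self.released

    def read(self):
        if self.remaining == 0:
            return False, None  # same (success, frame) shape as cv2
        self.remaining -= 1
        return True, "frame"

    def release(self):
        self.released = True


def process(cap):
    """Read until the stream ends, then run cleanup; return the number of frames handled."""
    handled = 0
    while cap.isOpened():
        success, im0 = cap.read()
        if not success:
            print("Video frame is empty or video processing has been successfully completed.")
            break  # fall through to cleanup instead of exiting the interpreter
        handled += 1
    cap.release()  # reached because the loop uses break rather than exit(0)
    return handled


cap = FakeCapture(num_frames=3)
print(process(cap), cap.released)  # 3 True
```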

|

||||

|

||||

=== "Object Counting with Specific Classes"

|

||||

@ -71,11 +85,13 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

exit(0)

|

||||

tracks = model.track(im0, persist=True,

|

||||

show=False,

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

tracks = model.track(im0, persist=True, show=False,

|

||||

classes=classes_to_count)

|

||||

im0 = counter.start_counting(im0, tracks)

|

||||

|

||||

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

=== "Object Counting with Save Output"

|

||||

@ -103,12 +119,14 @@ Object counting with [Ultralytics YOLOv8](https://github.com/ultralytics/ultraly

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

exit(0)

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

tracks = model.track(im0, persist=True, show=False)

|

||||

im0 = counter.start_counting(im0, tracks)

|

||||

video_writer.write(im0)

|

||||

|

||||

video_writer.release()

|

||||

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

???+ tip "Region is Movable"

|

||||

|

||||

127

docs/en/guides/vision-eye.md

Normal file


@ -0,0 +1,127 @@

|

||||

---

|

||||

comments: true

|

||||

description: VisionEye View Object Mapping using Ultralytics YOLOv8

|

||||

keywords: Ultralytics, YOLOv8, Object Detection, Object Tracking, IDetection, VisionEye, Computer Vision, Notebook, IPython Kernel, CLI, Python SDK

|

||||

---

|

||||

|

||||

# VisionEye View Object Mapping using Ultralytics YOLOv8 🚀

|

||||

|

||||

## What is VisionEye Object Mapping?

|

||||

|

||||

[Ultralytics YOLOv8](https://github.com/ultralytics/ultralytics/) VisionEye offers the capability for computers to identify and pinpoint objects, simulating the observational precision of the human eye. This functionality enables computers to discern and focus on specific objects, much like the way the human eye observes details from a particular viewpoint.

|

||||

|

||||

<p align="center">

|

||||

<br>

|

||||

<iframe width="720" height="405" src="https://www.youtube.com/embed/in6xF7KgF7Q"

|

||||

title="YouTube video player" frameborder="0"

|

||||

allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share"

|

||||

allowfullscreen>

|

||||

</iframe>

|

||||

<br>

|

||||

<strong>Watch:</strong> VisionEye Mapping using Ultralytics YOLOv8

|

||||

</p>

|

||||

|

||||

## Samples

|

||||

| VisionEye View | VisionEye View With Object Tracking |

|

||||

|:------------------------------------------------------------------------------------------------------------------------------------------------------------:|:---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------:|

|

||||

|  |  |

|

||||

| VisionEye View Object Mapping using Ultralytics YOLOv8 | VisionEye View Object Mapping with Object Tracking using Ultralytics YOLOv8 |

|

||||

|

||||

|

||||

!!! Example "VisionEye Object Mapping using YOLOv8"

|

||||

|

||||

=== "VisionEye Object Mapping"

|

||||

```python

|

||||

import cv2

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.utils.plotting import colors, Annotator

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

names = model.model.names

|

||||

cap = cv2.VideoCapture("path/to/video/file.mp4")

|

||||

|

||||

out = cv2.VideoWriter('visioneye-pinpoint.avi', cv2.VideoWriter_fourcc(*'MJPG'),

|

||||

30, (int(cap.get(3)), int(cap.get(4))))

|

||||

|

||||

center_point = (-10, int(cap.get(4)))

|

||||

|

||||

while True:

|

||||

ret, im0 = cap.read()

|

||||

if not ret:

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

|

||||

results = model.predict(im0)

|

||||

boxes = results[0].boxes.xyxy.cpu()

|

||||

clss = results[0].boxes.cls.cpu().tolist()

|

||||

|

||||

annotator = Annotator(im0, line_width=2)

|

||||

|

||||

for box, cls in zip(boxes, clss):

|

||||

annotator.box_label(box, label=names[int(cls)], color=colors(int(cls)))

|

||||

annotator.visioneye(box, center_point)

|

||||

|

||||

out.write(im0)

|

||||

cv2.imshow("visioneye-pinpoint", im0)

|

||||

|

||||

if cv2.waitKey(1) & 0xFF == ord('q'):

|

||||

break

|

||||

|

||||

out.release()

|

||||

cap.release()

|

||||

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

=== "VisionEye Object Mapping with Object Tracking"

|

||||

```python

|

||||

import cv2

|

||||

from ultralytics import YOLO

|

||||

from ultralytics.utils.plotting import colors, Annotator

|

||||

|

||||

model = YOLO("yolov8n.pt")

|

||||

cap = cv2.VideoCapture("path/to/video/file.mp4")

|

||||

|

||||

out = cv2.VideoWriter('visioneye-pinpoint.avi', cv2.VideoWriter_fourcc(*'MJPG'),

|

||||

30, (int(cap.get(3)), int(cap.get(4))))

|

||||

|

||||

center_point = (-10, int(cap.get(4)))

|

||||

|

||||

while True:

|

||||

ret, im0 = cap.read()

|

||||

if not ret:

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

|

||||

results = model.track(im0, persist=True)

|

||||

boxes = results[0].boxes.xyxy.cpu()

|

||||

track_ids = results[0].boxes.id.int().cpu().tolist()

|

||||

|

||||

annotator = Annotator(im0, line_width=2)

|

||||

|

||||

for box, track_id in zip(boxes, track_ids):

|

||||

annotator.box_label(box, label=str(track_id), color=colors(int(track_id)))

|

||||

annotator.visioneye(box, center_point)

|

||||

|

||||

out.write(im0)

|

||||

cv2.imshow("visioneye-pinpoint", im0)

|

||||

|

||||

if cv2.waitKey(1) & 0xFF == ord('q'):

|

||||

break

|

||||

|

||||

out.release()

|

||||

cap.release()

|

||||

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

### `visioneye` Arguments

|

||||

|

||||

| Name | Type | Default | Description |

|

||||

|---------------|---------|------------------|--------------------------------------------------|

|

||||

| `color` | `tuple` | `(235, 219, 11)` | Line and object centroid color |

|

||||

| `pin_color` | `tuple` | `(255, 0, 255)` | VisionEye pinpoint color |

|

||||

| `thickness` | `int` | `2` | Pinpoint-to-object line thickness |

|

||||

| `pins_radius` | `int` | `10` | Pinpoint and object centroid point circle radius |
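To make the geometry concrete: per the `visioneye` implementation added in this commit, a line is drawn from `center_point` to the centre of each box, where the centre is the integer midpoint of the `xyxy` corners. A minimal sketch of that midpoint calculation follows. The helper name `bbox_center` is illustrative and not a library function.

```python
def bbox_center(box):
    """Integer midpoint of an (x1, y1, x2, y2) box, as computed inside visioneye."""
    x1, y1, x2, y2 = box[:4]
    return int((x1 + x2) / 2), int((y1 + y2) / 2)


# Pinpoint as built in the examples, assuming a 720-pixel-high frame.
center_point = (-10, 720)
box = (100.0, 200.0, 300.0, 500.0)
print(center_point, "->", bbox_center(box))  # (-10, 720) -> (200, 350)
```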

|

||||

|

||||

## Note

|

||||

|

||||

For any inquiries, feel free to post your questions in the [Ultralytics Issue Section](https://github.com/ultralytics/ultralytics/issues/new/choose) or the discussion section mentioned below.

|

||||

@ -45,10 +45,13 @@ Monitoring workouts through pose estimation with [Ultralytics YOLOv8](https://gi

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

exit(0)

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

frame_count += 1

|

||||

results = model.predict(im0, verbose=False)

|

||||

im0 = gym_object.start_counting(im0, results, frame_count)

|

||||

|

||||

cv2.destroyAllWindows()

|

||||

```

|

||||

|

||||

=== "Workouts Monitoring with Save Output"

|

||||

@ -76,12 +79,14 @@ Monitoring workouts through pose estimation with [Ultralytics YOLOv8](https://gi

|

||||

while cap.isOpened():

|

||||

success, im0 = cap.read()

|

||||

if not success:

|

||||

exit(0)

|

||||

print("Video frame is empty or video processing has been successfully completed.")

|

||||

break

|

||||

frame_count += 1

|

||||

results = model.predict(im0, verbose=False)

|

||||

im0 = gym_object.start_counting(im0, results, frame_count)

|

||||

video_writer.write(im0)

|

||||

|

||||

cv2.destroyAllWindows()

|

||||

video_writer.release()

|

||||

```

|

||||

|

||||

|

||||

@ -271,6 +271,8 @@ nav:

|

||||

- Objects Counting in Regions: guides/region-counting.md

|

||||

- Security Alarm System: guides/security-alarm-system.md

|

||||

- Heatmaps: guides/heatmaps.md

|

||||

- Instance Segmentation with Object Tracking: guides/instance-segmentation-and-tracking.md

|

||||

- VisionEye Mapping: guides/vision-eye.md

|

||||

- Integrations:

|

||||

- integrations/index.md

|

||||

- Comet ML: integrations/comet.md

|

||||

|

||||

@ -62,7 +62,7 @@ class AIGym:

|

||||

|

||||

def start_counting(self, im0, results, frame_count):

|

||||

"""

|

||||

function used to count the gym steps

|

||||

Function used to count the gym steps

|

||||

Args:

|

||||

im0 (ndarray): Current frame from the video stream.

|

||||

results: Pose estimation data

|

||||

|

||||

@ -139,10 +139,11 @@ class ObjectCounter:

|

||||

else:

|

||||

self.in_counts += 1

|

||||

|

||||

if self.env_check and self.view_img:

|

||||

incount_label = 'InCount : ' + f'{self.in_counts}'

|

||||

outcount_label = 'OutCount : ' + f'{self.out_counts}'

|

||||

self.annotator.count_labels(in_count=incount_label, out_count=outcount_label)

|

||||

|

||||

if self.env_check and self.view_img:

|

||||

cv2.namedWindow('Ultralytics YOLOv8 Object Counter')

|

||||

cv2.setMouseCallback('Ultralytics YOLOv8 Object Counter', self.mouse_event_for_region,

|

||||

{'region_points': self.reg_pts})

|

||||

|

||||

@ -345,20 +345,17 @@ class Annotator:

|

||||

font_scale = 0.6 + (line_thickness / 10.0)

|

||||

|

||||

# Draw angle

|

||||

(angle_text_width, angle_text_height), _ = cv2.getTextSize(angle_text, cv2.FONT_HERSHEY_SIMPLEX, font_scale,

|

||||

line_thickness)

|

||||

(angle_text_width, angle_text_height), _ = cv2.getTextSize(angle_text, 0, font_scale, line_thickness)

|

||||

angle_text_position = (int(center_kpt[0]), int(center_kpt[1]))

|

||||

angle_background_position = (angle_text_position[0], angle_text_position[1] - angle_text_height - 5)

|

||||

angle_background_size = (angle_text_width + 2 * 5, angle_text_height + 2 * 5 + (line_thickness * 2))

|

||||

cv2.rectangle(self.im, angle_background_position, (angle_background_position[0] + angle_background_size[0],

|

||||

angle_background_position[1] + angle_background_size[1]),

|

||||

(255, 255, 255), -1)

|

||||

cv2.putText(self.im, angle_text, angle_text_position, cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 0),

|

||||

line_thickness)

|

||||

cv2.putText(self.im, angle_text, angle_text_position, 0, font_scale, (0, 0, 0), line_thickness)

|

||||

|

||||

# Draw Counts

|

||||

(count_text_width, count_text_height), _ = cv2.getTextSize(count_text, cv2.FONT_HERSHEY_SIMPLEX, font_scale,

|

||||

line_thickness)

|

||||

(count_text_width, count_text_height), _ = cv2.getTextSize(count_text, 0, font_scale, line_thickness)

|

||||

count_text_position = (angle_text_position[0], angle_text_position[1] + angle_text_height + 20)

|

||||

count_background_position = (angle_background_position[0],

|

||||

angle_background_position[1] + angle_background_size[1] + 5)

|

||||

@ -367,12 +364,10 @@ class Annotator:

|

||||

cv2.rectangle(self.im, count_background_position, (count_background_position[0] + count_background_size[0],

|

||||

count_background_position[1] + count_background_size[1]),

|

||||

(255, 255, 255), -1)

|

||||

cv2.putText(self.im, count_text, count_text_position, cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 0),

|

||||

line_thickness)

|

||||

cv2.putText(self.im, count_text, count_text_position, 0, font_scale, (0, 0, 0), line_thickness)

|

||||

|

||||

# Draw Stage

|

||||

(stage_text_width, stage_text_height), _ = cv2.getTextSize(stage_text, cv2.FONT_HERSHEY_SIMPLEX, font_scale,

|

||||

line_thickness)

|

||||

(stage_text_width, stage_text_height), _ = cv2.getTextSize(stage_text, 0, font_scale, line_thickness)

|

||||

stage_text_position = (int(center_kpt[0]), int(center_kpt[1]) + angle_text_height + count_text_height + 40)

|

||||

stage_background_position = (stage_text_position[0], stage_text_position[1] - stage_text_height - 5)

|

||||

stage_background_size = (stage_text_width + 10, stage_text_height + 10)

|

||||

@ -380,8 +375,25 @@ class Annotator:

|

||||

cv2.rectangle(self.im, stage_background_position, (stage_background_position[0] + stage_background_size[0],

|

||||

stage_background_position[1] + stage_background_size[1]),

|

||||

(255, 255, 255), -1)

|

||||

cv2.putText(self.im, stage_text, stage_text_position, cv2.FONT_HERSHEY_SIMPLEX, font_scale, (0, 0, 0),

|

||||

line_thickness)

|

||||

cv2.putText(self.im, stage_text, stage_text_position, 0, font_scale, (0, 0, 0), line_thickness)

|

||||

|

||||

def seg_bbox(self, mask, mask_color=(255, 0, 255), det_label=None, track_label=None):

|

||||

"""Function for drawing segmented object in bounding box shape."""

|

||||

cv2.polylines(self.im, [np.int32([mask])], isClosed=True, color=mask_color, thickness=2)

|

||||

|

||||

label = f'Track ID: {track_label}' if track_label else det_label

|

||||

text_size, _ = cv2.getTextSize(label, 0, 0.7, 1)

|

||||

cv2.rectangle(self.im, (int(mask[0][0]) - text_size[0] // 2 - 10, int(mask[0][1]) - text_size[1] - 10),

|

||||

(int(mask[0][0]) + text_size[0] // 2 + 5, int(mask[0][1] + 5)), mask_color, -1)

|

||||

cv2.putText(self.im, label, (int(mask[0][0]) - text_size[0] // 2, int(mask[0][1]) - 5), 0, 0.7, (255, 255, 255),

|

||||

2)

|

||||

|

||||

def visioneye(self, box, center_point, color=(235, 219, 11), pin_color=(255, 0, 255), thickness=2, pins_radius=10):

|

||||

"""Function for pinpoint human-vision eye mapping and plotting."""

|

||||

center_bbox = int((box[0] + box[2]) / 2), int((box[1] + box[3]) / 2)

|

||||

cv2.circle(self.im, center_point, pins_radius, pin_color, -1)

|

||||

cv2.circle(self.im, center_bbox, pins_radius, color, -1)

|

||||

cv2.line(self.im, center_point, center_bbox, color, thickness)

|

||||

|

||||

|

||||

@TryExcept() # known issue https://github.com/ultralytics/yolov5/issues/5395

|

||||

|

||||
