Mirror of https://github.com/THU-MIG/yolov10.git (synced 2025-12-24 05:45:39 +08:00)

Add AzureML Quickstart Guides (#4772)

Co-authored-by: Glenn Jocher <glenn.jocher@ultralytics.com>

Parent: 8fd9a1a048 · Commit: 602022a56e

docs/guides/azureml-quickstart.md (new file, +122 lines)

@ -0,0 +1,122 @@

---
comments: true
description: Azure Machine Learning YOLOv8 quickstart
keywords: Ultralytics, YOLO, Deep Learning, Object detection, quickstart, Azure, AzureML
---

# YOLOv8 🚀 on AzureML

Note that this guide is only for quick trials from a compute terminal or from a Notebook. To unlock the full power of AzureML, see the documentation on how to:

- [Create a data asset](https://learn.microsoft.com/azure/machine-learning/how-to-create-data-assets)
- [Create an AzureML job](https://learn.microsoft.com/azure/machine-learning/how-to-train-model)
- [Register a model](https://learn.microsoft.com/azure/machine-learning/how-to-manage-models)
- [Train YOLOv8 with the AzureML Python SDK](https://medium.com/@ouphi/how-to-train-the-yolov8-model-with-azure-machine-learning-python-sdk-8268696be8ba)
- [Train YOLOv8 with the AzureML CLI](https://medium.com/@ouphi/how-to-train-the-yolov8-model-with-azureml-and-the-az-cli-73d3c870ba8e)

## Prerequisites

You need an [AzureML workspace](https://learn.microsoft.com/azure/machine-learning/concept-workspace?view=azureml-api-2).

## Create a compute instance

From your AzureML workspace, select Compute > Compute instances > New, then choose an instance with the resources you need.

<img width="1741" alt="create-compute-arrow" src="https://github.com/ouphi/ultralytics/assets/17216799/3e92fcc0-a08e-41a4-af81-d289cfe3b8f2">

## Quickstart from Terminal

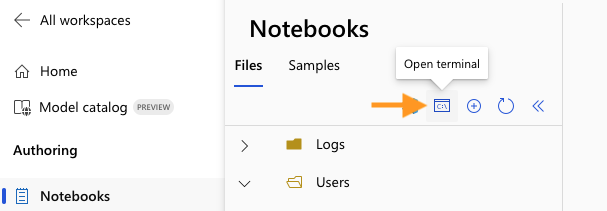

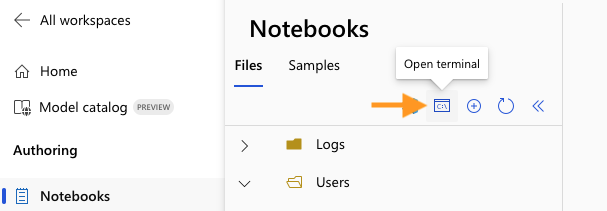

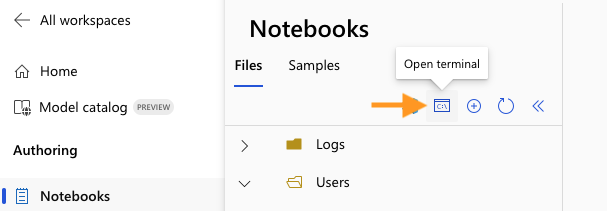

Start your compute and open a Terminal.

### Create virtualenv

Create your conda virtualenv and install pip in it:

```bash
conda create --name yolov8env -y
conda activate yolov8env
conda install pip -y
```

Install the required dependencies:

```bash
pip install ultralytics  # installs the package together with its Python dependencies
pip install "onnx>=1.12.0"  # quoted so the shell does not treat ">" as an output redirect
```
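
To confirm that the packages landed in the active `yolov8env` environment, a small stdlib-only check can compare installed versions against minimums. This is a minimal sketch, not part of the Ultralytics tooling: the `REQUIREMENTS` mapping and the helper names are hypothetical, and the version parsing only handles plain numeric versions such as `1.12.0` (it ignores pre-release tags).

```python
"""Check that required packages are installed at or above a minimum version.

Hypothetical helper for this guide (not part of Ultralytics); run it with the
Python interpreter of the activated yolov8env environment.
"""
import re
from importlib.metadata import PackageNotFoundError, version

# Hypothetical minimums mirroring the install commands above (None = any version).
REQUIREMENTS = {"ultralytics": None, "onnx": "1.12.0"}


def parse_version(text):
    """Turn '1.12.0' into (1, 12, 0); stops at the first non-numeric part ('1.16.1rc2' -> (1, 16, 1))."""
    parts = []
    for piece in text.split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
        if match.end() != len(piece):
            break
    return tuple(parts)


def meets_minimum(installed, minimum):
    """True if `installed` >= `minimum`, padding the shorter version with zeros."""
    if minimum is None:
        return True
    have, need = parse_version(installed), parse_version(minimum)
    width = max(len(have), len(need))
    have += (0,) * (width - len(have))
    need += (0,) * (width - len(need))
    return have >= need


def check_requirements(requirements):
    """Return {package: (installed_version_or_None, ok)} for the active environment."""
    report = {}
    for name, minimum in requirements.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            report[name] = (None, False)
        else:
            report[name] = (installed, meets_minimum(installed, minimum))
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_requirements(REQUIREMENTS).items():
        status = "OK" if ok else "missing or too old"
        print(f"{name}: {installed or 'not installed'} ({status})")
```

Save it under any name (for example a hypothetical `check_env.py`) and run `python check_env.py` after `conda activate yolov8env`. For versions with pre-release tags, `packaging.version.Version` gives PEP 440-correct ordering.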

### Perform YOLOv8 tasks

Predict:

```bash
yolo predict model=yolov8n.pt source='https://ultralytics.com/images/bus.jpg'
```

Train a detection model for 10 epochs with an initial learning rate (`lr0`) of 0.01:

```bash
yolo train data=coco128.yaml model=yolov8n.pt epochs=10 lr0=0.01
```
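
The CLI commands above follow the pattern `yolo MODE key=value ...`. When several runs are scripted from Python (for example in a notebook), building that argument list from keyword arguments keeps the overrides readable. A minimal sketch: `yolo_command` and `run_yolo` are hypothetical helpers, not part of the Ultralytics package, and `run_yolo` only prints the command when no `yolo` executable is on the `PATH`.

```python
"""Build and optionally run Ultralytics CLI commands of the form `yolo MODE key=value ...`."""
import shutil
import subprocess


def yolo_command(mode, **overrides):
    """Return the argv list for `yolo <mode> key=value ...` (hypothetical helper)."""
    args = ["yolo", mode]
    for key, value in overrides.items():
        args.append(f"{key}={value}")
    return args


def run_yolo(mode, **overrides):
    """Run the command if the `yolo` entry point is installed; return its exit code or None."""
    argv = yolo_command(mode, **overrides)
    if shutil.which("yolo") is None:
        print("yolo executable not found; would run:", " ".join(argv))
        return None
    # A list argv (no shell) means values such as URLs need no extra quoting.
    return subprocess.run(argv, check=False).returncode


if __name__ == "__main__":
    # Same arguments as the CLI training example above.
    print(yolo_command("train", data="coco128.yaml", model="yolov8n.pt", epochs=10, lr0=0.01))
    # -> ['yolo', 'train', 'data=coco128.yaml', 'model=yolov8n.pt', 'epochs=10', 'lr0=0.01']
```

Calling `run_yolo("train", data="coco128.yaml", model="yolov8n.pt", epochs=10, lr0=0.01)` would launch the same training run as the CLI example. Because the list is passed to `subprocess.run` without a shell, the single quotes around the `source` URL in the shell example are not needed.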

More [instructions for using the Ultralytics CLI](https://docs.ultralytics.com/quickstart/#use-ultralytics-with-cli) are available in the Ultralytics docs.

## Quickstart from a Notebook

### Create a new IPython kernel

Open the compute Terminal.

From your compute terminal, create a new ipykernel that your notebook will use to manage its dependencies:

```bash
conda create --name yolov8env -y
conda activate yolov8env
conda install pip -y
conda install ipykernel -y
python -m ipykernel install --user --name yolov8env --display-name "yolov8env"
```
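
`python -m ipykernel install --user` writes a kernel spec (a `kernel.json` file) into the user's Jupyter data directory, which on Linux is by default `~/.local/share/jupyter/kernels/<name>/` (or `$XDG_DATA_HOME/jupyter/kernels/<name>/`). If the new kernel does not appear in the Notebook's kernel picker, reading that file is a quick check. A minimal sketch, assuming that default Linux location and ignoring other overrides; `jupyter kernelspec list` reports the authoritative paths, and `read_kernel_spec` is a hypothetical helper.

```python
"""Inspect a user-level Jupyter kernel spec (assumes the default Linux data directory)."""
import json
import os
from pathlib import Path


def user_kernels_dir():
    """Default user kernels dir on Linux: $XDG_DATA_HOME/jupyter/kernels or ~/.local/share/jupyter/kernels."""
    data_home = os.environ.get("XDG_DATA_HOME") or str(Path.home() / ".local" / "share")
    return Path(data_home) / "jupyter" / "kernels"


def read_kernel_spec(name, kernels_dir=None):
    """Return the parsed kernel.json for `name`, or None if it is not registered there."""
    spec_file = Path(kernels_dir or user_kernels_dir()) / name / "kernel.json"
    if not spec_file.is_file():
        return None
    with spec_file.open(encoding="utf-8") as fh:
        return json.load(fh)


if __name__ == "__main__":
    spec = read_kernel_spec("yolov8env")
    if spec is None:
        print("yolov8env kernel not found; re-run the ipykernel install command")
    else:
        # argv[0] should be the python executable inside the yolov8env conda environment.
        print(spec["display_name"], "->", spec["argv"][0])
```

If `argv[0]` points at a Python outside `yolov8env`, the kernel was registered from the wrong environment and notebook cells will not see the packages installed there.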

Close your terminal and create a new notebook. From your Notebook, you can select the new kernel.

Then you can open a Notebook cell and install the required dependencies:

```bash
%%bash
source activate yolov8env
pip install ultralytics
pip install "onnx>=1.12.0"
```

Note that every `%%bash` cell must start with `source activate yolov8env`, because each `%%bash` cell runs in a new shell and does not inherit an environment activated in a previous cell.
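
Why every `%%bash` cell needs its own activation line: IPython's `%%bash` magic runs each cell in a new `bash` subprocess, so shell state from an earlier cell (an activated environment, a `cd`, exported variables) is gone. The sketch below demonstrates that isolation with two separate `bash` processes and shows a hypothetical helper, `bash_cell_script`, that prepends the activation line to a cell body. It assumes `bash` is on the `PATH` and is not part of IPython's API.

```python
"""Show that separate bash processes (like separate %%bash cells) do not share shell state."""
import shutil
import subprocess


def bash_cell_script(body, env_name="yolov8env"):
    """Hypothetical helper: prefix a %%bash cell body with the activation line it needs."""
    return f"source activate {env_name}\n{body}"


def run_bash(script):
    """Run `script` in a fresh bash process, like a single %%bash cell, and return stdout."""
    result = subprocess.run(["bash", "-c", script], capture_output=True, text=True, check=True)
    return result.stdout.strip()


if __name__ == "__main__" and shutil.which("bash"):
    # "Cell 1" exports a variable; "cell 2" is a new process and cannot see it.
    run_bash("export YOLO_GUIDE_DEMO_VAR=set-in-cell-1")
    print(run_bash('echo "${YOLO_GUIDE_DEMO_VAR:-unset}"'))  # prints: unset
    # Commands inside ONE process share state, which is why activation goes in every cell.
    print(run_bash('export YOLO_GUIDE_DEMO_VAR=same-cell; echo "$YOLO_GUIDE_DEMO_VAR"'))  # prints: same-cell
    print(bash_cell_script("yolo predict model=yolov8n.pt"))
```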

Run some predictions using the [Ultralytics CLI](https://docs.ultralytics.com/quickstart/#use-ultralytics-with-cli):

```bash
%%bash
source activate yolov8env
yolo predict model=yolov8n.pt source='https://ultralytics.com/images/bus.jpg'
```

Or with the [Ultralytics Python interface](https://docs.ultralytics.com/quickstart/#use-ultralytics-with-python), for example to train the model:

```python
from ultralytics import YOLO

# Load a model
model = YOLO("yolov8n.pt")  # load an official YOLOv8n model

# Use the model
model.train(data="coco128.yaml", epochs=3)  # train the model
metrics = model.val()  # evaluate model performance on the validation set
results = model("https://ultralytics.com/images/bus.jpg")  # predict on an image
path = model.export(format="onnx")  # export the model to ONNX format
```

@ -17,6 +17,7 @@ Here's a compilation of in-depth guides to help you master different aspects of

* [K-Fold Cross Validation](kfold-cross-validation.md) 🚀 NEW: Learn how to improve model generalization using K-Fold cross-validation technique.
* [Hyperparameter Tuning](hyperparameter-tuning.md) 🚀 NEW: Discover how to optimize your YOLO models by fine-tuning hyperparameters using the Tuner class and genetic evolution algorithms.
* [Using YOLOv8 with SAHI for Sliced Inference](sahi-tiled-inference.md) 🚀 NEW: Comprehensive guide on leveraging SAHI's sliced inference capabilities with YOLOv8 for object detection in high-resolution images.
* [AzureML Quickstart](azureml-quickstart.md) 🚀 NEW: Get up and running with Ultralytics YOLO models on Microsoft's Azure Machine Learning platform. Learn how to train, deploy, and scale your object detection projects in the cloud.

## Contribute to Our Guides

@ -24,4 +25,4 @@ We welcome contributions from the community! If you've mastered a particular asp

To get started, please read our [Contributing Guide](https://docs.ultralytics.com/help/contributing) for guidelines on how to open up a Pull Request (PR) 🛠️. We look forward to your contributions!

Let's work together to make the Ultralytics YOLO ecosystem more robust and versatile 🙏!


docs/yolov5/environments/azureml_quickstart_tutorial.md (new file, +95 lines)

@ -0,0 +1,95 @@

---
comments: true
description: Azure Machine Learning YOLOv5 quickstart
keywords: Ultralytics, YOLO, Deep Learning, Object detection, quickstart, Azure, AzureML
---

# YOLOv5 🚀 on AzureML

This guide provides a quickstart for using YOLOv5 from an AzureML compute instance.

Note that this guide is only meant for quick trials. To unlock the full power of AzureML, see the documentation on how to:

- [Create a data asset](https://learn.microsoft.com/azure/machine-learning/how-to-create-data-assets)
- [Create an AzureML job](https://learn.microsoft.com/azure/machine-learning/how-to-train-model)
- [Register a model](https://learn.microsoft.com/azure/machine-learning/how-to-manage-models)

## Prerequisites

You need an [AzureML workspace](https://learn.microsoft.com/azure/machine-learning/concept-workspace?view=azureml-api-2).

## Create a compute instance

From your AzureML workspace, select Compute > Compute instances > New, then choose an instance with the resources you need.

<img width="1741" alt="create-compute-arrow" src="https://github.com/ouphi/ultralytics/assets/17216799/3e92fcc0-a08e-41a4-af81-d289cfe3b8f2">

## Open a Terminal

Now from the Notebooks view, open a Terminal and select your compute.

## Set up and run YOLOv5

Now create a virtual environment:

```bash
conda create --name yolov5env -y
conda activate yolov5env
conda install pip -y
```

Clone the YOLOv5 repository with its submodules:

```bash
git clone https://github.com/ultralytics/yolov5
cd yolov5
git submodule update --init --recursive  # if git asks you to mark the folder as a safe.directory, run the git config command it suggests
```

Install the required dependencies:

```bash
pip install -r requirements.txt  # run from inside the cloned yolov5 directory
pip install "onnx>=1.10.0"  # quoted so the shell does not treat ">" as an output redirect
```

Train the YOLOv5 model:

```bash
python train.py
```
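
By default, `train.py` saves each run to a new directory under `runs/train/` (`exp`, `exp2`, `exp3`, ...) with the checkpoints in `weights/best.pt` and `weights/last.pt`. To validate, run inference with, or export your own model instead of the pretrained `yolov5s.pt`, pass that checkpoint to `--weights`. A minimal sketch for locating the newest run, assuming the default `runs/train` project directory and `exp` run names (both can be changed with `--project` and `--name`); `latest_best_weights` and `run_index` are hypothetical helpers.

```python
"""Locate weights/best.pt of the most recent YOLOv5 training run (default runs/train/exp* layout)."""
import re
from pathlib import Path


def run_index(run_dir):
    """'exp' -> 1, 'exp2' -> 2, 'exp10' -> 10; any other name -> 0 (ignored)."""
    match = re.fullmatch(r"exp(\d*)", run_dir.name)
    if match is None:
        return 0
    return int(match.group(1) or 1)


def latest_best_weights(project_dir="runs/train"):
    """Return best.pt of the highest-numbered exp run that has one, or None."""
    runs = [p for p in Path(project_dir).glob("exp*") if p.is_dir() and run_index(p) > 0]
    for run in sorted(runs, key=run_index, reverse=True):
        best = run / "weights" / "best.pt"
        if best.is_file():  # skip runs that were interrupted before saving best.pt
            return best
    return None


if __name__ == "__main__":
    weights = latest_best_weights()  # run from inside the yolov5 directory
    if weights is None:
        print("No finished training run found under runs/train")
    else:
        print(f"python val.py --weights {weights}")
```

Sorting by the numeric suffix rather than by name keeps `exp10` ahead of `exp9`, which a plain string sort would get wrong.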

Validate the model for Precision, Recall, and mAP:

```bash
python val.py --weights yolov5s.pt
```
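
Roughly, the metrics reported by `val.py` work like this: detections are sorted by confidence, each counts as a true positive (TP) if it matches a not-yet-matched ground-truth box with IoU at or above a threshold, precision is TP / (TP + FP), recall is TP / (number of ground-truth boxes), and AP is the area under the precision-recall curve. mAP averages AP over classes, and mAP@0.5:0.95 additionally averages over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The toy sketch below computes these for one class using all-point interpolation; YOLOv5's own `compute_ap` uses 101-point interpolation by default, so its numbers can differ slightly. The function names are hypothetical.

```python
"""Toy precision / recall / average-precision computation for one class (illustrative only)."""
from itertools import accumulate


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def precision_recall(tp_flags, num_gt):
    """tp_flags: 1/0 per detection, already sorted by descending confidence."""
    precision, recall = [], []
    for n_det, tp in enumerate(accumulate(tp_flags), start=1):
        precision.append(tp / n_det)  # TP / (TP + FP) among the top n_det detections
        recall.append(tp / num_gt)  # TP / all ground-truth boxes
    return precision, recall


def average_precision(precision, recall):
    """Area under the PR curve with all-point interpolation (precision envelope)."""
    mrec = [0.0] + list(recall) + [1.0]
    mpre = [0.0] + list(precision) + [0.0]
    # Make precision non-increasing when read from right to left.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Rectangle areas; segments where recall does not change contribute zero.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


if __name__ == "__main__":
    # Three detections for two ground-truth boxes: hit, miss, hit.
    p, r = precision_recall([1, 0, 1], num_gt=2)
    print(p, r)
    print(round(average_precision(p, r), 4))  # 0.8333
    print(round(box_iou((0, 0, 2, 2), (1, 1, 3, 3)), 4))  # 0.1429 (overlap 1 / union 7)
```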

Run inference on images and videos:

```bash
python detect.py --weights yolov5s.pt --source path/to/images
```

Export models to other formats:

```bash
python export.py --weights yolov5s.pt --include torchscript onnx
```

## Notes on using a notebook

Note that if you want to run these commands from a Notebook, you need to [create a new Kernel](https://learn.microsoft.com/en-us/azure/machine-learning/how-to-access-terminal?view=azureml-api-2#add-new-kernels) and select your new Kernel at the top of your Notebook.

If you create Python cells, they will automatically use your custom environment, but if you add bash cells, you will need to run `source activate <your-env>` in each of these cells to make sure they use your custom environment.

For example:

```bash
%%bash
source activate yolov5env
python val.py --weights yolov5s.pt
```

@ -57,6 +57,7 @@ YOLOv5 is designed to be run in the following up-to-date, verified environments,

GPU: <a href="https://bit.ly/yolov5-paperspace-notebook"><img src="https://assets.paperspace.io/img/gradient-badge.svg" alt="Run on Gradient"></a> <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a> <a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>
- **Google Cloud** Deep Learning VM. See [GCP Quickstart Guide](environments/google_cloud_quickstart_tutorial.md)
- **Amazon** Deep Learning AMI. See [AWS Quickstart Guide](environments/aws_quickstart_tutorial.md)
- **Azure** Azure Machine Learning. See [AzureML Quickstart Guide](environments/azureml_quickstart_tutorial.md)
- **Docker Image**. See [Docker Quickstart Guide](environments/docker_image_quickstart_tutorial.md) <a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>

## Status

@ -217,6 +217,7 @@ nav:

- K-Fold Cross Validation: guides/kfold-cross-validation.md
- Hyperparameter Tuning: guides/hyperparameter-tuning.md
- SAHI Tiled Inference: guides/sahi-tiled-inference.md
- AzureML Quickstart: guides/azureml-quickstart.md
- Integrations:
- integrations/index.md
- OpenVINO: integrations/openvino.md

@ -234,6 +235,7 @@ nav:

- Environments:
- Amazon Web Services (AWS): yolov5/environments/aws_quickstart_tutorial.md
- Google Cloud (GCP): yolov5/environments/google_cloud_quickstart_tutorial.md
- AzureML: yolov5/environments/azureml_quickstart_tutorial.md
- Docker Image: yolov5/environments/docker_image_quickstart_tutorial.md
- Tutorials:
- Train Custom Data: yolov5/tutorials/train_custom_data.md

@ -214,7 +214,7 @@ def add_integration_callbacks(instance):

callbacks_list.extend([clear_cb, comet_cb, dvc_cb, mlflow_cb, neptune_cb, tune_cb, tb_cb, wb_cb])

# Load export callbacks (patch to avoid CoreML protobuf error)
if 'Exporter' in instance.__class__.__name__ and instance.args.format in ('coreml', 'mlmodel'):
if 'Exporter' in instance.__class__.__name__:
from .tensorboard import callbacks as tb_cb
callbacks_list.append(tb_cb)
