Mirror of https://github.com/THU-MIG/yolov10.git (synced 2026-01-05 14:25:28 +08:00)

ultralytics 8.0.173 fix missing val plots and new Conda Guide (#4783)

Parent: 602022a56e
Commit: dfe6dfb1d2

@ -1,34 +1,48 @@
---
comments: true
description: Azure Machine Learning YOLOv8 quickstart
keywords: Ultralytics, YOLO, Deep Learning, Object detection, quickstart, Azure, AzureML
description: Step-by-step Quickstart Guide to Running YOLOv8 Object Detection Models on AzureML for Fast Prototyping and Testing
keywords: Ultralytics, YOLOv8, Object Detection, Azure Machine Learning, Quickstart Guide, Prototype, Compute Instance, Terminal, Notebook, IPython Kernel, CLI, Python SDK
---

# YOLOv8 🚀 on AzureML

Note that this guide is only for quick trials from a compute terminal or from a Notebook. If you want to unlock the full power of AzureML, you can find the documentation to:
## What is Azure?

- [Create a data asset](https://learn.microsoft.com/azure/machine-learning/how-to-create-data-assets)
- [Create an AzureML job](https://learn.microsoft.com/azure/machine-learning/how-to-train-model)
- [Register a model](https://learn.microsoft.com/azure/machine-learning/how-to-manage-models)
- [Train YOLOv8 with the AzureML Python SDK](https://medium.com/@ouphi/how-to-train-the-yolov8-model-with-azure-machine-learning-python-sdk-8268696be8ba)
- [Train YOLOv8 with the AzureML CLI](https://medium.com/@ouphi/how-to-train-the-yolov8-model-with-azureml-and-the-az-cli-73d3c870ba8e)
[Azure](https://azure.microsoft.com/) is Microsoft's cloud computing platform, designed to help organizations move their workloads to the cloud from on-premises data centers. With the full spectrum of cloud services including those for computing, databases, analytics, machine learning, and networking, users can pick and choose from these services to develop and scale new applications, or run existing applications, in the public cloud.

## What is Azure Machine Learning (AzureML)?

Azure Machine Learning, commonly referred to as AzureML, is a fully managed cloud service that enables data scientists and developers to efficiently embed predictive analytics into their applications, helping organizations use massive data sets and bring all the benefits of the cloud to machine learning. AzureML offers a variety of services and capabilities aimed at making machine learning accessible, easy to use, and scalable. It provides capabilities like automated machine learning, drag-and-drop model training, as well as a robust Python SDK so that developers can make the most out of their machine learning models.

## How Does AzureML Benefit YOLO Users?

For users of YOLO (You Only Look Once), AzureML provides a robust, scalable, and efficient platform to both train and deploy machine learning models. Whether you are looking to run quick prototypes or scale up to handle more extensive data, AzureML's flexible and user-friendly environment offers various tools and services to fit your needs. You can leverage AzureML to:

- Easily manage large datasets and computational resources for training.
- Utilize built-in tools for data preprocessing, feature selection, and model training.
- Collaborate more efficiently with capabilities for MLOps (Machine Learning Operations), including but not limited to monitoring, auditing, and versioning of models and data.

In the subsequent sections, you will find a quickstart guide detailing how to run YOLOv8 object detection models using AzureML, either from a compute terminal or a notebook.

## Prerequisites

You need an [AzureML workspace](https://learn.microsoft.com/azure/machine-learning/concept-workspace?view=azureml-api-2).
Before you can get started, make sure you have access to an AzureML workspace. If you don't have one, you can create a new [AzureML workspace](https://learn.microsoft.com/azure/machine-learning/concept-workspace?view=azureml-api-2) by following Azure's official documentation. This workspace acts as a centralized place to manage all AzureML resources.

## Create a compute instance

From your AzureML workspace, select Compute > Compute instances > New, then select the instance with the resources you need.

<img width="1741" alt="create-compute-arrow" src="https://github.com/ouphi/ultralytics/assets/17216799/3e92fcc0-a08e-41a4-af81-d289cfe3b8f2">
<p align="center">
<img width="1280" src="https://github.com/ouphi/ultralytics/assets/17216799/3e92fcc0-a08e-41a4-af81-d289cfe3b8f2" alt="Create Azure Compute Instance">
</p>

## Quickstart from Terminal

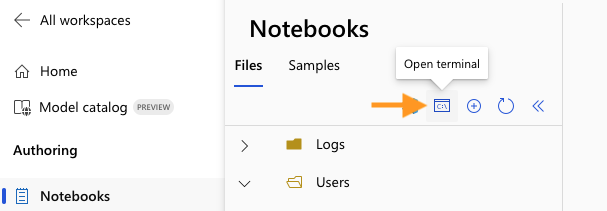

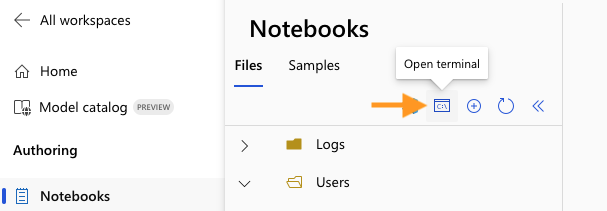

Start your compute and open a Terminal:

|

||||

|

||||

|

||||

<p align="center">
<img width="480" src="https://github.com/ouphi/ultralytics/assets/17216799/635152f1-f4a3-4261-b111-d416cb5ef357" alt="Open Terminal">
</p>

### Create virtualenv

@ -71,7 +85,9 @@ You can find more [instructions to use the Ultralytics cli here](https://docs.ul

Open the compute Terminal.

<p align="center">
<img width="480" src="https://github.com/ouphi/ultralytics/assets/17216799/635152f1-f4a3-4261-b111-d416cb5ef357" alt="Open Terminal">
</p>

From your compute terminal, you need to create a new ipykernel that will be used by your notebook to manage your dependencies:

@ -120,3 +136,17 @@ metrics = model.val() # evaluate model performance on the validation set
results = model("https://ultralytics.com/images/bus.jpg") # predict on an image
path = model.export(format="onnx") # export the model to ONNX format
```

You can use either the Ultralytics CLI or Python interface for running YOLOv8 tasks, as described in the terminal section above.

By following these steps, you should be able to get YOLOv8 running on AzureML for quick trials. For more advanced uses, refer to the full AzureML documentation linked at the beginning of this guide.

## Explore More with AzureML

This guide serves as an introduction to get you up and running with YOLOv8 on AzureML. However, it only scratches the surface of what AzureML can offer. To delve deeper and unlock the full potential of AzureML for your machine learning projects, consider exploring the following resources:

- [Create a Data Asset](https://learn.microsoft.com/azure/machine-learning/how-to-create-data-assets): Learn how to set up and manage your data assets effectively within the AzureML environment.
- [Initiate an AzureML Job](https://learn.microsoft.com/azure/machine-learning/how-to-train-model): Get a comprehensive understanding of how to kickstart your machine learning training jobs on AzureML.
- [Register a Model](https://learn.microsoft.com/azure/machine-learning/how-to-manage-models): Familiarize yourself with model management practices including registration, versioning, and deployment.
- [Train YOLOv8 with AzureML Python SDK](https://medium.com/@ouphi/how-to-train-the-yolov8-model-with-azure-machine-learning-python-sdk-8268696be8ba): Explore a step-by-step guide on using the AzureML Python SDK to train your YOLOv8 models.
- [Train YOLOv8 with AzureML CLI](https://medium.com/@ouphi/how-to-train-the-yolov8-model-with-azureml-and-the-az-cli-73d3c870ba8e): Discover how to utilize the command-line interface for streamlined training and management of YOLOv8 models on AzureML.

132
docs/guides/conda-quickstart.md
Normal file
@ -0,0 +1,132 @@
---
comments: true
description: Comprehensive guide to setting up and using Ultralytics YOLO models in a Conda environment. Learn how to install the package, manage dependencies, and get started with object detection projects.
keywords: Ultralytics, YOLO, Conda, environment setup, object detection, package installation, deep learning, machine learning, guide
---

# Conda Quickstart Guide for Ultralytics

This guide provides a comprehensive introduction to setting up a Conda environment for your Ultralytics projects. Conda is an open-source package and environment management system that offers an excellent alternative to pip for installing packages and dependencies. Its isolated environments make it particularly well-suited for data science and machine learning endeavors. For more details, visit the Ultralytics Conda package on [Anaconda](https://anaconda.org/conda-forge/ultralytics) and check out the Ultralytics feedstock repository for package updates on [GitHub](https://github.com/conda-forge/ultralytics-feedstock/).

<p align="center">
<img width="1024" src="https://user-images.githubusercontent.com/26833433/266324397-32119e21-8c86-43e5-a00e-79827d303d10.png" alt="Ultralytics Conda Package Visual">
</p>

## What You Will Learn

- Setting up a Conda environment
- Installing Ultralytics via Conda
- Initializing Ultralytics in your environment
- Using Ultralytics Docker images with Conda

---

## Prerequisites

- You should have Anaconda or Miniconda installed on your system. If not, download and install it from [Anaconda](https://www.anaconda.com/products/distribution) or [Miniconda](https://docs.conda.io/en/latest/miniconda.html).

---

## Setting up a Conda Environment

First, let's create a new Conda environment. Open your terminal and run the following command:

```bash
conda create --name ultralytics-env python=3.8 -y
```

Activate the new environment:

```bash
conda activate ultralytics-env
```
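
Before installing anything, you may want to confirm from Python that the new environment is the one actually in use. This is a minimal sketch that assumes `conda activate` exports `CONDA_DEFAULT_ENV` (standard conda behavior); the helper names `active_conda_env` and `python_is_supported` are hypothetical, and the `(3, 8)` minimum simply mirrors the `python=3.8` used above.

```python
import os
import sys
from typing import Mapping, Optional


def active_conda_env(environ: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the name of the active Conda environment, or None if none is active.

    Assumes `conda activate` has set CONDA_DEFAULT_ENV (standard conda behavior).
    """
    env = os.environ if environ is None else environ
    return env.get("CONDA_DEFAULT_ENV") or None


def python_is_supported(minimum=(3, 8), version_info=None) -> bool:
    """Compare the interpreter's (major, minor) version against a minimum."""
    current = sys.version_info if version_info is None else version_info
    return tuple(current[:2]) >= tuple(minimum)


if __name__ == "__main__":
    print("Active Conda environment:", active_conda_env())
    print("Python", sys.version.split()[0], "supported:", python_is_supported())
```

Run it inside the activated environment; seeing `ultralytics-env` as the active environment indicates the `conda activate` step took effect.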

---

## Installing Ultralytics

You can install the Ultralytics package from the conda-forge channel. Execute the following command:

```bash
conda install -c conda-forge ultralytics
```
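
A quick way to confirm that the package landed in the active environment is to ask Python for its installed metadata. This sketch uses only the standard-library `importlib.metadata` module (Python 3.8+); `installed_version` is a hypothetical helper name, not an Ultralytics API.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    v = installed_version("ultralytics")
    if v is None:
        print("ultralytics is not installed in this environment")
    else:
        print(f"ultralytics {v} is installed")
```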

### Note on CUDA Environment

If you're working in a CUDA-enabled environment, it's good practice to install `ultralytics`, `pytorch`, and `pytorch-cuda` together in a single command so that Conda can resolve any version conflicts between them:

```bash
conda install -c pytorch -c nvidia -c conda-forge pytorch torchvision pytorch-cuda=11.8 ultralytics
```
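
After the combined install, you can check from Python whether a CUDA-enabled PyTorch build was picked up. This sketch relies only on `torch.cuda.is_available()` and `torch.version.cuda` from PyTorch and degrades gracefully when PyTorch is missing; the helper name `cuda_report` and its dictionary layout are illustrative assumptions.

```python
import importlib
import importlib.util


def cuda_report() -> dict:
    """Summarize whether PyTorch is installed and whether it can see a CUDA device.

    Returns a dict instead of raising, so it also works before PyTorch is installed.
    """
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False, "torch_version": None, "cuda_available": False, "cuda_version": None}
    torch = importlib.import_module("torch")
    return {
        "torch_installed": True,
        "torch_version": str(torch.__version__),
        "cuda_available": bool(torch.cuda.is_available()),
        "cuda_version": torch.version.cuda,  # None for CPU-only builds
    }


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(f"{key}: {value}")
```

If `cuda_version` prints `None`, Conda most likely resolved a CPU-only PyTorch build, and the channel order in the install command is worth rechecking.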

---

## Using Ultralytics

With Ultralytics installed, you can now start using its robust features for object detection, instance segmentation, and more. For example, to run inference on an image:

```python
from PIL import Image

from ultralytics import YOLO

model = YOLO('yolov8n.pt')  # initialize model
results = model('path/to/image.jpg')  # perform inference; returns a list of Results objects

for r in results:
    im_array = r.plot()  # BGR numpy array with the predictions drawn on it
    Image.fromarray(im_array[..., ::-1]).show()  # convert BGR to RGB and display
```

---

## Ultralytics Conda Docker Image

If you prefer using Docker, Ultralytics offers Docker images with a Conda environment included. You can pull these images from [DockerHub](https://hub.docker.com/r/ultralytics/ultralytics).

Pull the latest Ultralytics image:

```bash
# Set image name as a variable
t=ultralytics/ultralytics:latest-conda

# Pull the latest Ultralytics image from Docker Hub
sudo docker pull $t
```

Run the image:

```bash
# Run the Ultralytics image in a container with GPU support
sudo docker run -it --ipc=host --gpus all $t # all GPUs
sudo docker run -it --ipc=host --gpus '"device=2,3"' $t # specify GPUs
```
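
The nested quotes in `'"device=2,3"'` are easy to get wrong: the shell strips the outer single quotes, and Docker parses the remaining `--gpus` value as comma-separated fields, so the inner double quotes keep `device=2,3` together as one field. The sketch below builds the same commands as Python argument lists, which sidesteps shell quoting; `docker_run_args` is a hypothetical helper, and it omits `sudo`, assuming your user can reach the Docker daemon.

```python
import shlex
from typing import List, Optional, Sequence


def docker_run_args(image: str, gpus: Optional[Sequence[int]] = None) -> List[str]:
    """Build a `docker run` argument list like the commands above (without sudo).

    gpus=None exposes all GPUs; otherwise only the listed device indices are exposed.
    """
    if gpus is None:
        gpu_value = "all"
    elif len(gpus) == 0:
        raise ValueError("gpus must be None or a non-empty sequence of device indices")
    else:
        # Docker splits the --gpus value on commas (CSV), so the device list is wrapped
        # in double quotes to keep "device=2,3" together as a single field.
        gpu_value = '"device={}"'.format(",".join(str(i) for i in gpus))
    return ["docker", "run", "-it", "--ipc=host", "--gpus", gpu_value, image]


if __name__ == "__main__":
    image = "ultralytics/ultralytics:latest-conda"
    # shlex.join (Python 3.8+) prints the equivalent shell command with correct quoting.
    print(shlex.join(docker_run_args(image)))
    print(shlex.join(docker_run_args(image, gpus=[2, 3])))
```

The second printed line reproduces the quoting used in the guide. Passing the list directly to `subprocess.run(...)` avoids shell quoting altogether, because each element reaches Docker verbatim.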

---

## Speeding Up Installation with Libmamba

If you're looking to [speed up the package installation](https://www.anaconda.com/blog/a-faster-conda-for-a-growing-community) process in Conda, you can opt to use `libmamba`, a fast, cross-platform, and dependency-aware solver that serves as an alternative to Conda's default solver.

### How to Enable Libmamba

To enable `libmamba` as the solver for Conda, you can perform the following steps:

1. First, install the `conda-libmamba-solver` package. You can skip this step if your Conda version is 23.10 or later, where `libmamba` is already the default solver.

```bash
conda install conda-libmamba-solver
```

2. Next, configure Conda to use `libmamba` as the solver:

```bash
conda config --set solver libmamba
```

And that's it! Your Conda installation will now use `libmamba` as the solver, which should result in a faster package installation process.
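
To confirm the setting took effect, you can inspect the output of `conda config --show solver`, which prints a line such as `solver: libmamba`. The sketch below wraps that command with `subprocess` and parses the result; the parser assumes that `key: value` output format, and `parse_solver` and `configured_solver` are hypothetical helper names. It returns `None` when `conda` is not on `PATH`.

```python
import shutil
import subprocess
from typing import Optional


def parse_solver(config_output: str) -> Optional[str]:
    """Extract the solver name from `conda config --show solver` output (assumed `solver: <name>`)."""
    for line in config_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "solver":
            return value.strip() or None
    return None


def configured_solver() -> Optional[str]:
    """Run `conda config --show solver`; return None if conda is unavailable or the call fails."""
    conda = shutil.which("conda")
    if conda is None:
        return None
    result = subprocess.run([conda, "config", "--show", "solver"], capture_output=True, text=True, check=False)
    return parse_solver(result.stdout) if result.returncode == 0 else None


if __name__ == "__main__":
    print("Configured conda solver:", configured_solver())
```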

---

Congratulations! You have successfully set up a Conda environment, installed the Ultralytics package, and are now ready to explore its rich functionalities. Feel free to dive deeper into the [Ultralytics documentation](https://docs.ultralytics.com/) for more advanced tutorials and examples.

@ -18,6 +18,7 @@ Here's a compilation of in-depth guides to help you master different aspects of
* [Hyperparameter Tuning](hyperparameter-tuning.md) 🚀 NEW: Discover how to optimize your YOLO models by fine-tuning hyperparameters using the Tuner class and genetic evolution algorithms.
* [Using YOLOv8 with SAHI for Sliced Inference](sahi-tiled-inference.md) 🚀 NEW: Comprehensive guide on leveraging SAHI's sliced inference capabilities with YOLOv8 for object detection in high-resolution images.
* [AzureML Quickstart](azureml-quickstart.md) 🚀 NEW: Get up and running with Ultralytics YOLO models on Microsoft's Azure Machine Learning platform. Learn how to train, deploy, and scale your object detection projects in the cloud.
* [Conda Quickstart](conda-quickstart.md) 🚀 NEW: Step-by-step guide to setting up a Conda environment for Ultralytics. Learn how to install and start using the Ultralytics package efficiently with Conda.

## Contribute to Our Guides

@ -218,6 +218,7 @@ nav:
      - Hyperparameter Tuning: guides/hyperparameter-tuning.md
      - SAHI Tiled Inference: guides/sahi-tiled-inference.md
      - AzureML Quickstart: guides/azureml-quickstart.md
      - Conda Quickstart: guides/conda-quickstart.md
  - Integrations:
      - integrations/index.md
      - OpenVINO: integrations/openvino.md

@ -1,6 +1,6 @@
# Ultralytics YOLO 🚀, AGPL-3.0 license

__version__ = '8.0.172'
__version__ = '8.0.173'

from ultralytics.models import RTDETR, SAM, YOLO
from ultralytics.models.fastsam import FastSAM

@ -571,6 +571,7 @@ class BaseTrainer:
                strip_optimizer(f) # strip optimizers
                if f is self.best:
                    LOGGER.info(f'\nValidating {f}...')
                    self.validator.args.plots = self.args.plots
                    self.metrics = self.validator(model=f)
                    self.metrics.pop('fitness', None)
                    self.run_callbacks('on_fit_epoch_end')

@ -102,8 +102,8 @@ class BaseValidator:
    @smart_inference_mode()
    def __call__(self, trainer=None, model=None):
        """
        Supports validation of a pre-trained model if passed or a model being trained
        if trainer is passed (trainer gets priority).
        Supports validation of a pre-trained model if passed or a model being trained if trainer is passed (trainer
        gets priority).
        """
        self.training = trainer is not None
        augment = self.args.augment and (not self.training)

@ -58,7 +58,7 @@ class ClassificationTrainer(BaseTrainer):
        return model

    def setup_model(self):
        """load/create/download model for any task"""
        """Load, create or download model for any task."""
        if isinstance(self.model, torch.nn.Module): # if model is loaded beforehand. No setup needed
            return

@ -131,13 +131,13 @@ class ClassificationTrainer(BaseTrainer):
        for f in self.last, self.best:
            if f.exists():
                strip_optimizer(f) # strip optimizers
                # TODO: validate best.pt after training completes
                # if f is self.best:
                # LOGGER.info(f'\nValidating {f}...')
                # self.validator.args.save_json = True
                # self.metrics = self.validator(model=f)
                # self.metrics.pop('fitness', None)
                # self.run_callbacks('on_fit_epoch_end')
                if f is self.best:
                    LOGGER.info(f'\nValidating {f}...')
                    self.validator.args.data = self.args.data
                    self.validator.args.plots = self.args.plots
                    self.metrics = self.validator(model=f)
                    self.metrics.pop('fitness', None)
                    self.run_callbacks('on_fit_epoch_end')
        LOGGER.info(f"Results saved to {colorstr('bold', self.save_dir)}")

    def plot_training_samples(self, batch, ni):
